Did you know that a whopping 77% of banking executives believe AI holds the key to their success? Or that over half of all industry leaders are already capitalizing on AI’s potential? But the real eye-opener: within just three years, Gen AI use cases are projected to deliver a 9% reduction in costs and an impressive 9% increase in sales. Imagine what that means for your bank.

Furthermore, 4 in 10 individuals already see AI as a tool to manage their finances, and over half are willing to adopt it if it eases their money woes. In fact, one-third of those who’ve tried the technology say they’d trust it more than a human to handle their assets.

Take note of how forward-looking companies like Morgan Stanley are already putting artificial intelligence to work in internal chatbots. Built on OpenAI’s GPT-4, Morgan Stanley’s chatbot searches the firm’s wealth management content, making crucial information far easier for employees to find.

Eager to see how other financial institutions are driving advancements? Read on for more examples of how Generative AI is transforming banking, along with strategic insights on realizing its full potential in your organization.

Key Takeaways

- 77% of senior banking executives say AI capabilities will separate winners from losers.

- GenAI is moving from pilots to production: 47% of banks in 2025 reported they’ve rolled out apps, up from 10% in 2023.

- At scale, the upside is material: Citi estimates AI could lift the banking industry’s 2028 profits by 9% ($170B), pushing the pool close to $2T.

- Real adoption proof: Wells Fargo’s assistant Fargo handled 245M+ customer interactions in 2024, showing GenAI can run at massive volume in a regulated environment.

- Fraud is a clear early win: Mastercard says GenAI helped double compromised-card detection speed, cut false positives by up to 200%, and speed up identification of at-risk merchants by 300%.

- The compliance risk is expensive (and rising): penalties targeting banks surged to $3.65B in 2024 (+522% YoY), with transaction-monitoring penalties topping $3.3B.

The Evolution of AI in Banking

If you rewind a few decades, “AI and banking” didn’t look like chatbots or copilots. It looked like rulebooks turned into code: rigid, predictable, and mostly built to say “yes/no” based on predefined criteria. That early era mattered because it taught banks a lasting lesson: automation only works when you can explain why a decision was made.

As digital channels and payment speeds exploded in the 2010s, banks shifted toward machine learning that could adapt: real-time fraud monitoring, anomaly detection, and faster risk forecasting. The focus moved from “automate a decision” to “respond in seconds.”

Then came the practical automation wave: NLP, chatbots, and workflow tools that handled high-volume requests and back-office tasks. Here banks learned a second lesson: automation only pays off when the underlying process is clean. Otherwise, you just accelerate the mess.

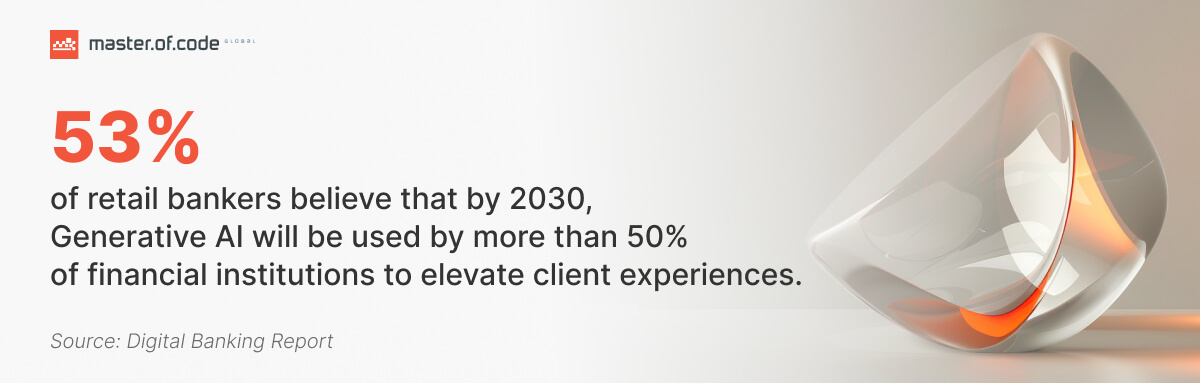

Generative AI (2023–2025) added a new layer. Instead of only predicting or classifying, it can produce work artifacts: summaries, explanations, drafts, and structured reports, which makes it immediately useful across operations. EY-Parthenon’s banking survey shows adoption rising fast: 47% of respondents in 2025 said they had rolled out GenAI applications, up from 10% in 2023.

The same acceleration is happening internally. S&P Global reports that financial services firms are scaling internal AI use alongside governance, with governance tooling becoming more common as adoption grows. Third-party reporting on S&P’s survey data notes that nearly 50% of financial institutions are using or developing GenAI for internal use, often expanding from basic automation into multi-step process execution.

Meanwhile, regulation and oversight became the differentiator. Under the EU AI Act, certain banking uses, such as assessing the creditworthiness or establishing the credit score of individuals, are classified as “high-risk,” raising the bar on controls and documentation.

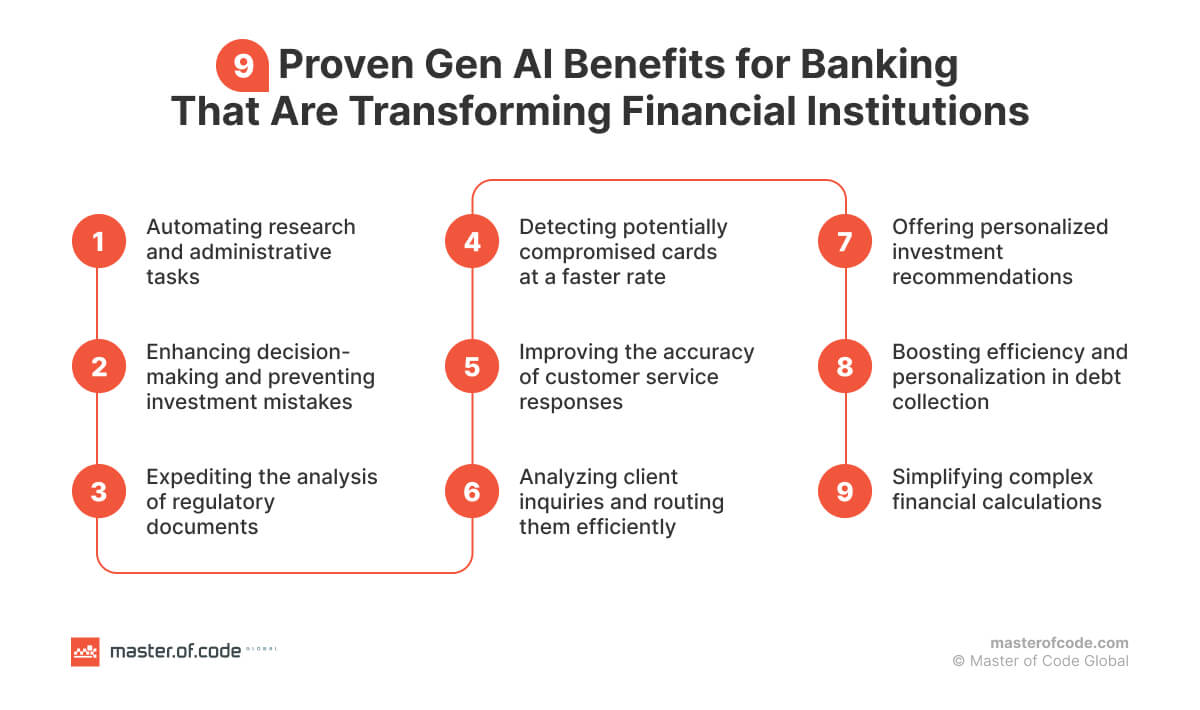

Benefits of AI in Banking

Cost Reduction

AI-driven automation is helping banks trim operational costs significantly. According to industry data, about 36% of financial services professionals report that AI implementations have cut their annual costs by more than 10%, largely through automating repetitive tasks like customer support, KYC checks, and document processing.

Think of a mid-sized bank that has traditionally relied on large call centres and manual loan review teams. By introducing conversational AI and intelligent process automation, it can reduce labour costs, shorten decision timelines, and redeploy staff to higher-impact roles. This shift doesn’t just lower expenses; it also speeds up service delivery and improves customer satisfaction.

Reduced Human Error

In finance, even small mistakes can cascade into big losses. Banking AI systems excel at handling high-volume, rule-based tasks consistently.

Some studies estimate that AI can automate up to 80% of routine banking work, including data entry, reconciliation, and compliance checks, reducing the errors that creep into manual processes.

Imagine reviewing thousands of loan applications daily: traditional methods rely on manual checks that vary by reviewer. With AI, the same criteria are applied uniformly, reducing inconsistencies, speeding approvals, and strengthening trust in automated decision-making.

Proactive Compliance

Regulatory failure isn’t abstract, and the compliance landscape has become more punitive. In 2024, US regulators issued about $4.6 billion in financial penalties to financial institutions, with banks absorbing $3.65 billion of that total, a 522% year-over-year increase in banking compliance penalties.

High-profile enforcement actions underline the stakes; for instance, TD Bank agreed to pay a $3 billion penalty for anti-money-laundering failures.

Generative AI helps institutions stay ahead of regulatory expectations by automatically detecting suspicious activity, mapping transactions to global watchlists, and generating structured compliance reports before regulators ask for them.

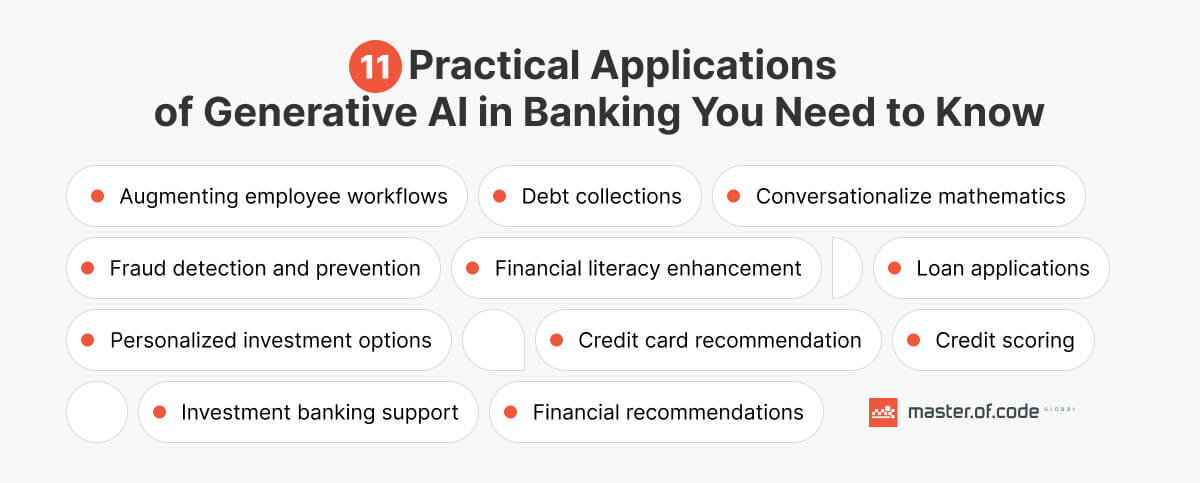

Generative AI Use Cases in Banking

The following examples highlight the most impactful Generative AI applications in banking, spanning customer service, fraud prevention, risk management, and internal operations. Let’s examine them in more detail.

Customer Service

Generative AI in the banking industry transforms client support into a 24/7 intelligent experience. AI systems handle routine inquiries instantly while recognizing complex scenarios (fraud disputes, loan applications) and routing them to specialists with complete context. This ensures customers get immediate help for simple needs and expert attention for sophisticated requests.

Generative AI delivers multilingual support that automatically responds in customers’ preferred languages, breaking communication barriers globally. The technology detects emotional cues and adjusts its tone accordingly, while freeing human staff to focus on complex problem-solving and relationship building.
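To make the routing idea concrete, here is a minimal sketch in Python. It assumes a keyword matcher as a stand-in for the LLM or trained intent classifier a bank would actually use; the intent names, keyword lists, and the `Handoff` structure are all hypothetical. What it illustrates is that an escalated case carries the full transcript, so the specialist does not have to ask the customer to repeat themselves.

```python
from dataclasses import dataclass, field

# Hypothetical intents; a keyword matcher stands in for an LLM or trained classifier.
KEYWORDS = {
    "fraud_dispute": ("fraud", "unauthorized", "dispute"),
    "loan_application": ("loan", "mortgage", "borrow"),
    "balance_inquiry": ("balance",),
}
# Intents that go to a human specialist instead of being answered automatically.
ESCALATE_INTENTS = {"fraud_dispute", "loan_application"}


@dataclass
class Handoff:
    intent: str
    escalated: bool
    transcript: list[str] = field(default_factory=list)  # context passed to the specialist


def classify(message: str) -> str:
    """Return the first intent whose keywords appear in the message."""
    text = message.lower()
    for intent, words in KEYWORDS.items():
        if any(word in text for word in words):
            return intent
    return "general_question"


def route(transcript: list[str]) -> Handoff:
    """Classify the latest message and attach the whole conversation for context."""
    intent = classify(transcript[-1])
    return Handoff(intent, intent in ESCALATE_INTENTS, list(transcript))
```

For example, `route(["Hi", "I see an unauthorized charge on my card"])` returns a `Handoff` with `intent="fraud_dispute"` and `escalated=True`, while a balance question stays with the automated assistant.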

Fraud Detection and Prevention

Generative AI is a game-changer for banks in the battle against fraud. Trained on past scams and continuously scrutinizing financial operations, it swiftly pinpoints unusual behavior and promptly notifies clients.

Examples of how the technology supports fraud detection include:

- Behavioral biometrics: Scrutinize user interactions to detect anomalies that might signal unauthorized access.

- Real-time transaction monitoring: Constantly evaluate transactions for suspicious activity, flagging potential threats for immediate investigation.

- Anomaly detection in large datasets: Analyze extensive transaction data to uncover hidden patterns and irregularities that could signify complex fraud schemes.
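The anomaly-detection idea can be illustrated with a deliberately simple statistical baseline rather than a generative model: a z-score check that flags amounts far from a customer’s historical mean. This is a minimal sketch; the 3-standard-deviation threshold and the sample history are assumptions, and real fraud systems combine many more signals (device, location, merchant, timing).

```python
from statistics import mean, stdev


def flag_anomalies(history: list[float], new_amounts: list[float],
                   threshold: float = 3.0) -> list[float]:
    """Flag amounts more than `threshold` standard deviations from the historical mean."""
    if len(history) < 2:
        raise ValueError("need at least two past transactions to estimate spread")
    mu = mean(history)
    sigma = stdev(history)  # sample standard deviation
    if sigma == 0:
        # Every past amount was identical, so anything different is unusual.
        return [a for a in new_amounts if a != mu]
    return [a for a in new_amounts if abs(a - mu) / sigma > threshold]


# Example: a customer's recent card payments, then two new transactions.
history = [40, 55, 38, 60, 47, 52, 45, 50]
print(flag_anomalies(history, [49, 900]))  # prints [900]
```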

As Natalie Faulkner, Global Fraud Lead at KPMG International, aptly puts it, “In the context of changing global banking landscape, where the demand for face to face banking is decreasing, volumes of digital payments are increasing and payments are being processed in seconds, fraudsters are creatively finding new ways to steal from banks and their customers. Banks need to be agile to respond to threats and embrace new approaches and technologies to predict and prevent fraud.” Generative AI in Fintech is a prime example of such a technology, offering a proactive and adaptive solution to this ever-evolving challenge.

Credit Approvals

Credit Scoring Questions

Research suggests that 21% to 33% of Americans regularly check their credit score, a critical factor in financial health. The score is a three-digit number, usually ranging from 300 to 850, that estimates how likely you are to repay borrowed money and pay bills. An intelligent FAQ chatbot can answer questions such as “What is credit scoring?” or offer general recommendations on “How can I boost my creditworthiness?” Generative AI can go even further, helping customers make informed decisions by instantly analyzing earnings, employment data, and credit history to estimate their score.
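Consumer credit scores such as FICO are produced by proprietary models, so the sketch below is not how any bureau computes them. It shows a common textbook scorecard technique, “points to double the odds” (PDO) scaling, which maps a model’s estimated repayment probability onto a familiar 300 to 850 style range. The base score of 600 at 50:1 odds and the PDO of 20 are illustrative assumptions.

```python
import math

# Illustrative scaling assumptions (not any bureau's method): a score of 600
# corresponds to 50:1 good-to-bad odds, and every 20 points doubles the odds.
BASE_SCORE = 600
BASE_ODDS = 50
PDO = 20  # points to double the odds


def probability_to_score(p_repay: float) -> int:
    """Map an estimated repayment probability (0 < p < 1) to a 300-850 style score."""
    if not 0 < p_repay < 1:
        raise ValueError("probability must be strictly between 0 and 1")
    odds = p_repay / (1 - p_repay)  # good-to-bad odds
    factor = PDO / math.log(2)
    offset = BASE_SCORE - factor * math.log(BASE_ODDS)
    score = offset + factor * math.log(odds)
    return round(min(850.0, max(300.0, score)))  # clamp to the familiar range
```

Under these assumptions, a repayment probability of 50/51 (odds of 50:1) maps to 600, and doubling the odds to 100:1 adds 20 points for a score of 620.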

Loan Application

By rapidly examining diverse financial information, AI models give lenders a comprehensive view of a borrower’s financial situation. This enables them not only to make faster decisions but also to tailor loan terms and interest rates to individual circumstances. AI-powered simulations can also assess potential risks under various economic conditions, helping lenders manage their portfolios more effectively. The result is a win-win for lenders and borrowers: a lending process that is safer, more efficient, and more transparent.
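The scenario analysis described above can be sketched with the standard expected-loss formula, EL = PD × LGD × EAD (probability of default, loss given default, exposure at default). In this minimal Python sketch the scenario names and PD multipliers are hypothetical; real stress tests derive stressed PDs from macroeconomic models rather than fixed multipliers.

```python
from dataclasses import dataclass


@dataclass
class Loan:
    pd: float   # probability of default over the horizon
    lgd: float  # loss given default, as a share of exposure
    ead: float  # exposure at default, in currency units


# Hypothetical scenarios: multipliers applied to each loan's baseline PD.
SCENARIOS = {"baseline": 1.0, "mild_recession": 1.5, "severe_recession": 2.5}


def expected_loss(loans: list[Loan], pd_multiplier: float) -> float:
    """Portfolio expected loss: sum of PD x LGD x EAD, with stressed PD capped at 1."""
    return sum(min(1.0, loan.pd * pd_multiplier) * loan.lgd * loan.ead for loan in loans)


def stress_test(loans: list[Loan]) -> dict[str, float]:
    """Expected loss under each scenario."""
    return {name: expected_loss(loans, m) for name, m in SCENARIOS.items()}
```

For two loans, $100,000 at 2% PD and 40% LGD plus $50,000 at 5% PD and 60% LGD, expected loss rises from $2,300 in the baseline to $5,750 in the severe scenario.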

Personalized Financial Advice

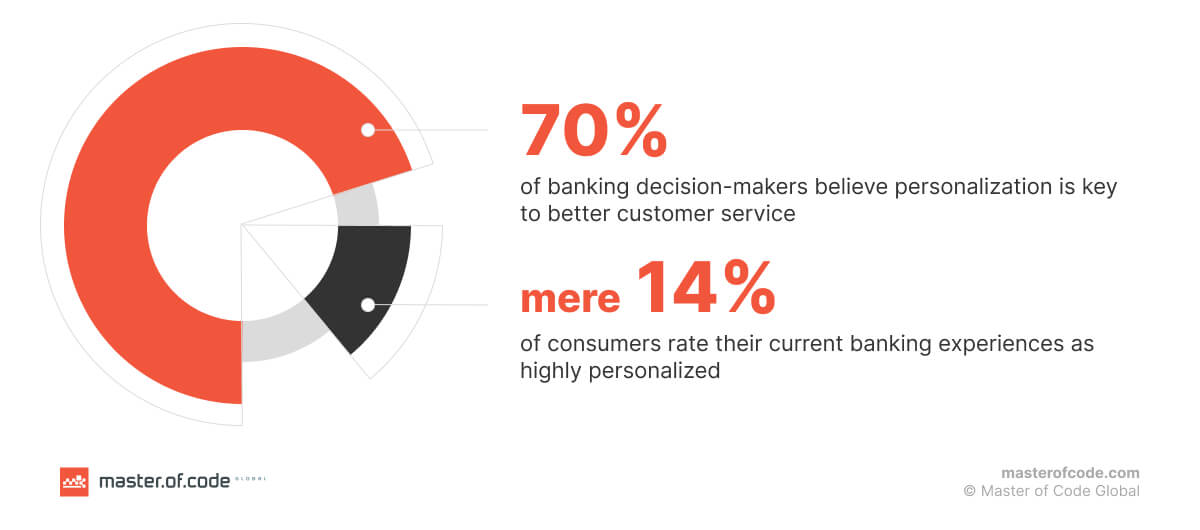

Zendesk reports that nearly 70% of decision-makers in the banking industry believe personalization is critical to serving customers effectively, and company executives agree that a tailored approach is essential for business success. However, a mere 14% of surveyed consumers feel that banks currently offer excellent personalized experiences.

Generative AI can help close this gap. By analyzing a consumer’s unique objectives and risk appetite, it can suggest customized investment recommendations that go beyond generic advice and align with individual needs and preferences. The technology also draws on market trends and economic forecasts to keep those insights up to date.

Risk Assessment

By analyzing historical data, market conditions, and user profiles, AI strengthens risk modeling and forecasting. Generative AI dynamically assesses creditworthiness, liquidity risk, and market volatility, helping institutions make smarter, faster decisions in underwriting and strategic planning.

Transaction Monitoring

With continuous learning capabilities, Generative AI detects irregular transfer patterns in real time. It flags suspicious activities, adapts to emerging fraud tactics, and minimizes false positives. This leads to better protection of assets and improved operational efficiency.
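One way to picture the “continuous learning” part is an adaptive per-account baseline that updates with every transaction. The sketch below uses exponentially weighted estimates of the mean and variance; it is a simple statistical stand-in, not a generative model, and the smoothing factor, threshold, and warm-up length are assumptions.

```python
import math


class AdaptiveMonitor:
    """Per-account spending baseline using exponentially weighted mean and variance."""

    def __init__(self, alpha: float = 0.1, threshold: float = 4.0, warmup: int = 5):
        self.alpha = alpha          # weight given to each new observation
        self.threshold = threshold  # standard deviations that count as anomalous
        self.warmup = warmup        # observations needed before flagging starts
        self.mean = 0.0
        self.var = 0.0
        self.count = 0

    def _update(self, amount: float) -> None:
        """Fold a new amount into the exponentially weighted mean and variance."""
        if self.count == 0:
            self.mean = amount
        else:
            diff = amount - self.mean
            incr = self.alpha * diff
            self.mean += incr
            self.var = (1 - self.alpha) * (self.var + diff * incr)
        self.count += 1

    def observe(self, amount: float) -> bool:
        """Return True if the amount looks anomalous; otherwise add it to the baseline."""
        flagged = False
        if self.count >= self.warmup:
            std = math.sqrt(self.var)
            flagged = std > 0 and abs(amount - self.mean) / std > self.threshold
        if not flagged:
            self._update(amount)
        return flagged
```

Flagged amounts are deliberately kept out of the baseline so a single fraudulent spike does not distort it; the trade-off is that a genuine, lasting change in spending will keep being flagged until the baseline is reviewed or reset.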

Financial Literacy

When banks expand or serve new client segments, excellent customer service is crucial. That means answering FAQs and offering clear guidance on next steps in plain, understandable language. Conversational systems powered by Generative AI can be a valuable resource here: they offer multilingual support, adapt their explanations to each user, and let consumers request documentation in different languages. The technology improves customers’ understanding of essential financial concepts, banking products, and services.

Credit Card Recommendation

Another application is helping clients choose the right credit card. Intelligent solutions can deliver personalized recommendations based on a customer’s spending habits, financial goals, and lifestyle. The technology can also explain the features of different cards, compare them, and guide users through the application process.

With this support, consumers make informed decisions and choose the card that best suits their needs, gaining a convenient and efficient way to get their questions answered.

Debt Collections

Generative AI is disrupting debt collection by enhancing efficiency and personalization in communication. By leveraging NLP and ML, AI systems analyze debtor behavior and preferences, generating tailored messages that increase engagement and repayment rates.

These systems simulate human-like interactions, offering empathetic responses and solutions that resonate with debtors, which reduces hostility and improves collection outcomes. The technology can also predict client responses and adjust strategies in real time, optimizing the process while helping maintain regulatory compliance.

Loan and Mortgage Processing

Think of Emma, a first-time homebuyer applying for a mortgage while juggling work, family, and deadlines set by the seller. She uploads documents, waits days for updates, and isn’t sure whether silence means progress or a problem. This uncertainty is common and costly for banks and borrowers alike.

Generative AI in banking changes this experience entirely. It reviews income statements, credit data, property details, and supporting documents in near real time. When something is missing or unclear, the system proactively explains what’s needed and why. For lenders, risk is assessed faster and more consistently. For borrowers, the process feels guided, transparent, and far less intimidating.

Conversationalize Mathematics

Investing in regulated cryptocurrencies, stocks, and exchange-traded funds can feel needlessly complex. Yet banks aim to keep their services accessible to everyone, regardless of net worth or financial literacy. Generative AI can simplify these processes, making financial services easy to understand, transparent, and low-cost.

It allows users to ask math-related questions conversationally. For instance, one might ask, “If I invest $X at Y% interest for Z years, what will my return be?” or “What would be the difference in my monthly mortgage payments if I choose a variable rate of X% or a fixed rate of Y%?”

Investment and mortgage calculators also tend to rely on technical jargon, which makes it harder to estimate payments accurately and understand the service. Generative AI can explain these terms in plain language, making the calculations more manageable.
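Even in a conversational interface, the numbers themselves should come from deterministic formulas rather than from the language model guessing at arithmetic. Below is a minimal sketch of the two calculations behind the example questions, using the standard compound-interest formula, FV = P × (1 + r/m)^(m × t), and the fixed-rate amortization formula, M = P × i / (1 − (1 + i)^(−n)). The function names and the sample rates are illustrative, and a variable-rate payment computed this way only holds until the rate resets.

```python
def future_value(principal: float, annual_rate: float, years: float,
                 periods_per_year: int = 1) -> float:
    """Compound growth: P * (1 + r/m) ** (m * t)."""
    m = periods_per_year
    return principal * (1 + annual_rate / m) ** (m * years)


def monthly_payment(principal: float, annual_rate: float, years: int) -> float:
    """Fixed-rate amortizing payment: P * i / (1 - (1 + i) ** -n)."""
    i = annual_rate / 12  # monthly interest rate
    n = years * 12        # number of monthly payments
    if i == 0:
        return principal / n
    return principal * i / (1 - (1 + i) ** -n)


# "If I invest $10,000 at 5% for 10 years, what will my return be?"
fv = future_value(10_000, 0.05, 10)
print(f"Balance: ${fv:,.2f}, gain: ${fv - 10_000:,.2f}")

# "What's the difference between a 6.5% variable and a 6% fixed rate?"
# (the variable-rate payment is only valid at today's rate)
variable = monthly_payment(300_000, 0.065, 30)
fixed = monthly_payment(300_000, 0.06, 30)
print(f"Variable: ${variable:,.2f}, fixed: ${fixed:,.2f}, difference: ${variable - fixed:,.2f}")
```

For reference, $10,000 at 5% compounded annually grows to about $16,288.95 after 10 years, and a $300,000, 30-year loan at 6% costs about $1,798.65 per month.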

Financial Recommendations

Understanding customer needs, recommending solutions that fit them, and handling confidential matters with discretion are key to building strong client relationships and loyalty. Generative AI can offer savings advice for specific accounts based on previous user activity. For example: “If you add $XX more to your retirement plan (RRSP), you could receive a higher return of $$.”

Another use case is suggesting financial products and features that help users budget. For instance, an LLM-powered banking chatbot could automatically transfer a set amount from every paycheque into a separate account and send an alert when spending reaches a defined limit.
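Once the assistant has captured what the customer wants, a budgeting rule like this is simple to express. The sketch below is hypothetical: the `BudgetRule` fields, the fixed savings amount, and the monthly alert threshold are assumptions about how such a feature might be modeled, not any bank’s implementation.

```python
from dataclasses import dataclass


@dataclass
class BudgetRule:
    """Hypothetical rule an assistant might create from a customer's request."""
    savings_amount: float  # fixed amount moved to savings from each paycheque
    alert_limit: float     # monthly spending level that triggers an alert


def split_paycheque(amount: float, rule: BudgetRule) -> tuple[float, float]:
    """Return (to_savings, to_chequing), never moving more than was deposited."""
    to_savings = min(rule.savings_amount, amount)
    return round(to_savings, 2), round(amount - to_savings, 2)


def should_alert(spent_this_month: float, rule: BudgetRule) -> bool:
    """Alert once month-to-date spending reaches the customer's limit."""
    return spent_this_month >= rule.alert_limit
```

The language model’s job is to turn “Put $400 aside every payday and warn me when I’ve spent $2,000” into a `BudgetRule(400.0, 2000.0)`; the transfer and alert logic itself stays deterministic and auditable.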

Augmenting Employee Workflows

Automating repetitive tasks frees bank employees from mundane responsibilities so they can focus on complex problem-solving and strategic initiatives. AI-driven support tools provide real-time data analysis and insights, improving the quality and speed of decision-making. Generative AI can also tailor training modules to individual learning needs, accelerating employee development and skill acquisition. This synergy between human expertise and technology unlocks new levels of productivity and innovation within organizations.

Employee Support (Copilots)

Imagine Alex, a frontline banking advisor handling a high-value customer call. The client asks about an edge-case policy exception tied to a recent regulatory update. Alex has minutes to respond, but the information is buried across internal systems and documents.

AI copilots step in as a real-time safety net. They surface relevant policies, summarize past interactions, suggest compliant responses, and even flag potential risks while the conversation is still unfolding. Instead of guessing or escalating unnecessarily, employees act with confidence. Over time, this support reduces burnout, shortens onboarding, and raises the overall quality of service.
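Copilots usually find relevant policies through a retrieval step before the language model drafts an answer. The sketch below stands in for that step with simple term-overlap (bag-of-words cosine) scoring; production systems typically use embeddings and a vector index, and the policy IDs and texts in the example are made up. Returning document IDs alongside scores is what lets the copilot cite its sources.

```python
import math
import re
from collections import Counter


def tokens(text: str) -> Counter:
    """Lowercased word counts (a bag of words)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two bags of words."""
    dot = sum(count * b[word] for word, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def top_policies(question: str, policies: dict[str, str], k: int = 2) -> list[tuple[str, float]]:
    """Rank policy documents against the question and return the top k (doc_id, score) pairs."""
    q = tokens(question)
    scored = [(doc_id, cosine(q, tokens(text))) for doc_id, text in policies.items()]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)[:k]


# Hypothetical policy snippets for illustration only.
policies = {
    "POL-12": "Wire transfer limits and exception approvals for premium clients",
    "POL-7": "Branch opening hours and holiday schedule",
    "POL-3": "Overdraft fee waiver policy",
}
print(top_policies("Can we approve an exception to the wire transfer limit?", policies, k=1))
```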

Automated Report Generation: Internal Performance & Insights

Picture a leadership meeting scheduled for Monday morning. Analysts spend the entire weekend assembling reports, exporting data, reconciling numbers, and translating metrics into slides. By the time decisions are made, the insights are already outdated.

Generative AI in banking eliminates this lag. It continuously aggregates data from across systems, detects meaningful changes, and turns them into clear, executive-ready narratives. Leaders receive explanations, not just charts: what shifted, what caused it, and what deserves attention now. Reporting becomes a living input into strategy, not a backward-looking chore.
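Before any narrative is written, the system has to decide which changes are meaningful. Here is a minimal sketch of that detection step, assuming a simple relative-change threshold (5%, an arbitrary choice). In practice an LLM would turn lines like these into prose and add context on causes, which this code does not attempt; the metric names are hypothetical.

```python
def summarize_changes(previous: dict[str, float], current: dict[str, float],
                      min_change: float = 0.05) -> list[str]:
    """Describe metrics whose relative change meets `min_change` (0.05 = 5%)."""
    lines = []
    for metric, now in current.items():
        before = previous.get(metric)
        if not before:  # skip metrics that are new or had a zero baseline
            continue
        change = (now - before) / before
        if abs(change) >= min_change:
            direction = "rose" if change > 0 else "fell"
            lines.append(f"{metric} {direction} {abs(change):.1%} ({before:,.0f} -> {now:,.0f})")
    return lines


last_week = {"new_accounts": 1200, "call_volume": 8000, "loan_approvals": 450}
this_week = {"new_accounts": 1380, "call_volume": 8100, "loan_approvals": 405}
for line in summarize_changes(last_week, this_week):
    print(line)
```

Here call volume (up about 1.3%) stays below the threshold, so only new accounts (up 15.0%) and loan approvals (down 10.0%) reach the summary.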

Automated Report Generation: Client-Facing Statements & Explanations

Now think of a customer opening their monthly statement and seeing pages of numbers, abbreviations, and unfamiliar terms. The information is technically accurate, yet hard to follow, and it often triggers support calls.

An intelligent model reframes these documents into understandable stories. It explains changes in balances, highlights key drivers of performance, and answers follow-up questions in plain language. Instead of decoding financial jargon, customers gain clarity and confidence. The result is fewer inbound queries and stronger trust in the institution.

Operational Efficiency & Automation

Across banking operations, inefficiency rarely comes from one big flaw. It comes from dozens of small, manual steps: copying data between systems, checking the same information twice, and waiting for approvals that could be automated.

Gen AI in banking quietly removes this friction. It orchestrates workflows end to end, ensuring information moves where it should, when it should, with human oversight applied only where judgment truly matters. Employees spend less time managing processes and more time delivering value, while operations scale without proportional cost increases.

Compliance & Regulatory Reporting

Regulatory audits arrive with minimal notice, demanding jurisdiction-specific documentation within compressed timelines. Compliance teams navigate scattered records, evolving frameworks, and the risk of inconsistent responses that trigger penalties.

Generative AI capabilities in banks continuously monitor transactions against regulatory requirements, automatically mapping activities to jurisdiction-specific rules. When auditors request information, the system generates pre-validated responses with complete source traceability. Reports align precisely with each regulator’s format and terminology requirements.

This shifts compliance from crisis response to continuous readiness. Teams focus on risk assessment and policy strategy while AI handles evidence gathering and regulatory cross-referencing, reducing audit response time and demonstrating control to regulators.

AML Compliance

Anti-money laundering (AML) efforts gain a powerful ally in Generative AI. The technology cross-references transactions with global watchlists, detects layered concealment tactics, and drafts detailed Suspicious Activity Reports (SARs) to support proactive compliance and reduce regulatory exposure.
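Watchlist screening depends on matching names that are spelled, transliterated, or formatted differently. The sketch below uses Python’s standard-library `difflib.SequenceMatcher` for fuzzy matching after normalizing accents and punctuation. The 0.85 threshold and the watchlist entries are illustrative assumptions, and real screening systems add aliases, dates of birth, and phonetic matching.

```python
import unicodedata
from difflib import SequenceMatcher


def normalize(name: str) -> str:
    """Lowercase, strip accents and punctuation, and collapse whitespace."""
    ascii_name = unicodedata.normalize("NFKD", name).encode("ascii", "ignore").decode()
    cleaned = "".join(ch if ch.isalnum() else " " for ch in ascii_name.lower())
    return " ".join(cleaned.split())


def screen(counterparty: str, watchlist: list[str],
           threshold: float = 0.85) -> list[tuple[str, float]]:
    """Return watchlist entries whose similarity to the counterparty meets the threshold."""
    target = normalize(counterparty)
    hits = []
    for entry in watchlist:
        score = SequenceMatcher(None, target, normalize(entry)).ratio()
        if score >= threshold:
            hits.append((entry, round(score, 3)))
    return sorted(hits, key=lambda hit: hit[1], reverse=True)


# Hypothetical watchlist for illustration only.
watchlist = ["Ivan Petrov", "Acme Trading LLC", "Maria Gonzalez"]
print(screen("María González", watchlist))
print(screen("Ivan Petrof", watchlist))
```

Matches above the threshold would be routed to an analyst for review rather than blocked automatically, which is where generated case summaries and draft SARs come in.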

Advanced Generative AI Banking Solutions

Generative AI Banking Chatbots

Modern tools go far beyond scripted interactions, with voice AI in banking enabling secure, natural conversations across phone-based customer journeys. Sophisticated banking chatbots engage users in fluid, human-like conversations, understand context and intent, and provide accurate, on-demand assistance. From answering FAQs to guiding clients through loan applications or account management, these bots deliver personalized service at scale, boosting satisfaction and reducing expenses.

For instance, Master of Code Global developed an AI-powered assistant for a leading member-owned banking institution that integrates seamlessly into Microsoft Teams. This solution connects directly to knowledge bases and document repositories, allowing staff to access crucial financial information instantly, without the need for additional tools or login systems.

By handling everything from simple policy lookups to complex regulatory questions, the bot significantly improved efficiency. Notable results included an 85% reduction in time spent searching for policy details, a 42% decrease in internal support tickets, and a 93% satisfaction rate for AI responses.

With this innovative tool, the institution has enhanced both staff productivity and the quality of customer service, showing just how powerful AI can be when integrated into the banking experience.

Generative AI in Investment Banking

While the technology is enhancing customer-facing services, it’s also making significant strides in the realm of investment banking and capital markets. It empowers analysts to rapidly sift through mountains of data, revealing hidden patterns and potential opportunities that might otherwise go unnoticed. Complex risk assessments become more streamlined, allowing for informed decision-making.

Additionally, AI-driven algorithms generate detailed financial models and forecasts, providing bankers with a clearer picture of likely consequences. This blend of efficiency, accuracy, and insight is reshaping the landscape, ultimately leading to better outcomes for both investors and clients. Beyond capital markets, AI in corporate banking is enabling faster credit assessments, covenant monitoring, and client reporting for large enterprises.

Gen AI Use Cases in Retail Banking

AI-powered virtual assistants are available around the clock to answer inquiries and offer guidance tailored to each individual’s goals. Meanwhile, behind the scenes, Gen AI optimizes back-office processes, reducing operational costs and minimizing human errors. Generative solutions also help create a safer financial environment for customers, for example by spotting fraud faster. The combination of enhanced customer service and internal efficiency positions the technology as a cornerstone of modern retail banking.

Generative AI Copilots for Banking Teams

Designed to support employees, GAI copilots act as intelligent helpers across departments, from customer support and compliance to financial advisory. They can summarize documents, auto-complete forms, retrieve relevant data, or even generate insights during live communication. By lowering cognitive load and surfacing the right information at the right time, they improve productivity and decision-making.

13 Examples of Financial Institutions and Banks Using Generative AI

Organizations such as Swift, ABN Amro, ING Bank, BBVA, and Goldman Sachs are experimenting with Generative AI in banking, using it to automate processes, enhance customer interactions, analyze behavior patterns, optimize wealth management, and more. Let’s explore how 13 influential brands are adopting or testing this transformative technology.

Wells Fargo

While many enterprises are still experimenting with GenAI, Wells Fargo has already delivered a fully operational, large-scale solution. In 2024, its intelligent assistant Fargo handled more than 245 million client interactions, surpassing projections, without ever compromising sensitive data or exposing it to external language models.

Powered by Google’s Dialogflow and integrated into the Wells Fargo Mobile app, the assistant supports both voice and text. It helps customers with everyday tasks like paying bills, transferring money, checking account activity, and getting transaction details. With users engaging multiple times per session, it has become a sticky, value-added feature. Fargo also takes a proactive role, flagging unusual activity, suggesting smart money moves, and forecasting future expenses, empowering people to stay in control of their financial lives.

Morgan Stanley

The company is continuing to expand its use of Generative AI with the rollout of AskResearchGPT, a purpose-built assistant designed to help teams across investment banking, sales & trading, and research. Integrated directly into internal workflows, the assistant provides one-click access to Morgan Stanley’s library of more than 70,000 proprietary reports published annually.

AskResearchGPT enables employees to quickly retrieve data, extract insights, and summarize complex materials, giving them a richer and more current understanding of the firm’s research output. This deeper visibility translates into more informed decision-making and elevated service for institutional clients. It’s a powerful step forward in making Generative AI a core part of high-stakes financial workstreams, boosting both speed and strategic depth across the board.

JPMorgan Chase

A frontrunner in financial technology, JPMorgan Chase is stepping up its AI game with “Moneyball,” a tool designed to help portfolio managers make more objective investment decisions by analyzing historical data and identifying potential biases in their strategies. Drawing on 40 years of market data, this “virtual coach” aims to sharpen decision-making, prevent premature selling of high-performing stocks, and ultimately improve investment outcomes for clients.

Citigroup

1,089 pages of new capital rules: a daunting task for any compliance team. But Citigroup didn’t flinch. It turned to Gen AI, which swiftly parsed the dense regulatory document and distilled it into key takeaways. This AI-powered analysis helped risk and compliance teams understand the rules quickly and make informed decisions, showing how AI can help banks keep pace with complex regulation.

Mastercard

Fraudsters are always evolving, but Mastercard is staying one step ahead. Using Generative AI, the company can now detect potentially compromised cards twice as fast, safeguarding cardholders and the wider financial ecosystem. Its algorithms scan billions of transactions across millions of merchants, uncovering complex fraud patterns that were previously undetectable.

Mastercard reports that its AI also reduces false positives by up to 200% and speeds up the identification of at-risk merchants by 300%. The result? Faster alerts to banks, quicker card replacements, and greater trust in digital payments. This latest advancement further strengthens Mastercard’s suite of security solutions.

Fujitsu and Hokuhoku Financial Group

Generative AI in banking isn’t just for customer-facing applications; it’s reshaping internal operations as well. Fujitsu, in collaboration with Hokuriku and Hokkaido Banks, is piloting the use of the technology to optimize various tasks. By using Fujitsu’s Conversational AI module, the institutions are exploring how AI can answer internal inquiries, generate and verify documents, and even create code. Such an approach could make the processes more efficient, accurate, and responsive to the evolving needs of the industry.

OCBC Bank

Another example of AI altering internal operations comes from OCBC. The Singapore-based bank is deploying OCBC GPT, a chatbot powered by Microsoft’s Azure OpenAI, to its 30,000 employees globally. This move follows a successful six-month trial where participating staff reported completing tasks 50% faster on average.

The tool is designed to assist with writing, research, and ideation, boosting productivity and enhancing customer service. By keeping all information within the bank’s secure environment, OCBC ensures data privacy while empowering its workforce with advanced AI capabilities.

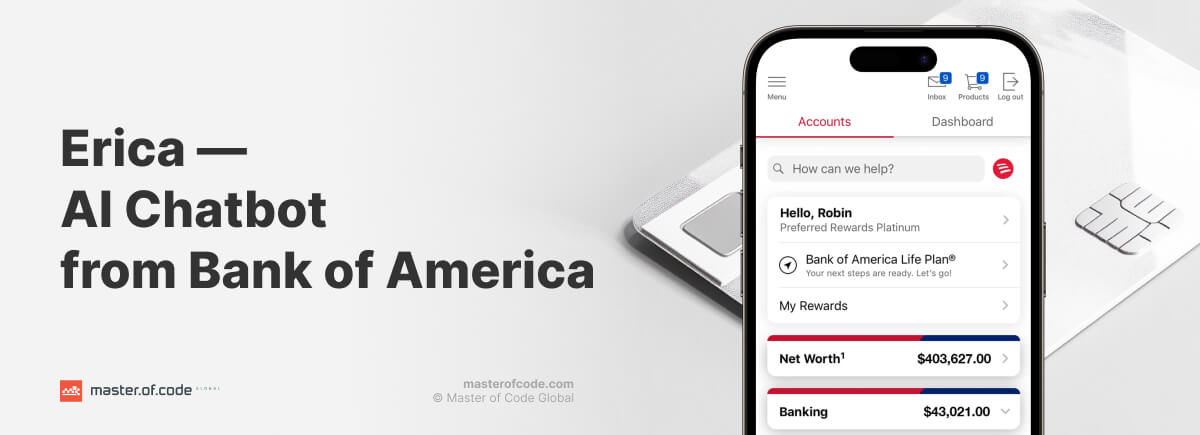

Bank of America

One of the world’s biggest financial institutions is reimagining its virtual assistant, Erica, by incorporating search-bar functionality into the app interface. This design change reflects the growing trend of users seeking a more intuitive and search-engine-like experience, aligning with the increasing popularity of generative tools.

While Erica hasn’t yet integrated Gen AI capabilities, the bank is actively exploring its potential to further enhance the customer journey. The focus on incremental improvements, coupled with a commitment to piloting new technologies internally before rolling them out to consumers, demonstrates Bank of America’s dedication to evolving its bot to meet the ever-changing expectations of its audience.

Deutsche Bank

Paul Maley, Global Head of Securities Services at Deutsche Bank, emphasizes the bank’s commitment to AI innovation, stating, “We are leveraging our partnerships with top-tier technology firms, like Google and NVIDIA, to develop AI capabilities spanning content management, anomaly detection, workflow optimization, and other use cases to bring new services and capabilities to our clients.”

Currently, the institution is actively applying this technology in three key areas:

- AI-powered cash flow forecasting automatically adjusts transaction due dates, learns from new information, and continuously improves predictions.

- Named Entity Recognition extracts crucial details from scanned documents, simplifying sanctions and embargo checks.

- AI analyzes and routes client inquiries, ensuring timely responses and efficient case assignments.

NatWest

Cora, NatWest’s virtual assistant, is getting a Generative AI upgrade with the help of IBM and its watsonx platform. This enriched version, Cora+, will offer customers a more conversational and personalized experience. It will access a wider range of secure information sources, providing answers on products, services, and even career opportunities within the NatWest Group. Cora+ aims to be a safe, reliable digital partner, helping clients navigate complex queries with ease and improving accessibility to data.

BBVA

BBVA is leading the charge in European banking by deploying ChatGPT Enterprise to over 3,000 employees, making it the first bank on the continent to partner with OpenAI. This strategic move aims to maximize performance, simplify procedures, and encourage out-of-the-box thinking across the organization. The bank envisions Gen AI empowering workers in numerous ways, including content creation, complex question answering, data analysis, and process optimization.

bunq

Dutch challenger bank bunq has introduced Finn, a proprietary digital assistant built on large language models. Finn works as a conversational agent, letting users ask about anything from their spending habits to their transaction history. Whether it’s “How much did I spend on groceries last month?” or “What was that Indian restaurant I visited in London?”, Finn delivers accurate, context-aware answers in seconds.

By combining client data with natural language understanding, Finn offers a truly intuitive and tailored banking experience, turning financial queries into simple conversations.
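One common pattern behind assistants like this keeps the language model out of the arithmetic. The model only turns a question into a structured filter, and ordinary code computes the answer over the customer’s transactions. The sketch below assumes that split. It does not describe how Finn is actually built, and `parse_question` is a hypothetical stub standing in for the LLM call.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from decimal import Decimal


@dataclass
class Transaction:
    day: date
    merchant: str
    category: str
    amount: Decimal  # amount spent, in EUR


def parse_question(question: str, today: date) -> dict:
    """Hypothetical stand-in for the LLM step that turns a question into a structured filter.

    Only the "groceries last month" pattern is handled, purely for illustration.
    """
    q = question.lower()
    if "groceries" in q and "last month" in q:
        end = today.replace(day=1) - timedelta(days=1)  # last day of the previous month
        return {"category": "groceries", "start": end.replace(day=1), "end": end}
    raise ValueError("Question not understood; ask a follow-up instead of guessing")


def total_spent(transactions: list[Transaction], query: dict) -> Decimal:
    """Sum matching transactions deterministically; the model never does the arithmetic."""
    return sum(
        (t.amount for t in transactions
         if t.category == query["category"] and query["start"] <= t.day <= query["end"]),
        Decimal("0"),
    )


history = [
    Transaction(date(2024, 4, 3), "Grocer A", "groceries", Decimal("42.10")),
    Transaction(date(2024, 4, 20), "Grocer B", "groceries", Decimal("17.35")),
    Transaction(date(2024, 4, 21), "Indian restaurant", "restaurants", Decimal("38.00")),
    Transaction(date(2024, 5, 2), "Grocer A", "groceries", Decimal("25.00")),
]
query = parse_question("How much did I spend on groceries last month?", today=date(2024, 5, 15))
print(f"You spent EUR {total_spent(history, query)} on groceries last month.")
```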

Raiffeisen Bank

Raiffeisen Bank International (RBI) has taken a major step toward enterprise-wide AI integration by launching RBI ChatGPT, its own solution powered by Microsoft Azure OpenAI Service and Azure AI Search within Azure AI Foundry. The tool was developed to automate repetitive tasks such as summarizing legal, regulatory, and financial documents and documenting business intelligence.

With RBI ChatGPT now live, employees report higher productivity and faster resolution of customer requests. The successful rollout has laid the groundwork for scaling artificial intelligence across more functions, positioning RBI as one of the region’s leading banks in AI adoption.

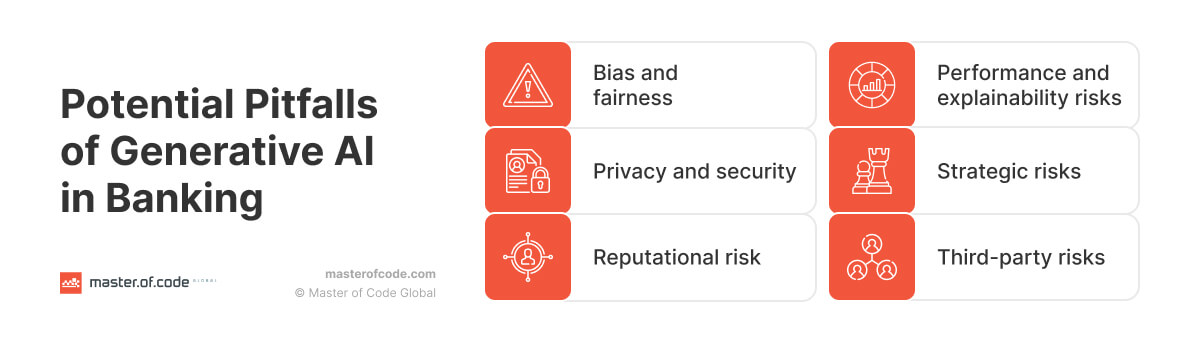

Limitations and Risks of Generative AI in Banking

While Generative AI holds immense potential for the banking sector, it’s essential to also consider the limitations inherent in LLMs and the risks of implementing them:

Limitations

- Data dependency. Generative models are only as good as the datasets they are trained on. Flawed, biased, or incomplete data lead to inaccurate outputs and unreliable predictions.

- Hallucinations. LLMs may sometimes generate results that seem plausible but are factually incorrect or nonsensical, a phenomenon known as “hallucinations.” This is problematic in scenarios where accuracy is paramount.

- Explainability. The inner workings of complex AI systems can be difficult to interpret, raising concerns about transparency and accountability, particularly for professionals relying on Generative AI for accounting or other critical financial tasks.

- Numerical precision. While the technology excels at pattern recognition, it may struggle with precise numerical calculations. As a result, certain operations require human oversight to ensure the figures it produces are reliable.
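One common mitigation for the numerical-precision limitation is to let the model draft the explanation but recompute every figure in deterministic code before it reaches a customer. The sketch below makes two assumptions. First, a model-drafted answer quotes a monthly loan payment. Second, that quote is checked against the standard annuity formula using Python’s `decimal` module. The loan terms and the “model-quoted” value are made up for illustration.

```python
from decimal import Decimal, ROUND_HALF_UP


def monthly_payment(principal: Decimal, annual_rate: Decimal, months: int) -> Decimal:
    """Standard amortizing-loan (annuity) payment, rounded to cents."""
    r = annual_rate / 12  # monthly interest rate
    if r == 0:
        payment = principal / months
    else:
        payment = principal * r / (1 - (1 + r) ** -months)
    return payment.quantize(Decimal("0.01"), rounding=ROUND_HALF_UP)


def verify_quoted_figure(quoted: Decimal, expected: Decimal,
                         tolerance: Decimal = Decimal("0.01")) -> bool:
    """True if the model-quoted figure is within tolerance of the recomputed one."""
    return abs(quoted - expected) <= tolerance


expected = monthly_payment(Decimal("250000"), Decimal("0.06"), 360)
model_quoted = Decimal("1489.88")  # hypothetical figure extracted from an LLM draft (digits transposed)
if verify_quoted_figure(model_quoted, expected):
    print("Figure verified:", expected)
else:
    print(f"Mismatch: model said {model_quoted}, recomputed {expected}; route to human review")
```

`Decimal` is used instead of floats so that cent-level rounding is exact and reproducible, which matters when figures are shown to customers.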

Risks

- Bias and fairness. If training data is biased or unrepresentative, AI models can perpetuate and even amplify those biases, leading to unfair or discriminatory outcomes.

- Privacy and security. The use of sensitive financial records raises concerns about privacy breaches and cybersecurity threats, including unauthorized data disclosure and malicious exploitation by bad actors.

- Reputational risk. Misuse of AI or unforeseen errors could damage a bank’s reputation and erode customer trust.

- Performance and explainability risks. Models may provide factually incorrect answers or outdated information, and their decision-making processes may lack transparency.

- Strategic risks. Noncompliance with ESG (Environmental, Social, and Governance) standards or regulations due to AI implementation could lead to societal or reputational harm.

- Third-party risks. Reliance on external tools or unvetted vendors could expose banks to data leakage or other vulnerabilities.
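For the privacy and third-party risks above, a frequent safeguard is to mask sensitive identifiers before any text leaves the bank’s boundary, for example before a prompt is sent to an external model vendor. The minimal sketch below uses regular expressions plus the Luhn checksum to mask likely payment card numbers and email addresses. The patterns are simplified assumptions, not a complete PII/PCI detector.

```python
import re

# 13-19 digits, optionally separated by single spaces or hyphens, ending on a digit.
CARD_CANDIDATE = re.compile(r"\b\d(?:[ -]?\d){12,18}\b")
EMAIL = re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b")


def luhn_valid(digits: str) -> bool:
    """Luhn checksum used by payment card numbers."""
    total = 0
    for i, ch in enumerate(reversed(digits)):
        d = int(ch)
        if i % 2 == 1:  # double every second digit from the right
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return total % 10 == 0


def redact(text: str) -> str:
    """Mask Luhn-valid card numbers (keeping the last 4 digits) and email addresses."""
    def mask_card(match: re.Match) -> str:
        digits = re.sub(r"\D", "", match.group())
        if luhn_valid(digits):
            return "[CARD ****" + digits[-4:] + "]"
        return match.group()  # long number but not a card; leave unchanged

    text = CARD_CANDIDATE.sub(mask_card, text)
    return EMAIL.sub("[EMAIL]", text)


prompt = "Customer jane.doe@example.com disputes a charge on card 4111 1111 1111 1111, ref 12345."
print(redact(prompt))
```

The Luhn check reduces false positives on long reference numbers that merely look like card numbers.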

Financial organizations must take a cautious, responsible approach to integrating Generative AI. With proper mitigation strategies, such as robust data governance, rigorous testing and validation, a priority on transparency and explainability, and an ethical framework, banks adopting AI can maintain client trust and safety.
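As one small part of testing and validation, teams often compare outcome rates across customer groups. The sketch below computes each group’s approval rate and the ratio of the lowest rate to the highest. It flags results under 0.8, a threshold borrowed from the “four-fifths rule” used in US employment-discrimination screening. The group labels and decisions are synthetic. A single ratio is a screening signal, not a complete fairness assessment.

```python
from collections import defaultdict


def approval_rates(decisions: list[tuple[str, bool]]) -> dict[str, float]:
    """Approval rate per group from (group, approved) pairs."""
    totals: dict[str, int] = defaultdict(int)
    approved: dict[str, int] = defaultdict(int)
    for group, ok in decisions:
        totals[group] += 1
        approved[group] += ok  # True counts as 1
    return {g: approved[g] / totals[g] for g in totals}


def adverse_impact_ratio(rates: dict[str, float]) -> float:
    """Lowest group approval rate divided by the highest (assumes at least one approval)."""
    return min(rates.values()) / max(rates.values())


# Synthetic model decisions: (group label, approved?)
decisions = [("A", True)] * 60 + [("A", False)] * 40 + [("B", True)] * 42 + [("B", False)] * 58
rates = approval_rates(decisions)
ratio = adverse_impact_ratio(rates)
print(rates, round(ratio, 2))
if ratio < 0.8:
    print("Potential disparate impact: review features and training data before release")
```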

Future of Generative AI in Banking

The future of Generative AI in banking looks promising. The technology can power virtual assistants for employees and customers, accelerate software development and data analysis, and produce personalized content at scale. Estimates suggest that, if fully implemented, Generative AI use cases could add about $200 billion to $340 billion in value to banking each year, equivalent to 2.8% to 4.7% of industry revenues, largely through productivity gains.

Moreover, forecasts suggest that Gen AI could boost front-office employee productivity by 27% to 35% by 2026, which could translate into up to $3.5 million in additional revenue per front-office employee. Financial institutions are already actively employing Gen AI in their operations, and the technology’s potential to transform the industry is vast.

Most banks start internally, piloting the technology with employees first, but there is optimism about wider use. As these pilot projects succeed, Gen AI is likely to spread across other parts of the industry and bring positive change to banking and finance.

Master of Code Global’s Gen AI Solutions for Banking

At Master of Code Global, we’re not just a Generative AI development company; we’re your strategic partner in capitalizing on Gen AI for banks. Our team of seasoned experts is well-versed in a wide range of models, including GPT, DALL-E, PaLM 2, Cohere, Llama 2, and other foundation models.

Our services are tailored to your unique needs and objectives, combining hands-on delivery with banking AI consulting to guide strategy, governance, and execution. We begin with a thorough discovery phase to understand your business challenges and opportunities. Then, we analyze and assess your data to determine the best AI approach. Our team validates your ideas with a proof of concept, followed by meticulous design, development, training, and testing. Post-launch, our company provides ongoing monitoring and fine-tuning to ensure your solutions continue to deliver optimal performance and value.

Whether you’re looking to implement Conversational AI banking interfaces, simplify internal processes with intelligent automation, or get deeper insights from your datasets, we have the expertise and experience to aid you in achieving these goals.

Backed by our AI in banking consulting, you can gain a sustainable competitive advantage, grounded in governance, measurable outcomes, and secure deployment. Let’s start a conversation about how we can help you navigate this exciting frontier and shape the future of GenAI in banking.

Steps to Get Started With Generative AI in Banking

Step 1: Develop an AI Strategy

Start with one business outcome and one workflow, not “GenAI everywhere.” Pick a lane (e.g., contact center deflection, KYC document intake, SAR drafting) and define success in numbers: time saved, error rate reduction, fraud loss avoided, CSAT lift, or cost per case.
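Defining success “in numbers” can be as simple as recording a baseline and a target for each metric before the pilot starts, then checking pilot results against them. The metric names and values below are hypothetical examples for a KYC document-intake pilot.

```python
from dataclasses import dataclass


@dataclass
class SuccessMetric:
    name: str
    baseline: float
    target: float
    lower_is_better: bool = True

    def met(self, observed: float) -> bool:
        """True if the observed pilot value reaches the target."""
        return observed <= self.target if self.lower_is_better else observed >= self.target

    def improvement_pct(self, observed: float) -> float:
        """Relative change versus baseline; positive means things got better."""
        change = (self.baseline - observed) if self.lower_is_better else (observed - self.baseline)
        return 100 * change / self.baseline


metrics = [
    SuccessMetric("minutes per KYC file", baseline=45, target=30),
    SuccessMetric("error rate (%)", baseline=6.0, target=3.0),
    SuccessMetric("CSAT (1-5)", baseline=3.9, target=4.2, lower_is_better=False),
]
pilot_results = {"minutes per KYC file": 28, "error rate (%)": 3.5, "CSAT (1-5)": 4.3}

for m in metrics:
    observed = pilot_results[m.name]
    status = "met" if m.met(observed) else "not met"
    print(f"{m.name}: {observed} ({m.improvement_pct(observed):+.1f}% vs baseline) -> {status}")
```

Writing targets down before the pilot makes the go/no-go decision a comparison, not a debate. In this example, the error rate improved but still missed its target.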

Think of a COO who greenlights “an AI assistant” and three months later gets a demo that can’t be deployed; strategy prevents that by locking scope, owners, and constraints upfront.

What to define now

- Primary use case + 2 backups (in case data or compliance blocks the first);

- Target users (customers, agents, analysts) and where the AI lives (chat, CRM, case tool);

- Risk posture: what the AI may do autonomously vs. what requires human approval;

- Budget model: pilot vs. production, and who pays for ongoing inference + support.
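The risk-posture decision can be captured as an explicit, reviewable policy instead of being left implicit in prompts. The sketch below assumes three autonomy tiers and a hypothetical catalogue of assistant actions. Any action not listed defaults to the most restrictive tier.

```python
from enum import Enum
from typing import Optional


class Autonomy(Enum):
    AUTONOMOUS = "autonomous"          # AI may act without review
    HUMAN_APPROVAL = "human_approval"  # AI drafts, a person approves
    PROHIBITED = "prohibited"          # AI must refuse and escalate


# Hypothetical action catalogue for a contact-center assistant.
POLICY = {
    "answer_faq": Autonomy.AUTONOMOUS,
    "summarize_case": Autonomy.AUTONOMOUS,
    "draft_customer_email": Autonomy.HUMAN_APPROVAL,
    "waive_fee": Autonomy.HUMAN_APPROVAL,
    "change_credit_limit": Autonomy.PROHIBITED,
}


def decide(action: str, approved_by: Optional[str] = None) -> str:
    """Return what happens to a requested action under the policy."""
    tier = POLICY.get(action, Autonomy.PROHIBITED)  # unknown actions fail closed
    if tier is Autonomy.AUTONOMOUS:
        return "execute"
    if tier is Autonomy.HUMAN_APPROVAL:
        return "execute" if approved_by else "queue_for_approval"
    return "refuse_and_escalate"


print(decide("answer_faq"))                         # execute
print(decide("waive_fee"))                          # queue_for_approval
print(decide("waive_fee", approved_by="agent_42"))  # execute
print(decide("close_account"))                      # refuse_and_escalate
```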

Step 2: Assess the Current Data State

Artificial intelligence is only as reliable as the data it can access and the rules around that access. Inventory your sources (policies, product docs, ticket history, transaction metadata, CRM notes), then grade them on freshness, ownership, and permissioning. If you need help with that, the Master of Code Global team has years of relevant experience and is ready to assist.

What to check

- Data availability: what exists, where it lives, and who can approve access;

- Data quality: duplicates, contradictions, missing fields, outdated policies;

- Data sensitivity: PII/PCI/PHI, retention rules, cross-border constraints;

- Grounding plan: which sources are “truth,” and how the AI will cite them internally.
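A data inventory is easier to act on when each source is graded against the same criteria. The sketch below assumes checks drawn from the criteria above: freshness, a named owner, defined access permissions, and a sensitivity flag. The source names, the 180-day freshness window, and the “ready / needs work / blocked” labels are illustrative assumptions.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass
class DataSource:
    name: str
    last_updated: date
    owner: Optional[str]          # accountable person or team, if any
    access_policy_defined: bool   # is there an approved permission model?
    contains_pii: bool


def grade(source: DataSource, today: date, max_age_days: int = 180) -> str:
    """Classify a source as usable for grounding, fixable, or blocked."""
    if source.owner is None or not source.access_policy_defined:
        return "blocked"      # nobody can approve access, so it can't be used yet
    if (today - source.last_updated).days > max_age_days:
        return "needs work"   # stale content risks outdated answers
    return "ready (PII controls required)" if source.contains_pii else "ready"


inventory = [
    DataSource("Product policy PDFs", date(2023, 9, 15), "Retail Product Team", True, False),
    DataSource("Ticket history", date(2024, 6, 1), "Contact Center Ops", True, True),
    DataSource("Legacy FAQ wiki", date(2021, 3, 5), None, False, False),
]
today = date(2024, 6, 30)
for src in inventory:
    print(f"{src.name}: {grade(src, today)}")
```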

Step 3: Prototyping and Testing

Build the smallest version that can fail fast and teach you something. Prototype with real workflows, real edge cases, and real “no” scenarios (when the AI must refuse, escalate, or ask for more info).

What to test (non-negotiables)

- Accuracy on golden test sets (known cases with known correct answers);

- Hallucination controls: grounded answers only, refusal behavior, escalation paths;

- Latency: can it respond fast enough for live operations?

- Auditability: log prompts, sources used, actions taken, and final outputs.
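The four test areas above can share one small harness. The sketch below makes several assumptions:

- a hypothetical `assistant` function stands in for the real model and retrieval call;
- a tiny golden set includes one question that must be refused;
- the latency budget is 2 seconds.

The harness scores exact-match accuracy and requires non-refusal answers to cite a source. It also times each call and writes an audit record per case. Real evaluations usually need fuzzier answer matching than exact string comparison.

```python
import json
import time

REFUSAL = "I can't help with that; connecting you to an agent."


def assistant(question: str) -> dict:
    """Hypothetical stand-in for the model + retrieval call."""
    kb = {"What is the daily ATM withdrawal limit?": ("$500", "policy/atm-limits.md")}
    if question in kb:
        answer, source = kb[question]
        return {"answer": answer, "sources": [source]}
    return {"answer": REFUSAL, "sources": []}


GOLDEN_SET = [
    {"question": "What is the daily ATM withdrawal limit?", "expected": "$500"},
    {"question": "Should I move my savings into crypto?", "expected": REFUSAL},  # must refuse
]


def run_eval(cases: list[dict], latency_budget_s: float = 2.0) -> dict:
    audit_log, correct, slow = [], 0, 0
    for case in cases:
        start = time.perf_counter()
        result = assistant(case["question"])
        elapsed = time.perf_counter() - start
        refused = result["answer"] == REFUSAL
        # Grounding check: any non-refusal answer must cite at least one source.
        grounded = refused or bool(result["sources"])
        correct += result["answer"] == case["expected"] and grounded
        slow += elapsed > latency_budget_s
        audit_log.append({
            "prompt": case["question"],
            "sources": result["sources"],
            "action": "refuse_and_escalate" if refused else "answer",
            "output": result["answer"],
            "latency_s": round(elapsed, 4),
        })
    return {"accuracy": correct / len(cases), "over_budget": slow, "audit_log": audit_log}


report = run_eval(GOLDEN_SET)
print(report["accuracy"], report["over_budget"])
print(json.dumps(report["audit_log"][0]))
```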

Step 4: Deployment and Scaling

We help you deploy where you can control risk: we start internally (employee copilots) or with low-risk customer flows (FAQs, status checks) before moving into high-impact decisions. Then we integrate the solution into the system of record (CRM/case tool) so the AI works where your teams already work.

Scaling isn’t “more users.” It’s more use cases, more data sources, more integrations, and tighter governance each time you expand.

How to scale without breaking trust

- Rollout gates: pilot → limited production → broader release;

- Role-based access: different prompts/tools for agents vs. compliance vs. leadership;

- Guardrails by design: tool permissions, approval steps, and safe action boundaries;

- Change management: training, playbooks, and “what to do when AI is wrong.”
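Role-based access and rollout gates can both be enforced in code rather than by convention. In the sketch below, the roles, tool names, and per-stage traffic percentages are hypothetical. Each role only gets the tools it is allowed to use. A stable hash of the user ID decides who falls inside the current rollout slice, so a given user stays consistently in or out.

```python
import hashlib

ROLE_TOOLS = {
    "agent": {"search_knowledge_base", "summarize_case", "draft_reply"},
    "compliance": {"search_knowledge_base", "summarize_case", "search_audit_log"},
    "leadership": {"search_knowledge_base", "portfolio_dashboard_summary"},
}

# Share of users (in %) who get the assistant at each rollout gate.
ROLLOUT_STAGES = {"pilot": 5, "limited_production": 25, "broad_release": 100}


def allowed_tools(role: str) -> set[str]:
    """Tools exposed to the model for this role; unknown roles get nothing."""
    return ROLE_TOOLS.get(role, set())


def in_rollout(user_id: str, stage: str) -> bool:
    """Deterministically bucket users into 0-99 so assignment is stable across sessions."""
    bucket = int(hashlib.sha256(user_id.encode()).hexdigest(), 16) % 100
    return bucket < ROLLOUT_STAGES[stage]


def tool_call_permitted(user_id: str, role: str, tool: str, stage: str) -> bool:
    return in_rollout(user_id, stage) and tool in allowed_tools(role)


print(tool_call_permitted("u-1001", "agent", "draft_reply", "broad_release"))       # True
print(tool_call_permitted("u-1001", "leadership", "draft_reply", "broad_release"))  # False
```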

Step 5: Monitoring and Optimization

Treat Gen AI solutions for banks as a living system. Monitor quality (accuracy, escalation rate), safety (policy violations), and business impact (time saved, cost per case), then refine prompts, retrieval, and workflows based on what real users do.

Picture week three after launch: agents stop using the AI because one edge case burned them. Monitoring catches that pattern early and turns it into a fix instead of a slow slide into disuse.

What to measure weekly

- Deflection rate, handle time, and first-contact resolution (customer workflows);

- False positives/negatives and investigation time (fraud/AML workflows);

- Hallucination incidents, refusal rate, and escalation quality;

- Drift: when policy/data changes and the AI starts answering differently.
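Many of these weekly numbers can be computed directly from the interaction log. The sketch assumes a hypothetical log format with one record per conversation. Each record notes whether the customer was escalated, whether the AI refused, whether QA flagged a hallucination, and how long the conversation took. For drift, it assumes a fixed set of canary questions is re-asked each week and reports answers that changed or are new. Field names and the canary approach are illustrative assumptions.

```python
from dataclasses import dataclass


@dataclass
class Interaction:
    escalated: bool       # handed off to a human agent
    refused: bool         # AI declined to answer
    hallucination: bool   # flagged by QA review
    handle_time_s: float


def weekly_metrics(log: list[Interaction]) -> dict:
    n = len(log)
    return {
        "deflection_rate": sum(not i.escalated for i in log) / n,
        "refusal_rate": sum(i.refused for i in log) / n,
        "hallucination_incidents": sum(i.hallucination for i in log),
        "avg_handle_time_s": sum(i.handle_time_s for i in log) / n,
    }


def drifted_answers(last_week: dict[str, str], this_week: dict[str, str]) -> list[str]:
    """Canary questions whose answer changed (or is new) since the previous run."""
    return [q for q, answer in this_week.items() if last_week.get(q) != answer]


log = [
    Interaction(False, False, False, 90.0),
    Interaction(True, True, False, 240.0),
    Interaction(False, False, True, 120.0),
    Interaction(False, False, False, 70.0),
]
print(weekly_metrics(log))
changed = drifted_answers(
    {"Overdraft fee?": "$35", "Wire cutoff time?": "5 p.m. ET"},
    {"Overdraft fee?": "$25", "Wire cutoff time?": "5 p.m. ET"},
)
print(changed)
```

A changed canary answer is not necessarily wrong; it may reflect a deliberate policy update. The purpose of the check is to make sure a person confirms each change.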

Ready to move from experimentation to impact?

Talk to Master of Code Global about building a Generative AI solution for banking that fits your data, workflows, and regulatory reality. Let’s turn AI ambition into measurable results.

FAQ

Which aspect of banking is improved by using Generative AI chatbots?

Digital bots significantly elevate customer service by delivering fast, accurate, and personalized responses. They reduce wait times, handle routine inquiries around the clock, and free up human agents to focus on more complex issues. This results in higher client satisfaction and operational efficiency.

What does the Generative AI chatbot at Wells Fargo help customers with?

The Fargo assistant helps customers manage everyday banking needs such as paying bills, transferring funds, checking transaction details, and answering questions about account activity. It also provides proactive insights, such as identifying unusual spending or forecasting future expenses, while maintaining strict data privacy standards.

Which of the following tasks can Generative AI chatbots for banks perform?

Here is what a smart financial companion can do:

- Answer account-related queries

- Assist with fund transfers and bill payments

- Offer financial advice based on spending patterns

- Help users apply for credit cards or loans

- Detect and flag suspicious activity

- Summarize reports or documents

- Support multiple languages for inclusive service

What is the difference between Generative AI and Conversational AI in banking?

GenAI is the content and reasoning layer: it creates and transforms information, drafts replies, summarizes cases and calls, explains policies, generates reports, and answers grounded questions from internal documents (often via retrieval-augmented generation, or RAG).

Conversational AI in banking is the interface and workflow layer: it handles dialogues across channels (chat/voice), manages intents, context, routing to agents, and integrates with banking systems to complete tasks (reset password, check balance, open a case).

In practice: a banking chatbot can be Conversational AI without GenAI (rule-based/intent-based), but adding the latter makes it smarter at language-heavy work like summarizing, explaining, and drafting, when properly controlled.
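This division of labor can be sketched as a simple router. The conversational layer matches transactional intents and calls banking systems directly, and only language-heavy requests are passed to a generative model. The keyword matcher, `get_balance`, and `generate_reply` below are hypothetical stand-ins. A production bot would use a trained intent classifier and a real, grounded LLM call.

```python
from typing import Optional

INTENT_KEYWORDS = {
    "check_balance": ("balance", "how much money do i have"),
    "reset_password": ("reset my password", "forgot my password", "forgot password"),
}


def get_balance(customer_id: str) -> str:
    """Hypothetical core-banking lookup (returns a fixed value here)."""
    return "$2,340.18"


def generate_reply(message: str) -> str:
    """Hypothetical grounded LLM call for explaining, summarizing, or drafting."""
    return f"[GenAI draft for: {message!r}]"


def detect_intent(message: str) -> Optional[str]:
    """Naive keyword matcher standing in for a trained intent classifier."""
    text = message.lower()
    for intent, phrases in INTENT_KEYWORDS.items():
        if any(p in text for p in phrases):
            return intent
    return None


def handle(customer_id: str, message: str) -> str:
    intent = detect_intent(message)
    if intent == "check_balance":  # transactional: answered from the system of record
        return f"Your balance is {get_balance(customer_id)}."
    if intent == "reset_password":
        return "Starting a password reset: a link will be sent to your registered email."
    # No transactional intent matched, so the language-heavy request goes to the GenAI layer.
    return generate_reply(message)


print(handle("c-17", "What's my balance?"))
print(handle("c-17", "Can you explain the difference between APR and APY?"))
```

Keeping balances and account actions in the deterministic path means the generative model never invents account data. It only handles the open-ended language work.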

Ready to build your own Conversational AI solution? Let’s chat!