LOFT: LLM-Orchestrator Open Source Framework

Building sophisticated Conversational AI is complex, but our framework accelerates the entire development lifecycle for faster, more efficient delivery. This means reduced time, seamless integration with your existing systems, and effortless future scaling. Want to see exactly how LOFT helps us implement your AI project faster than our competitors? Get the voice deck presenting the framework and dive into the benefits, numbers, and real examples!

43%

Less effort for initial project setup

20%

Of budget may be saved when scaling before MVP

3x

Faster project support

What is this product all about?

For Our Clients

LOFT empowers us to deliver AI solutions with unprecedented speed and efficiency. This translates to a faster time-to-market, allowing you to capitalize on opportunities and gain a competitive edge. By optimizing resources and streamlining development processes, we reduce overall project costs, maximizing your return on investment. Furthermore, LOFT’s inherent flexibility guarantees your AI solutions can seamlessly scale alongside your business growth, adapting to future demands without costly overhauls.

For Our Partners

The LLM-Orchestrator Open Source Framework enables us to enrich your existing platforms with cutting-edge AI capabilities, expanding your service offerings and strengthening your market position. We can seamlessly integrate LOFT to introduce new AI-powered features, or leverage its modularity to swap in cost-effective alternatives to existing solutions, maximizing value for your clients. This collaborative approach not only reimagines your platform’s capabilities but also drives customer satisfaction by ensuring smooth, reliable, and engaging experiences.

What Are the 6 Interchangeable Components of LOFT?

The LOFT architecture is modular, allowing for flexible and adaptable project creation and ongoing maintenance. This means we can tailor solutions precisely to your needs, ensuring optimal performance and scalability.

SMG

Stateful Messages Gateway is the heart of communication, orchestrating sessions and chats with precision and efficiency. Think of it as the air traffic controller for your AI, making sure that every interaction flows smoothly.
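To make the idea of a stateful gateway concrete, here is a minimal sketch in Python. It is not LOFT's actual API: `Session`, `MessageGateway`, and `echo_with_turn_count` are hypothetical names chosen for illustration. The sketch only shows the general pattern of keeping one session per conversation and routing each message through a handler that can see that session's history.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class Session:
    """Hypothetical per-conversation state: an id plus the message history."""
    session_id: str
    history: List[str] = field(default_factory=list)


class MessageGateway:
    """Hypothetical stateful gateway: looks up (or creates) the session for
    each incoming message and records both sides of the exchange."""

    def __init__(self, handler: Callable[[Session, str], str]) -> None:
        self._handler = handler
        self._sessions: Dict[str, Session] = {}

    def handle(self, session_id: str, text: str) -> str:
        session = self._sessions.setdefault(session_id, Session(session_id))
        session.history.append(f"user: {text}")
        reply = self._handler(session, text)
        session.history.append(f"bot: {reply}")
        return reply

    def history(self, session_id: str) -> List[str]:
        # Return a copy so callers cannot mutate the stored history.
        return list(self._sessions[session_id].history)


def echo_with_turn_count(session: Session, text: str) -> str:
    """Stand-in handler (an assumption): replies with the user's turn number."""
    turn = sum(1 for line in session.history if line.startswith("user: "))
    return f"turn {turn}: {text}"


gateway = MessageGateway(echo_with_turn_count)
```

Because state is keyed by session id, two users talking at the same time do not see each other's history, which is the property the air traffic controller analogy points at.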

Input/Output Middlewares

These powerful tools customize and optimize user data interaction. They act as gatekeepers, strengthening security, ensuring compliance, and refining dialogue flow for a seamless user experience.
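As a rough illustration of the gatekeeper role, the Python sketch below chains simple functions before and after a model call. All names (`redact_emails`, `collapse_whitespace`, `run_pipeline`, `respond`) are hypothetical and are not taken from LOFT; the email pattern is deliberately simplistic and would not be sufficient for real compliance work.

```python
import re
from typing import Callable, List

# A middleware here is assumed to be any function from text to text.
Middleware = Callable[[str], str]

# Simplistic pattern for illustration only; real PII detection needs more.
EMAIL_PATTERN = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")


def redact_emails(text: str) -> str:
    """Input middleware: mask email addresses before they reach the model."""
    return EMAIL_PATTERN.sub("[email]", text)


def collapse_whitespace(text: str) -> str:
    """Output middleware: normalize spacing in the model's reply."""
    return " ".join(text.split())


def run_pipeline(text: str, middlewares: List[Middleware]) -> str:
    """Apply each middleware in order, feeding the result to the next."""
    for middleware in middlewares:
        text = middleware(text)
    return text


def respond(
    user_text: str,
    model: Callable[[str], str],
    input_middlewares: List[Middleware],
    output_middlewares: List[Middleware],
) -> str:
    """Run input middlewares, call the model, then run output middlewares."""
    cleaned = run_pipeline(user_text, input_middlewares)
    raw_reply = model(cleaned)
    return run_pipeline(raw_reply, output_middlewares)
```

Keeping each middleware a plain function means a security check or a formatting step can be added, removed, or reordered without touching the model call itself.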

Context

The brain of your AI, this component provides a set of essential tools for controlling the flow of conversation. It’s like the GPS for your virtual assistant, guiding interactions towards meaningful outcomes.

Core Repository

This is the memory bank of your AI, storing session data and context for future reference. With swappable repositories, you have the flexibility to choose the storage solution that best suits your needs.
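One common way to get swappable storage is to code against a small interface and provide several implementations. The Python sketch below assumes a hypothetical `SessionRepository` interface with an in-memory and a JSON-file backend; none of these names come from LOFT, and the file backend assumes session ids are safe to use as file names.

```python
import json
from pathlib import Path
from typing import Dict, List, Protocol


class SessionRepository(Protocol):
    """Hypothetical storage interface that every backend must satisfy."""

    def load(self, session_id: str) -> List[str]: ...

    def save(self, session_id: str, messages: List[str]) -> None: ...


class InMemoryRepository:
    """Keeps sessions in a dict; data is lost when the process exits."""

    def __init__(self) -> None:
        self._data: Dict[str, List[str]] = {}

    def load(self, session_id: str) -> List[str]:
        return list(self._data.get(session_id, []))

    def save(self, session_id: str, messages: List[str]) -> None:
        self._data[session_id] = list(messages)


class JsonFileRepository:
    """Stores one JSON file per session (assumes ids are valid file names)."""

    def __init__(self, directory: Path) -> None:
        self._directory = directory
        self._directory.mkdir(parents=True, exist_ok=True)

    def _path(self, session_id: str) -> Path:
        return self._directory / f"{session_id}.json"

    def load(self, session_id: str) -> List[str]:
        path = self._path(session_id)
        if not path.exists():
            return []
        return json.loads(path.read_text(encoding="utf-8"))

    def save(self, session_id: str, messages: List[str]) -> None:
        self._path(session_id).write_text(json.dumps(messages), encoding="utf-8")


def append_message(repo: SessionRepository, session_id: str, message: str) -> List[str]:
    """Works with any backend because it only uses the shared interface."""
    messages = repo.load(session_id)
    messages.append(message)
    repo.save(session_id, messages)
    return messages
```

Since `append_message` depends only on `load` and `save`, switching from the in-memory backend to the file backend (or to a database-backed one) is a one-line change at the call site.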

LLM Manager

A unified orchestrator that enables seamless interaction with various Large Language Models. This allows us to select the best option for your specific use case, maximizing performance and accuracy.
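The sketch below shows, under stated assumptions, what a single entry point over several models can look like in Python. `LLMManager`, `register`, and `complete` are hypothetical names, not LOFT's API, and the two "models" are local stub functions rather than calls to any real provider.

```python
from typing import Callable, Dict

# A provider is assumed to be any function that maps a prompt to a completion.
Provider = Callable[[str], str]


class LLMManager:
    """Hypothetical registry that exposes many models behind one method."""

    def __init__(self) -> None:
        self._providers: Dict[str, Provider] = {}

    def register(self, name: str, provider: Provider) -> None:
        self._providers[name] = provider

    def complete(self, prompt: str, provider: str) -> str:
        try:
            backend = self._providers[provider]
        except KeyError:
            raise ValueError(f"unknown provider: {provider!r}") from None
        return backend(prompt)


def fake_fast_model(prompt: str) -> str:
    """Stub standing in for a cheaper model; truncates its input."""
    return f"[fast] {prompt[:20]}"


def fake_accurate_model(prompt: str) -> str:
    """Stub standing in for a more capable model."""
    return f"[accurate] {prompt}"


manager = LLMManager()
manager.register("fast", fake_fast_model)
manager.register("accurate", fake_accurate_model)
```

Calling code only ever sees `complete(prompt, provider)`, so choosing a different model for a given use case becomes a configuration decision rather than a code rewrite.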

Manifest Manager

This component defines your bot’s personality and behavior. Securely accessible by AI trainers, it makes it possible to continuously refine and optimize the bot’s conversational capabilities.
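One way to picture a manifest is as plain data that trainers can edit without touching application code. The Python sketch below is an illustration only: the `Manifest` fields (`name`, `tone`, `rules`) and the JSON layout are assumptions, not LOFT's actual manifest format.

```python
import json
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Manifest:
    """Hypothetical manifest: who the bot is and which rules it follows."""
    name: str
    tone: str
    rules: List[str]


def load_manifest(raw: str) -> Manifest:
    """Parse a manifest from JSON text; `rules` is optional."""
    data = json.loads(raw)
    return Manifest(
        name=data["name"],
        tone=data["tone"],
        rules=list(data.get("rules", [])),
    )


def build_system_prompt(manifest: Manifest) -> str:
    """Turn the manifest into the instruction text sent to the model."""
    lines = [f"You are {manifest.name}. Speak in a {manifest.tone} tone."]
    lines += [f"- {rule}" for rule in manifest.rules]
    return "\n".join(lines)
```

With this separation, refining the bot's behavior means editing the manifest data, for example adding a rule, and the prompt is rebuilt from it on the next request.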

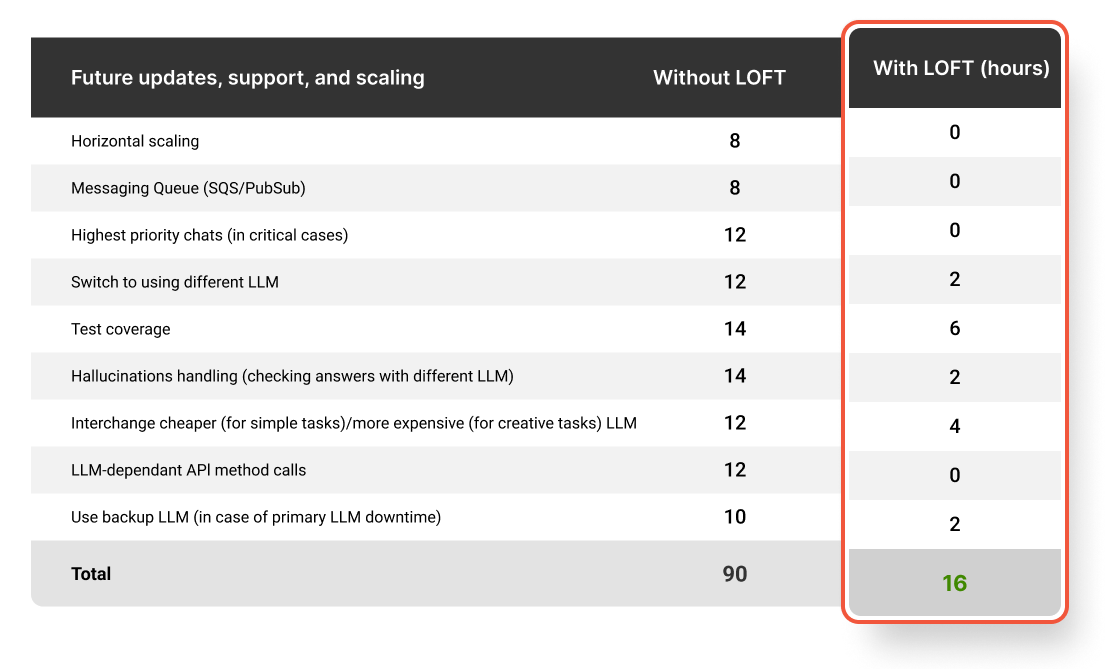

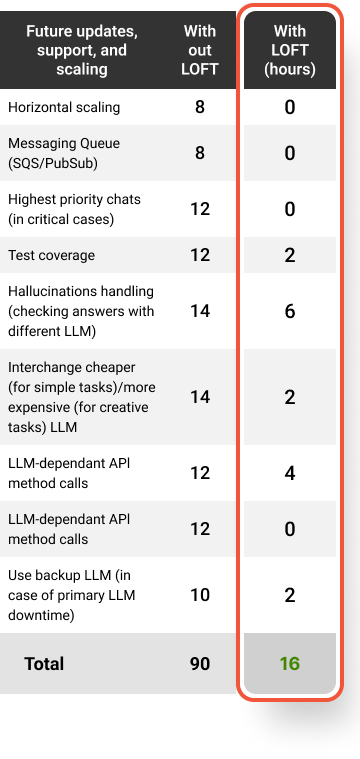

LOFT Helps Us Implement Custom Solutions and Scale Their Functionality Faster

How LOFT Empowers Our Engineers to Quickly Create AI Solutions from Scratch

Quality Assurance of LLM Responses

We deliver a consistently reliable experience with LOFT’s robust QA…

Unified API for Different LLMs

This aspect simplifies integration, allowing us to choose and incorpo…

Let’s Compare LOFT to Other LLM Frameworks

Share your vision with us, and we’ll bring our expertise and passion to make it a reality. Your next big success starts here!

Ready for growth?

John Colón

Olga Hrom

Director of Pre-Sales Strategy & Delivery