Did you know that 67.2% of businesses are prioritizing the adoption of Large Language Models and Generative AI this year? What could this mean for your organization’s future?

Large Language Models (LLMs) are deep learning algorithms that can recognize, extract, summarize, predict, and generate text based on knowledge gained during training on huge datasets. Adoption is already well underway: 58% of businesses have started to work with LLMs, and over the next three years, 45.9% of enterprises aim to prioritize scaling AI and ML applications to increase the business value of their data strategies. In the upcoming fiscal year, 56.8% anticipate a double-digit revenue increase from their AI/ML investments, and another 37% expect single-digit growth.

Are you prepared for the AI transformation in the business world? Explore the possibilities of LLMs for enterprise adoption and secure your organization’s competitive edge.


What is a Large Language Model?

According to Nvidia, an LLM “is a type of artificial intelligence (AI) system that is capable of generating human-like text based on the patterns and relationships it learns from vast amounts of data.” LLMs are trained on extensive text datasets, which can include books, Wikipedia, websites, social media, academic papers, and more.

Such datasets range from tens of millions to hundreds of billions of data points, and the volume of training data significantly affects a model’s accuracy and sophistication. LLMs also have a very large number of learnable parameters, often billions.

These parameters enable LLMs to excel at tasks such as content generation, summarization, and translation, and to capture language nuances, technical terminology, and writing styles. As a result, LLMs generally produce more precise and refined responses than smaller language models.

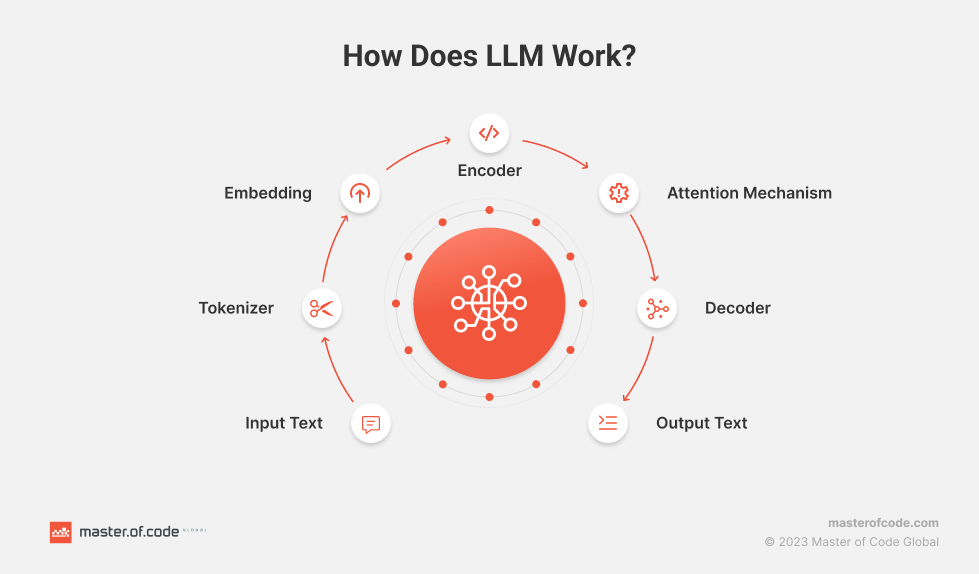

The Anatomy of an LLM

Large Language Models function through a complex and systematic process. The key components that enable their remarkable capabilities are:

- Input Text. Raw text data serves as the foundation for LLMs. It represents the initial information provided for processing.

- Tokenizer. The input text is tokenized, that is, broken down into smaller units such as words, subwords, or characters. Tokenization structures the text so the model can process it.

- Embedding. Tokens are converted into numerical vectors through embedding. They capture the semantic and syntactic meanings of the text. Embeddings transform textual data into a format understandable by the neural network.

- Encoder. The encoder processes the embedded tokens and extracts relevant information from the input sequence. Its role is crucial in understanding context and the relationships between words. Note that many generative LLMs, such as the GPT family, are decoder-only and do not use a separate encoder.

- Attention Mechanism. The attention mechanism lets the model focus on specific parts of the input text by assigning different levels of importance to different tokens. This improves the model’s ability to weigh the significance of each word and understand context.

- Decoder. Using the encoded information, the decoder generates the output sequence one token at a time, predicting each next token from the ones before it. These tokens are converted back into human-readable text, and the process keeps the response coherent and contextually relevant.

- Output Text. The final generated text is the result of the LLM’s intricate processing. It is based on the input and the model’s learned patterns and relationships.
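The following toy sketch in plain Python makes the tokenizer, embedding, and attention steps above more concrete. It is illustrative only and relies on several simplifying assumptions:

- It uses a whitespace tokenizer and a tiny hand-written vocabulary in place of a learned subword tokenizer.
- Its embeddings are random rather than learned.
- Self-attention takes Q = K = V directly from the embeddings, with no learned projections, multiple heads, or stacked layers.

All function names are hypothetical.

```python
import math
import random

def tokenize(text: str, vocab: dict[str, int]) -> list[int]:
    """Toy tokenizer: lowercase, split on whitespace, map words to ids (0 = unknown)."""
    return [vocab.get(word, 0) for word in text.lower().split()]

def build_embeddings(vocab_size: int, dim: int, seed: int = 0) -> list[list[float]]:
    """Random embedding table standing in for learned embeddings."""
    rng = random.Random(seed)
    return [[rng.uniform(-1.0, 1.0) for _ in range(dim)] for _ in range(vocab_size)]

def softmax(scores: list[float]) -> list[float]:
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(x: list[list[float]]) -> tuple[list[list[float]], list[list[float]]]:
    """Scaled dot-product self-attention with Q = K = V = x (no learned projections)."""
    d = len(x[0])
    weights, outputs = [], []
    for q in x:
        # Score this token against every token, scaled by sqrt(d).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in x]
        w = softmax(scores)
        weights.append(w)
        # The contextual vector is a weighted average of all value vectors.
        outputs.append([sum(w[j] * x[j][i] for j in range(len(x))) for i in range(d)])
    return outputs, weights

vocab = {"<unk>": 0, "the": 1, "model": 2, "reads": 3, "text": 4}
token_ids = tokenize("The model reads text", vocab)
embeddings = build_embeddings(len(vocab), dim=8)
x = [embeddings[t] for t in token_ids]
contextual, attn = self_attention(x)
print(token_ids)  # [1, 2, 3, 4]
```

Each row of `attn` holds one token’s attention weights over all tokens and sums to 1. In a real model, these weights come from learned query and key projections rather than raw embeddings.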

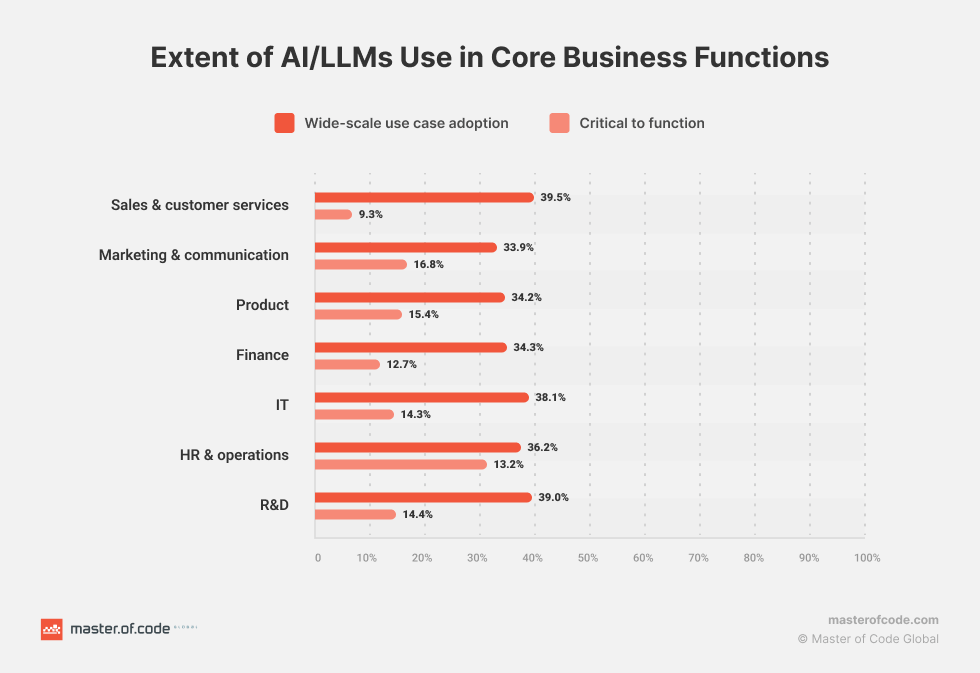

State of LLM Adoption in Enterprises

Enterprises are increasingly recognizing the transformative power of AI. According to The State of AI in the Enterprise Report, 64% of enterprises incorporate it into their business models. The motivations behind this adoption are multifaceted.

In terms of goals, 32% of enterprises aim to improve efficiency and 20% focus on enhancing customer experiences. More than 75% of enterprises report positive outcomes from using AI: 45% experienced moderate benefits, 23% substantial improvements, and 8% remarkable gains (76% in total). Despite this momentum, enterprises still grapple with significant challenges.

According to the Enterprise Generative AI Adoption Report, the top challenges hindering the adoption of Generative AI/LLMs are the need for customization and flexibility (63.5%) and data-related challenges (62.9%). Organizations also cite governance issues (60.2%), security and compliance concerns (56.4%), and performance and cost (53%). To address some of these challenges, 83.3% of companies plan to implement policies specific to Generative AI adoption.

Enterprises are also using Large Language Models for more than content generation. Information extraction, which draws insights from unstructured data, was chosen by 32.6%, while 15.2% preferred Q&A/Search, which relies on real-time responses.

Customization is another critical trend, as enterprises increasingly rely on tailored LLMs for precise outcomes. 32.4% of teams plan to customize their models through fine-tuning, and 27% through reinforcement learning with human feedback. This focus on customization also highlights the need for cautious deployment that balances innovation with ethical norms.

Benefits of Integrating Generative AI Solutions

- Improved Performance: Generative AI chatbots and virtual assistants can understand and respond to a wider range of language inputs. This can lead to faster recognition of customer intent, higher containment rates, and improved overall customer experiences.

- Hyper-personalized Conversational Commerce: LLMs can help enterprises create highly customized marketing messages and product recommendations based on individual customer profiles and preferences. This leads to higher engagement, better conversion rates, and improved CSAT.

- Enhanced Search Functionality: Generative AI solutions can improve the search experience on websites, knowledge bases, and apps. With natural language understanding (NLU) and LLMs, users can more easily describe what they are looking for. Because their intent is understood faster, search results are more likely to be accurate and relevant.

- Multilingual Conversational Commerce: Generative AI can expand global reach and open new market segments. NLU and LLMs allow additional languages to be trained and tuned quickly, making it easier to offer multilingual customer support and conversational commerce solutions.

- Advanced Analytics: Leveraging NLU and LLMs to analyze large volumes of unstructured data, such as customer interactions, to identify new intents, sentiment, trends, patterns, and potential areas of improvement throughout the customer journey.

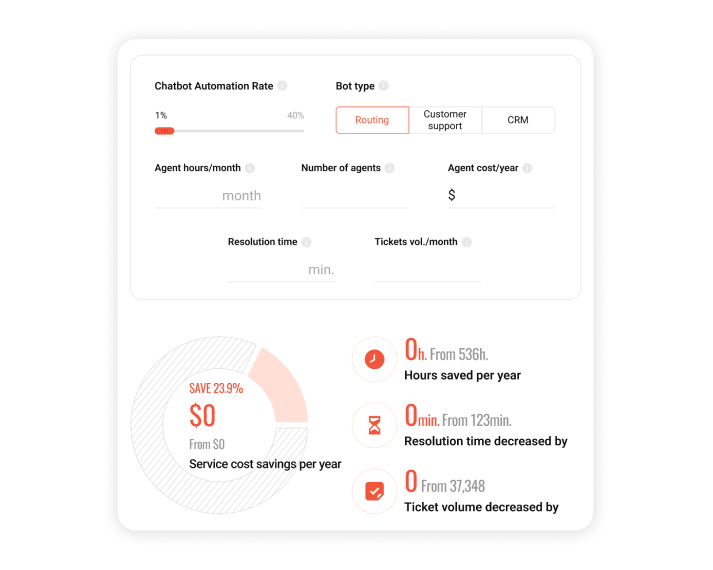

Thinking of incorporating Generative AI into your existing chatbot? Validate your idea with a Proof of Concept before launching. At Master of Code Global, we can seamlessly integrate Generative AI into your current chatbot, train it, and have it ready for you in just two weeks.

LLM Enterprise Use Cases

LLMs are transforming how enterprises manage content, engage with customers, and make decisions. They offer innovative solutions to complex challenges across different business functions.

Working with Content and Documentation:

- Writing or editing emails, blog posts, and creative stories;

- Extracting/summarizing key data;

- Providing fluent translations in multiple languages;

- Identifying and filtering out inappropriate/harmful online content.

Assisting Application Development:

- Generate specific code snippets;

- Identify errors and security vulnerabilities in existing code.

AI-Driven Chatbots and Conversational AI:

- Engage customers in natural language conversations, addressing queries, and offering product recommendations;

- Gauge customer feedback for personalized responses;

- Integrate domain-specialized Generative AI into your support stack to summarize customer interactions, group similar cases, and surface contextualized knowledge for agents, reducing customer escalations;

- Enhance containment and CSAT of existing solutions by allowing customers to more easily self-serve through the use of Generative AI.

Sentiment Analysis:

- Accurately evaluate the emotional tone, sentiment, and mood of textual data;

- Offer insights for brand management, enabling strategic decision-making.

Knowledge Management:

- Facilitate efficient document indexing and retrieval;

- Extract insights from unstructured data for informed decision-making.

Predictive Analytics and Fraud Detection:

- Analyze patterns within textual data, predicting trends and future outcomes;

- Detect fraudulent activities and patterns in textual information for prevention measures.

Sales & Marketing Activities:

- Perform in-depth analysis of customer reviews, social media mentions, and market trends;

- Anticipate customer preferences and behaviors, aiding targeted marketing strategies and product development;

- Streamline lead generation, optimizing sales efforts and increasing overall efficiency;

- Use data insights to identify upsell and cross-sell opportunities.


These applications signal a paradigm shift in how organizations work with data, and they are already shaping the future of enterprises.

Large Language Models Adoption Challenges

When adopting LLMs, enterprises encounter challenges such as:

- LLM Hallucinations. Generative AI output is not necessarily grounded in facts or reality, so it should be reviewed carefully depending on the use case. You can employ several tactics to help tame its wild side, including:

  - Limiting response length: Restricting the length of the generated response to reduce the chance of irrelevant or unrelated content.

  - Controlled input: Rather than offering users a free-form text box, suggesting several style options that act as guardrails. For example, asking the user whether the email they want to create should be 1. thankful, 2. remorseful, or 3. empathetic.

  - Adjusting temperature: Controlling the randomness of the output through the temperature parameter. Lower values make responses more focused and deterministic.

  - Using a moderation layer: Filtering out inappropriate, unsafe, or irrelevant content before it reaches the end user.

  - Implementing user feedback loops: Collecting feedback on what the model did right or wrong and using it to adjust prompts, settings, or training data so the solution performs better in the future.

  - Fine-tuning the model: Fine-tuning the LLM on a domain-specific dataset to improve its performance and reduce the likelihood of hallucinated answers.

- Data Privacy and Integration Challenges. Integrating LLMs into existing systems is challenging because of the vast amounts of data they process and generate. Enterprises need robust security measures, such as encryption and data masking, to safeguard sensitive information. Moreover, working with such volumes of data requires meticulous planning and execution.

- Financial and Skill Constraints. LLMs come with substantial costs, making them financially burdensome for many enterprises. In addition, a shortage of LLM expertise hampers effective implementation, so investment in training programs is essential to bridge this gap.

- Unintended Bias. Bias is present everywhere, and LLMs have been trained largely on biased data available on the internet, so their outputs can reflect it. Mitigating this risk is crucial to ensure fairness, safety, and inclusion. Possible strategies include:

  - Fine-tuning the model: Minimizing inherent biases by fine-tuning the LLM on a diverse and representative dataset.

  - Adding bias-detection tools: Identifying and flagging biased outputs with tools that can be rule-based systems, machine learning models, or a combination of both.

  - Implementing a moderation layer: Validating the LLM’s output with an external system, such as a separate NLP model, before sending it to the end user.

  - Adding a user feedback loop: Incorporating unbiased, external information from a source that is not strongly correlated with the model’s outputs.

  - Collaborating with diverse teams: Working with a diverse team of experts, including ethicists, social scientists, and domain experts, to gain insights into potential biases and develop strategies to mitigate them.

  - Making iterative improvements: Refining the solution over successive iterations until task requirements are met and the appropriate level of quality is attained.
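Two of the hallucination tactics above, limiting response length and adjusting temperature, can be illustrated with a toy sampling loop. This is a minimal sketch, not any provider’s API:

- `toy_next_logits` is a hypothetical stand-in for a model that scores the next token.
- The `max_tokens` and `temperature` arguments only mirror, in spirit, the length and temperature settings that LLM APIs commonly expose under provider-specific names.

Temperature divides the logits before the softmax, so values below 1 concentrate probability on the most likely tokens.

```python
import math
import random
from typing import Callable

def softmax_with_temperature(logits: list[float], temperature: float) -> list[float]:
    """Turn raw scores into probabilities; lower temperature sharpens the distribution."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [score / temperature for score in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def generate(
    next_logits: Callable[[list[str]], list[float]],
    vocab: list[str],
    max_tokens: int,
    temperature: float,
    seed: int = 0,
    stop: str = "<eos>",
) -> list[str]:
    """Sample one token at a time; stop at `stop` or after `max_tokens` tokens."""
    rng = random.Random(seed)
    output: list[str] = []
    while len(output) < max_tokens:  # hard cap on response length
        probs = softmax_with_temperature(next_logits(output), temperature)
        token = rng.choices(vocab, weights=probs, k=1)[0]
        if token == stop:
            break
        output.append(token)
    return output

# Hypothetical toy "model": ignores the history and always returns the same scores.
VOCAB = ["thanks", "for", "reaching", "out", "<eos>"]

def toy_next_logits(history: list[str]) -> list[float]:
    return [2.0, 1.0, 0.5, 0.3, 0.1]

for t in (0.2, 1.0, 2.0):
    top = max(softmax_with_temperature(toy_next_logits([]), t))
    print(f"temperature={t}: top-token probability {top:.3f}")

print(generate(toy_next_logits, VOCAB, max_tokens=5, temperature=0.7))
```

As the temperature rises, the top-token probability falls and sampling becomes more varied. The `max_tokens` cap bounds the response length regardless of what the model produces.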

Ethical considerations, robust security measures, investment in skills development, and diligent oversight are paramount for responsible AI use. Such an approach enables enterprises to realize the full potential of LLMs in their operations.
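The data-masking measure from the privacy challenge can be combined with the moderation-layer and rule-based bias-detection tactics into a thin guard around an LLM call. This minimal sketch does two things:

- It masks emails and phone numbers before the prompt leaves your system.
- It withholds responses that contain terms from a blocklist.

The regular expressions, the `BLOCKED_TERMS` list, the fallback message, and the `call_llm` callable are all hypothetical placeholders. A few regexes will both miss and over-match, so production use would likely need more robust PII detection and moderation.

```python
import re
from typing import Callable

# Illustrative patterns only; real PII detection needs far broader coverage.
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")
PHONE_RE = re.compile(r"\+?\d[\d\s().-]{7,}\d")

# Hypothetical policy list for the moderation layer.
BLOCKED_TERMS = ("guaranteed returns", "medical diagnosis")

FALLBACK = "Sorry, I can't help with that request. Let me connect you with an agent."

def mask_pii(text: str) -> str:
    """Replace emails and phone numbers with placeholders before calling the model."""
    text = EMAIL_RE.sub("[EMAIL]", text)
    return PHONE_RE.sub("[PHONE]", text)

def moderate(output: str) -> list[str]:
    """Return the blocked terms found in a model response (empty list = passes)."""
    lowered = output.lower()
    return [term for term in BLOCKED_TERMS if term in lowered]

def guarded_call(prompt: str, call_llm: Callable[[str], str]) -> str:
    """Mask the prompt, call the model, and withhold responses that fail moderation."""
    answer = call_llm(mask_pii(prompt))
    return FALLBACK if moderate(answer) else answer

# Usage with a stand-in "model" that simply echoes its prompt.
def echo(prompt: str) -> str:
    return f"You said: {prompt}"

print(guarded_call("Email me at jane.doe@example.com or call +1 555-123-4567", echo))
# You said: Email me at [EMAIL] or call [PHONE]
```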

Types of LLMs

LLMs can be classified by their openness, purpose, and specialization. Each category highlights different capabilities and enterprise use cases. Here are the most common types of Large Language Models.

Open-Source LLMs

Open-source LLMs are large language models whose code and model weights are publicly available to use, modify, and distribute, subject to their licenses. They are accessible to developers and researchers, encouraging collaboration and innovation.

- Examples: LLaMA 2, Alpaca, RedPajama, Falcon, and FLAN-T5.

- Benefits: free access and modifiability, community collaboration and support, foundations for diverse applications.

- Drawbacks: potential quality and performance inconsistencies, need for technical expertise for implementation and fine-tuning, and limited user support.

Closed-Source LLMs

Closed-source LLMs are proprietary models whose source code is not publicly available. They are developed and maintained by specific organizations, and access is often restricted or provided through licenses.

- Examples: Bard, GPT-4, Claude, Cohere, Gopher, LaMDA, and Jurassic.

- Benefits: rigorous quality control, consistent high-quality performance, dedicated user support, and high reliability.

- Drawbacks: licensing costs, restricted customization options, and closed ecosystem restricting flexibility.

General-Purpose LLMs

General-purpose LLMs handle a wide range of natural language processing tasks without extensive customization. They are versatile and can be applied to various applications.

- Examples: GPT-3, BERT, LaMDA, Wu Dao 2.0, and Bard.

- Benefits: versatility in NLP tasks, ease of use and minimal customization, broad availability of pre-trained models.

- Drawbacks: limited task-specific optimization, resource intensiveness for complex tasks, potential lack of precision in specialized domains.

Domain-Specific LLMs

Domain-specific LLMs are models trained or fine-tuned for particular fields or industries. This gives them high performance and accuracy in specialized tasks within those domains.

- Examples: BioBERT, BloombergGPT, StarCoder, Med-PaLM, and ClimateBERT.

- Benefits: high accuracy in specialized domains, efficiency in domain-specific tasks, reduced training data requirements.

- Drawbacks: limited to specific industries or topics, complex fine-tuning, lack of general applicability.

LLMs are categorized into diverse groups, tailored for specific functions and applications. When it comes to chatbot and digital assistant development, Master of Code Global can recommend models such as GPT-3.5-turbo, PaLM2, and RedPajama based on our R&D results.

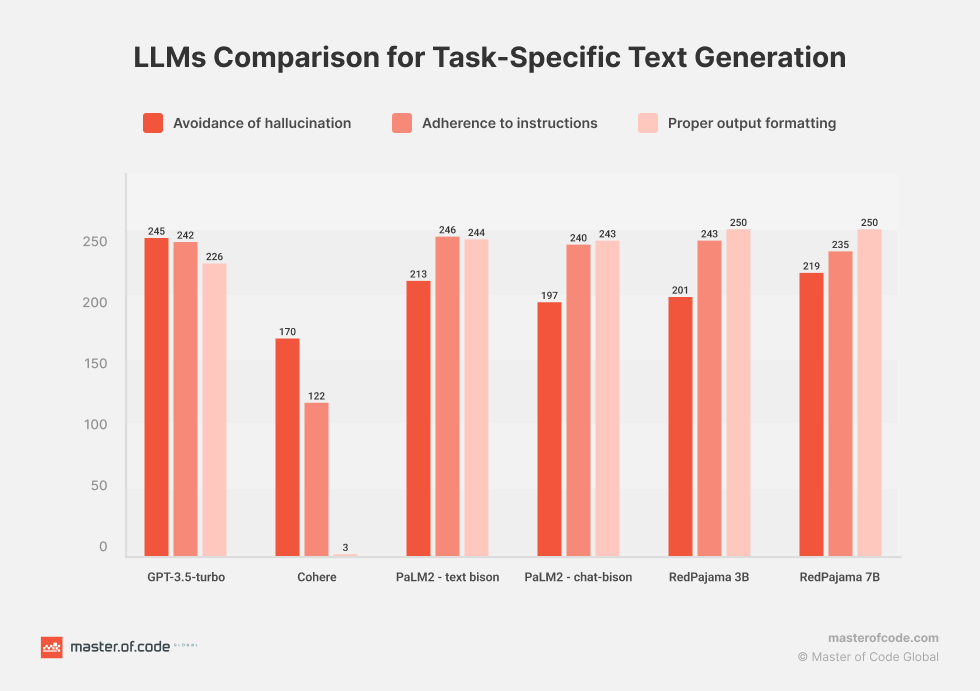

Comparative Evaluation of LLMs for Task-Specific Text Generation

In this in-depth R&D study by Master of Code Global, several leading language models were assessed, with a focus on their effectiveness in tasks commonly encountered in virtual assistants and chatbots. The evaluated models are GPT-3.5-turbo, Cohere, PaLM2 – text-bison, PaLM2 – chat-bison, and fine-tuned versions of RedPajama (3B and 7B).

GPT-3.5-turbo

GPT-3.5-turbo is an AI model developed by OpenAI and one of the most capable models in the GPT-3.5 family. It generates high-quality natural language text and can perform a variety of tasks such as text generation, question answering, translation, and code generation. Developers can fine-tune it to create custom models that are better suited to their specific needs and can be run at scale.
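For fine-tuning chat models such as GPT-3.5-turbo, OpenAI’s documented training format is a JSONL file. Each line holds one conversation as a `messages` list of `system`, `user`, and `assistant` turns.

The sketch below only prepares the file contents with the standard library; it does not call any OpenAI API. The system prompt and example conversations are hypothetical. Check OpenAI’s current fine-tuning documentation for the exact requirements before uploading.

```python
import json

# Hypothetical system prompt for a telecom support assistant.
SYSTEM_PROMPT = "You are a concise, friendly support assistant for a telecom provider."

def to_chat_example(user_text: str, assistant_text: str, system_text: str = SYSTEM_PROMPT) -> dict:
    """Wrap one prompt-completion pair in the chat `messages` structure."""
    return {
        "messages": [
            {"role": "system", "content": system_text},
            {"role": "user", "content": user_text},
            {"role": "assistant", "content": assistant_text},
        ]
    }

def to_jsonl(pairs: list[tuple[str, str]]) -> str:
    """Serialize examples as JSON Lines: one JSON object per line."""
    return "\n".join(json.dumps(to_chat_example(u, a)) for u, a in pairs) + "\n"

# Hypothetical training pairs; a real dataset would contain many more examples.
pairs = [
    ("Can I trade in my current phone?",
     "You may be eligible. Could you tell me the make, model, and condition of your phone?"),
    ("Does the new watch work with my phone?",
     "I can check that for you. Which phone model are you using?"),
]

jsonl = to_jsonl(pairs)
print(jsonl)
# To save for upload, e.g.: pathlib.Path("train.jsonl").write_text(jsonl, encoding="utf-8")
```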

Cohere

Cohere is a natural language processing platform that uses advanced deep learning models. It provides tools and APIs for analyzing text, discovering semantic relationships, and building applications based on text data. We have used Cohere Generation in our R&D. It’s suitable for interactive autocomplete, augmenting human writing processes, and other text-to-text tasks.

PaLM2 – text-bison

Google’s PaLM2 is a deep learning model specialized in processing text data. Its “text-bison” version is designed to handle a variety of text inputs with high performance and output quality. It can be used for classification, sentiment analysis, entity extraction, summarization, rewriting text in different styles, generating ad copy, and conceptual ideation.

PaLM2 – chat-bison

PaLM2 – chat-bison is a variant of the PaLM2 model aimed at handling conversations and chats. It is designed around the specifics of text interactions between users, which allows it to better understand context and generate more suitable responses. It also supports natural multi-turn conversation.

Fine-tuned RedPajama (3B and 7B versions)

RedPajama is a collaborative project between Ontocord.ai, ETH DS3Lab, Stanford CRFM, and Hazy Research to develop reproducible open-source LLMs. Two base model versions were used in the experiment: one with 3 billion and one with 7 billion parameters. Overall, RedPajama is at the forefront of open-source initiatives, enabling broader access to powerful LLMs for research and customization.

LLMs Evaluation R&D Results

This study aims to assess the efficacy of several language models in addressing specific tasks. The selected models represent a mix of established platforms and emerging research initiatives. The tasks chosen for evaluation encompass various domains and complexities.

The study focused on tasks such as informing customers about device upgrades, TV streaming promotions, trade-in eligibility, device compatibility, and extracting intents from customer inputs. Key evaluation criteria included adherence to instructions, avoidance of LLM hallucination, and proper output formatting.

All models except RedPajama were tested zero-shot on a task-specific dataset. Because the base RedPajama models could not match the other models in this setting, a training dataset of 100 prompt-completion pairs per task was created to fine-tune them. The evaluation test set for all models included 250 prompts, with 50 prompts assigned to each task.
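The setup described above can be sketched as a small evaluation harness:

- Five tasks.
- 100 prompt-completion pairs per task for fine-tuning RedPajama, or 500 in total.
- 50 test prompts per task, or 250 in total.
- Every response judged on the three criteria.

This is a hypothetical illustration, not Master of Code Global’s actual tooling. The task identifiers, the JSON output rule for intent extraction, and the result fields are assumptions. In practice, the instruction-following and hallucination verdicts would come from human or model-based reviewers.

```python
import json
from collections import Counter

# Hypothetical identifiers for the five tasks described in the study.
TASKS = [
    "device_upgrade",
    "tv_streaming_promotion",
    "trade_in_eligibility",
    "device_compatibility",
    "intent_extraction",
]
TRAIN_PAIRS_PER_TASK = 100  # fine-tuning pairs per task (RedPajama only)
TEST_PROMPTS_PER_TASK = 50  # evaluation prompts per task (all models)
CRITERIA = ("follows_instructions", "no_hallucination", "correct_format")

def dataset_sizes(tasks: list[str]) -> tuple[int, int]:
    """Return (training pairs, test prompts) across all tasks."""
    return len(tasks) * TRAIN_PAIRS_PER_TASK, len(tasks) * TEST_PROMPTS_PER_TASK

def is_valid_intent_output(text: str) -> bool:
    """Hypothetical format rule: a JSON object with a string 'intent' field."""
    try:
        data = json.loads(text)
    except json.JSONDecodeError:
        return False
    return isinstance(data, dict) and isinstance(data.get("intent"), str)

def pass_rates(results: list[dict]) -> dict[str, float]:
    """Share of responses per task that satisfy every criterion."""
    totals: Counter = Counter()
    passed: Counter = Counter()
    for r in results:
        totals[r["task"]] += 1
        if all(r[c] for c in CRITERIA):
            passed[r["task"]] += 1
    return {task: passed[task] / totals[task] for task in totals}

print(dataset_sizes(TASKS))  # (500, 250)
sample = [
    {"task": "intent_extraction", "follows_instructions": True, "no_hallucination": True,
     "correct_format": is_valid_intent_output('{"intent": "upgrade_device"}')},
    {"task": "intent_extraction", "follows_instructions": True, "no_hallucination": True,
     "correct_format": is_valid_intent_output("upgrade_device")},
]
print(pass_rates(sample))  # {'intent_extraction': 0.5}
```

Counting a response as passing only when all three criteria hold is one possible scoring choice. Reporting each criterion separately would show where a model falls short.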

The findings revealed nuanced differences in the performance of these models. Particularly noteworthy was the consistent and robust performance of the fine-tuned RedPajama models. This makes them highly promising options for task-specific text generation in virtual assistants and chatbots.

Wrapping Up

LLMs allow enterprises to streamline operations, enhance customer experiences, and gain invaluable insights from textual data. One of the most compelling applications of LLMs is Generative AI-powered chatbots. These chatbots offer businesses the opportunity to engage customers in natural, human-like conversations, address queries, and provide tailored product recommendations.

At Master of Code Global, we’ve successfully implemented Generative AI-powered chatbots for businesses, including the BloomsyBox eCommerce Chatbot and a Generative AI Slack Chatbot. These solutions drove user engagement and automated the knowledge base for employees, respectively.

Beyond AI-powered chatbots, our expertise as an LLM development company extends to a comprehensive suite of Generative AI development services. We understand that every business is unique, and their requirements vary. That’s why we offer customized AI solutions that cater to specific industry needs.

With us, you’re not just adopting a technology; you get a strategic tool to boost your customer interactions and drive business growth. Join us on this journey to stay ahead in innovation and customer satisfaction.

Don’t miss out on the opportunity to see how Generative AI chatbots can revolutionize your customer support and boost your company’s efficiency.