When OpenAI launched ChatGPT, it kicked off an AI boom unlike anything seen before. Overnight, large language models became part of everyday life: powering personal projects, enterprise tools, and even customer interactions. The momentum was unstoppable, and for good reason: these systems promised speed, insight, and creativity on demand.

But as with any powerful technology, there’s a catch. In this case, it’s what experts call LLM hallucinations – those moments when a model confidently generates information that isn’t accurate or even real.

Does that mean businesses should step back from using these systems? Not at all. It means they should use them more wisely and responsibly.

In this article, we’ll unpack what causes LLMs to hallucinate and in what forms, as well as how to keep them under control. Drawing on insights from our AI engineers, conversation designers, and developers, we’ll share practical ways to reduce risks, maintain trust, and ensure maximum accuracy.

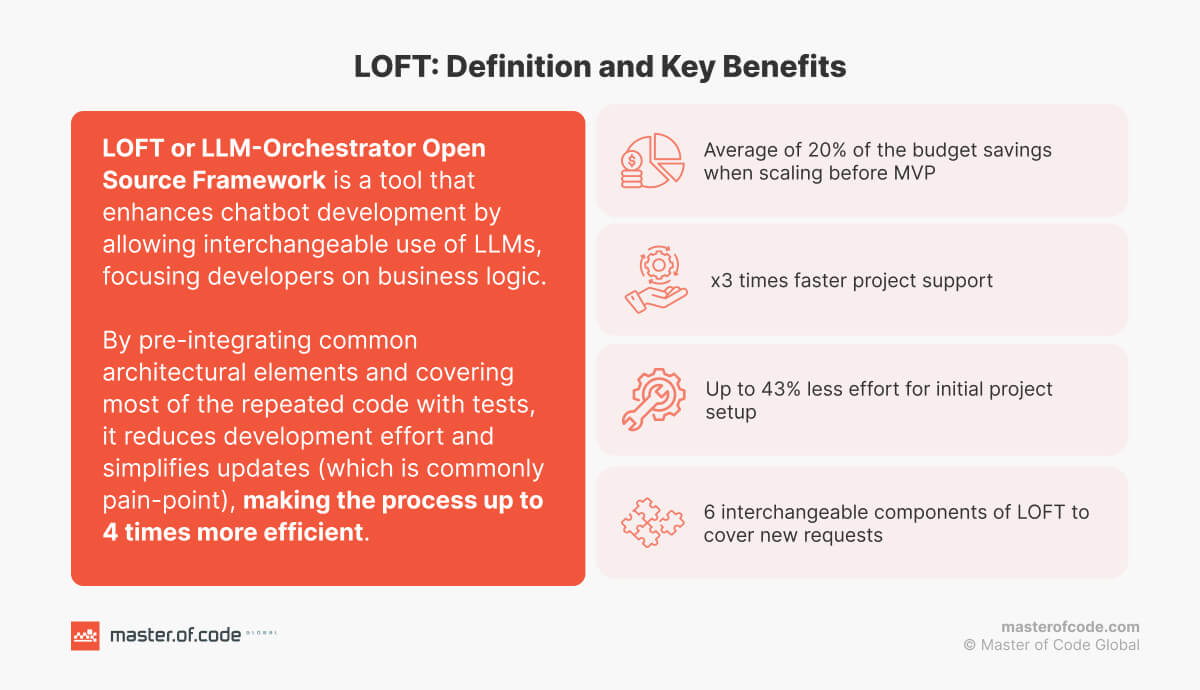

Finally, we’ll show how our proprietary LOFT framework ensures our custom-built solutions remain consistent and reliable in real-world scenarios. Let’s jump straight in.

Understanding Large Language Model Hallucinations: Definition and Implications

First, let’s make sure we’re clear on what this phenomenon is.

LLM hallucinations describe moments when an algorithm generates fluent, confident responses that don’t align with factual or verifiable information. Researchers often define it as the production of “plausible yet nonfactual content,” or more simply, text that sounds right but isn’t true.

A helpful analogy is to imagine a student under pressure during an exam. When they don’t know the answer, they might write something that fits the question’s tone or structure – even if it’s wrong. LLMs behave similarly. Instead of admitting uncertainty, they predict what should come next based on patterns in their training data. The output reads naturally, yet it may lack any factual basis.

Hallucinations can appear in several ways:

- Invented facts or names: the model cites nonexistent research papers, case law, or sources.

- Logical inconsistencies: the model contradicts itself within the same response.

- Contextual drift: in long outputs, earlier assumptions snowball into larger factual errors.

- Irrelevant but confident answers: when unsure, the model fills gaps with something that merely sounds plausible.

While their causes vary, the key idea is that hallucination isn’t a malfunction. It’s a byproduct of how these systems work: they’re trained to predict likely text, not verify truth. Recognizing the limitations of LLMs helps teams manage risk and design solutions where accuracy is monitored and supported, not assumed.

Can LLM Hallucinations Be Completely Eliminated?

Now that we know what hallucinations are and why they happen, it’s tempting to ask the obvious question: can we get rid of them entirely?

According to Olena Teodorova, AI Engineer at Master of Code Global and expert in LLM training services, the answer is no. They cannot be completely eliminated in current large language models. She explains why:

- LLMs are trained to predict the next most likely token based on patterns in training data, not to verify truth. This core design makes hallucinations an inherent feature, not just a bug that can be patched out.

- Models have fixed knowledge cutoffs and can’t distinguish between what they truly “know” versus what seems plausible based on statistical patterns. They lack true understanding or access to ground truth.

- The same capabilities that make LLMs useful for creative tasks, brainstorming, and flexible problem-solving are what enable errors. Constraining one affects the other.

Olena notes that while total elimination isn’t possible, you can dramatically reduce LLM hallucination rates to acceptable levels for many applications:

- Retrieval-augmented generation (RAG) systems can decrease hallucinations by 60–80% by grounding responses in verified documents.

- Multi-agent verification catches many errors through redundancy and cross-checking.

- Strong prompting techniques significantly improve factual accuracy.

We’ll come back to these methods later when we talk about how to manage the problem in practice. For now, let’s explore the taxonomy — the various forms they can take.

Types of Hallucinations in LLMs: Understanding the Spectrum

Not all cases are the same. Some stem from how a model interprets data, others from how it reasons or interacts with user prompts. Recognizing different hallucination scenarios helps organizations choose the right mitigation methods — whether that’s better data grounding, prompt design, or human oversight.

Below is a simplified taxonomy.

1. Knowledge-Based Hallucinations in LLM

These errors occur when a model misrepresents or invents information. They often arise because models predict what sounds statistically likely rather than verifying against a factual source.

- Intrinsic — contradicting the source or user input. For example, when summarizing a business report, it might misstate revenue figures or product names.

- Extrinsic — adding details not found in the source. For Generative AI in marketing, this could mean inventing customer statistics or testimonials.

- Factuality hallucinations in LLMs — producing content that is verifiably false, like citing non-existent research or listing imaginary product features.

- Amalgamated — blending unrelated facts into one. Think of it as merging two clients’ case studies into a single, inaccurate story.

- Faithfulness hallucinations in large language models — distorting or misrepresenting information from its source, even if it sounds accurate. For example, a financial summary might exaggerate profits or change timeframes while staying grammatically correct.

2. Reasoning-Based Hallucinations in LLM

This category involves logical or interpretive breakdowns rather than simple factual mistakes. These issues usually appear when prompts are ambiguous or when the model overgeneralizes from a limited context.

- Logical problems. The model’s response is coherent on the surface but internally inconsistent. For instance, an AI assistant might say, “the meeting is scheduled for tomorrow, on Wednesday,” when Wednesday is three days away.

- Contextual inconsistencies. The output contradicts earlier context, such as misquoting user preferences in a customer support exchange.

- Instruction deviations. The model fails to follow explicit directions. For example, translating text into the wrong language or generating a summary instead of a list.

3. Task-Based Hallucinations in LLM

Some hallucinations depend on the domain or task and reflect the model’s struggle to maintain accuracy in specialized or multi-step work.

- In customer support, the model might invent refund policies or warranty terms.

- In software development, it may produce syntactically correct but non-functional code.

- In content generation, it could distort data when producing summaries or reports.

- In multimodal systems, such as those handling both text and images, hallucinations can include mismatched descriptions. For example, labeling a photo of a car as a motorcycle.

4. User-Perceived Hallucinations in LLM

From a user perspective, the technical cause matters less than the visible outcome. For businesses, these are the hallucinations that surface most directly in LLM solutions, affecting customer trust, brand credibility, and compliance. Here are several common patterns:

- Factual incorrectness – clearly wrong statements or data.

- Fabricated information – made-up names, dates, or references.

- Nonsensical or irrelevant output – text that’s grammatically fine but meaningless or off-topic.

- Persona inconsistency – the AI abruptly changes tone or role mid-conversation.

- Unwanted or harmful generation – content that’s offensive, biased, or ethically problematic.

Key Causes of Hallucinations in LLMs

Hallucinations stem from multiple layers of the AI lifecycle, from how data is collected and used in training to how outputs are generated at inference. They are not the result of a single malfunction but the outcome of small inaccuracies accumulating across data, model architecture, and user interaction.

1. Prompting- and Model-Induced Causes

Researchers describe two foundational sources:

- Prompting-induced hallucination occurs when the input itself is unclear, incomplete, or contradictory. If a user gives an ambiguous prompt (much like a vague client brief), the system may fill in gaps with assumptions that sound confident but are unfounded.

- Model-internal hallucination comes from the LLM’s own design and training process. Because the models predict the next most likely token rather than verifying facts, they sometimes prioritize fluent phrasing over factual accuracy. This trade-off between readability and truth lies at the core of Generative AI.

2. Data and Knowledge Gaps

Training data forms the foundation of any system’s “understanding” of the world. When that foundation is flawed, errors propagate.

- Low-quality or biased information leads to repeating misinformation or reflecting narrow perspectives.

- Contradictory or outdated sources create confusion about what’s true, much like an employee referencing obsolete manuals.

- Limited dataset diversity causes the model to generalize poorly, increasing the likelihood of hallucinations on rare or specialized topics.

3. Representation and Attention Failures

Even when the data is accurate, the algorithm can mismanage it.

- Knowledge overshadowing. The LLM over-focuses on certain parts of the prompt, ignoring key details.

- Contextual misalignment. It fails to stay consistent with user input, producing content that feels related but misses the point.

- Attention failure. Higher layers of the network may retrieve information incorrectly, similar to a worker recalling parts of a meeting but forgetting the exact instructions.

4. Training and Alignment Challenges

LLMs learn through massive training cycles that balance prediction accuracy with human feedback. During this process, over-optimization can skew the model’s priorities.

- Misaligned fine-tuning. Training too heavily for politeness or fluency can suppress factual precision.

- Reinforcement learning trade-offs. Optimizing for “helpfulness” may reward confident tone over truth.

- Incomplete alignment data. If human feedback lacks examples of verification, the system learns surface patterns instead of reliability.

5. Inference and Decoding Variability

Once trained, an LLM constructs responses by sampling words based on probability. That randomness introduces another layer of uncertainty.

- Stochastic decoding. The algorithm adds randomness to avoid repetitive or robotic answers. When tuned for creativity, it may “guess” more freely, producing confident but inaccurate statements.

- Softmax bottleneck. To choose the next word, the LLM compresses many possible options into a smaller range of probabilities. In doing so, it can blur fine distinctions between accurate and misleading choices.

- Semantic entropy. When the model is uncertain, it fills gaps with plausible-sounding text. The longer the uncertainty continues, the more the output drifts away from the truth.
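
To make the effect of stochastic decoding more concrete, here is a minimal sketch of temperature-scaled softmax over a toy set of next-token scores. The token names and score values are invented for illustration; real models work over vocabularies of many thousands of tokens and their decoding pipelines include more steps than this.

```python
import math

def softmax_with_temperature(logits, temperature=1.0):
    """Turn raw scores into probabilities; a higher temperature flattens the distribution."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [score / temperature for score in logits.values()]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return {token: e / total for token, e in zip(logits, exps)}

# Hypothetical scores for the token that follows "The capital of Australia is"
toy_logits = {"Canberra": 4.0, "Sydney": 2.5, "Melbourne": 1.0}

focused = softmax_with_temperature(toy_logits, temperature=0.5)
creative = softmax_with_temperature(toy_logits, temperature=2.0)
```

With these made-up scores, the chance of sampling a wrong city rises from about 5% at temperature 0.5 to about 41% at temperature 2.0, which is the "guessing more freely" effect described above.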

6. Prompt Ambiguity and Human Interaction

Ambiguous or poorly detailed prompts remain one of the most common triggers of hallucination. A request such as “Summarize this report” without specifying which section or data source can push the model to infer — and potentially invent — details. In business terms, it’s the equivalent of assigning a task without a clear brief: the output may look polished, but it won’t meet the original intent.

Exploitation through jailbreak prompts is a related issue. These occur when users intentionally craft inputs that bypass safeguards or force the AI into generating unverified content. While not always malicious, such inputs expose weaknesses in control layers and amplify the risk of hallucinated or unsafe outputs.

7. Zero-Shot and Few-Shot Learning Limitations

LLMs can answer questions or complete tasks without explicit examples. However, this flexibility can also produce hallucinations. When faced with topics or instructions outside their training scope, models rely on pattern-based reasoning instead of verified data, leading to errors. It’s similar to assigning an employee to write about a subject they’ve only overheard in passing: the response might sound informed, but it rests on guesswork.

8. Tokenization and Context-Length Effects

Language models process information in tokens — small chunks of text. When the context becomes too long or exceeds limits, earlier data may fade, leading to contradictions or forgotten details. This “context loss” is particularly visible in customer support or document summarization, where long conversations or reports must be condensed into a short answer.
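
A small sketch can show how context loss happens. It assumes the simplest possible strategy, keeping the most recent messages that fit a token budget, and counts whitespace-separated words as a stand-in for a real tokenizer. The function name and the sample conversation are hypothetical.

```python
def fit_to_context(messages, max_tokens, count_tokens=lambda text: len(text.split())):
    """Keep the most recent messages that fit in the budget; older ones are dropped."""
    kept, used = [], 0
    for message in reversed(messages):
        cost = count_tokens(message)
        if used + cost > max_tokens:
            break  # everything older than this point is lost
        kept.append(message)
        used += cost
    return list(reversed(kept))

conversation = [
    "Customer: my order number is 48213",
    "Agent: thanks, what seems to be the problem",
    "Customer: the charger arrived damaged and I would like a replacement",
]
visible = fit_to_context(conversation, max_tokens=20)
```

Here the first message no longer fits, so the order number never reaches the model. If the model is later asked for it, it can only guess, which is one way a forgotten detail turns into a fabricated one.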

LLM Hallucinations in Real-World Applications

Recent evaluations show that LLMs have become far more reliable than they were just a few years ago. The average rate of hallucinations across major models fell from nearly 38% in 2021 to about 8.2% in 2026, with the best systems now reaching rates as low as 0.7%. Benchmark data from the HHEM 2026 leaderboard shows leading models like GPT-4o, Gemini 2.0, and THUDM GLM-4 keeping hallucination rates between 1.3% and 1.9%, while even lower-tier systems rarely exceed 3% on that benchmark.

Still, the problem hasn’t disappeared. Studies from 2026 report that false or fabricated information appears in 5–20% of complex reasoning and summarization tasks, and users encounter errors in about 1.75% of real-world AI interactions. These numbers show that hallucination remains a recurring, measurable issue, especially when accuracy or context matters most.

Let’s look at how this plays out across different industries.

Legal

- In 2024, Vancouver lawyer Chong Ke used ChatGPT to draft legal arguments that cited two fabricated precedents in a family-law case. The court deemed it an “abuse of process,” and the Law Society of British Columbia opened an investigation, emphasizing that lawyers must verify any AI-assisted material.

- The privacy group NOYB filed a GDPR complaint after ChatGPT incorrectly claimed that a Norwegian man had killed two of his children and was serving a 21-year sentence. The model mixed factual personal data with invented crimes, raising concerns about AI-driven defamation.

IT Services

- In April 2025, Cursor’s AI assistant told users they were restricted to “one device per subscription,” a policy that never existed. The false claim led to user cancellations and refunds before the company admitted the message was a hallucination.

- Google’s search summaries generated by “AI Overviews” produced fabricated advice, including adding glue to pizza sauce to prevent cheese from sliding, and cited Reddit posts and nonexistent sources as facts. The incident fueled criticism over unreliable AI content in search results.

Hospitality

- Air Canada’s chatbot told passenger Jake Moffatt he could claim a bereavement refund after purchasing a full-fare ticket. When the airline denied the request, a tribunal ruled that Air Canada was responsible for its system’s statements and ordered compensation.

- Stefanina’s Pizzeria reported customers arriving with non-existent discounts and promotions. The fabricated offers caused financial losses and led industry groups to call for stricter verification of AI-based content.

Healthcare

- A 60-year-old man followed ChatGPT’s suggestion to replace table salt with sodium bromide, developing confusion and neurological symptoms from poisoning. Doctors linked the case to incorrect AI health guidance, emphasizing risks of unverified medical advice from Generative AI in healthcare.

Automotive

- A Chevy dealership’s ChatGPT-powered chatbot was manipulated into offering vehicles for one dollar and confirming false deals. The incident spread online, and the dealership temporarily disabled the system to review its safeguards.

Real Estate

- In Utah, a homeowner received bot approval for a $3,000 warranty claim that the company later denied as an “AI error.” Consumer advocates argued that firms remain legally bound by chatbot promises under existing contract law. Imagine the consequences if this had happened with Generative AI in banking.

Real-World Implications of Hallucinations in Large Language Models

Hallucinations can cause tangible harm when models are deployed in business or public settings. They affect not only how systems perform but also how people perceive, trust, and rely on them, as well as the overall security of LLM applications.

Discriminating and Toxic Content

Large language models inherit patterns from their training data, including human biases. When these biases surface in the text, they can shape unfair or offensive narratives. For example, an AI screening tool in recruitment might consistently favor male-coded terms when describing leadership traits or produce unequal language in candidate evaluations.

Recent studies show that 15% of AI-generated text reinforces gender bias, while 12% reflects racial stereotypes. At a broader level, 46% of users report frequently encountering biased or misleading content.

For businesses, this means that even subtle bias can undermine diversity goals, expose organizations to compliance issues, and damage brand integrity.

Privacy Issues

These risks emerge when a model fabricates or exposes personal details that seem credible. In healthcare, an AI assistant might generate a summary containing a patient’s name or diagnosis that was never provided, blending imagination with plausible data.

Around 59% of users believe AI hallucinations pose privacy and security risks, and almost half associate them with growing social inequality. Such incidents are particularly serious in regulated industries, where fabricated details can breach confidentiality or violate data protection standards.

Misinformation and Disinformation

Hallucinations often appear as confidently stated falsehoods. Because LLMs write text that sounds convincing, users may accept errors without verification. In publishing or media, for example, an AI content assistant might invent sources or misattribute quotes in a news brief. Once published, these errors can spread quickly and distort public understanding.

Surveys reveal that 72% of people trust AI to provide reliable information, yet 75% admit they’ve been misled by it at least once. Moreover, 77% of users have been deceived by LLM hallucinations, and 96% have encountered AI content that made them question its credibility.

In industries that depend on factual precision, like journalism or finance, even isolated errors can have financial or reputational costs.

Trust, Transparency, and Governance in AI

The most lasting consequence of hallucination is the erosion of trust. Users who repeatedly encounter inaccurate or inconsistent responses often disengage from AI tools altogether. In customer service, for instance, a chatbot that invents refund policies or misquotes warranty terms can instantly reduce satisfaction and loyalty.

Data shows that 93% of users believe hallucinations can cause real harm, and nearly one-third fear they could lead to large-scale misinformation or “brainwashing” effects.

As a result, businesses are investing in governance frameworks, audit systems, and transparency measures to ensure accountability. Managing ethical concerns of LLM hallucinations has become a core part of AI reliability and user experience.

Detecting Hallucinations in LLMs

Spotting errors is one of the biggest challenges in building reliable language-model applications. Unlike simple coding issues, hallucinations often sound completely natural — making them hard to catch even for experts. To measure, understand, and manage these errors, researchers and engineers use a mix of standardized benchmarks, automated checks, and observability tools that track performance.

Overview of Detection Approaches

There’s no single test that can reveal every hallucination. Instead, detection relies on several complementary methods:

- Reference-based evaluation compares model outputs to verified sources.

- Reference-free evaluation checks internal consistency when no single “correct” answer exists.

- Task-specific evaluation focuses on error-prone areas like summarization or Q&A.

- Observability systems monitor model behavior continuously in production.
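
As a rough illustration of a reference-free check, the sketch below asks the same question several times and measures how much the answers disagree. It is a simplification: answers are grouped by normalized text, whereas research methods group them by meaning, and the sample answers are invented.

```python
import math
from collections import Counter

def answer_entropy(samples):
    """Shannon entropy (in bits) over distinct normalized answers; 0 means full agreement."""
    counts = Counter(sample.strip().lower() for sample in samples)
    total = sum(counts.values())
    return sum(-(c / total) * math.log2(c / total) for c in counts.values())

# Hypothetical answers from repeated runs of the same prompt
stable = ["Paris", "paris", "Paris ", "Paris"]
unstable = ["1947", "1952", "1947", "1961"]

stable_score = answer_entropy(stable)      # all runs agree
unstable_score = answer_entropy(unstable)  # runs disagree
```

The first set scores 0 bits and the second 1.5 bits. A team could route high-scoring answers to human review, with the cut-off chosen from its own data.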

Frameworks and Tools for Hallucination Detection

These instruments can detect factual inconsistencies, surface low-confidence statements, and provide transparency into how AI systems behave. This is essential for keeping LLM outputs accurate, traceable, and trustworthy.

- TruthfulQA tests whether models can separate truth from convincing falsehoods, a useful measure for understanding factual reliability.

- HalluLens classifies hallucinations in large language models into two types, intrinsic and extrinsic, using datasets such as PreciseWikiQA and NonExistentEntities.

- FActScore breaks an output into individual factual claims and checks each against reliable databases or reference materials to quantify factual precision.

- HaluEval and HaluEval 2.0 provide large-scale benchmarks for factual accuracy in summarization, dialogue, and question-answering tasks.

- QuestEval and Q² use automated question generation and answering to verify that a model’s statements are logically consistent with each other.

- Named Entity Recognition (NER) and Natural Language Inference (NLI) techniques identify factual contradictions or inconsistencies within a single response; for example, conflicting dates or company names.

- ROUGE, BLEU, and BERTScore remain traditional metrics for summarization accuracy, measuring how closely an AI-written summary matches its source text.

- TruLens helps developers evaluate and monitor quality, tracking hallucination frequency and groundedness during live deployments.

- Weights & Biases supports large-scale observability, providing dashboards for tracking model drift, factuality, and overall response reliability over time.

Even with these advances, no system is perfect. Benchmarks can miss subtle logical contradictions, while automated tools may overestimate factual errors in creative outputs. The best results come from combining strategies: quantitative methods for testing, monitoring instruments for live feedback, and human review for context.
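
The claim-level idea behind tools such as FActScore can be sketched in a few lines. This is not the FActScore implementation: it assumes the output has already been split into atomic claims and uses exact matching against a small trusted set, where real pipelines rely on retrieval and a model-based judge. The company "Acme" and its facts are invented.

```python
def factual_precision(claims, knowledge_base):
    """Share of atomic claims that appear in a trusted reference set."""
    if not claims:
        return 0.0
    supported = [claim for claim in claims if claim in knowledge_base]
    return len(supported) / len(claims)

# Hypothetical reference facts and claims extracted from a model's answer
knowledge_base = {
    "Acme was founded in 2010",
    "Acme is headquartered in Toronto",
}
claims = [
    "Acme was founded in 2010",
    "Acme is headquartered in Toronto",
    "Acme has 5,000 employees",  # not in the reference set
]
score = factual_precision(claims, knowledge_base)
```

Two of the three claims are supported, so the score is two thirds. Scoring per claim rather than per answer shows which statement failed, not just that something did.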

LLM Hallucination Mitigation Techniques from an AI Engineer

If you can’t eliminate hallucinations entirely, architect systems that contain them: add guardrails, deploy critic agents, demand step-by-step reasoning, and separate retrieval from generation so the model’s imaginative tendencies stay within verifiable bounds.

This principle underlies the most effective hallucination-prevention methods, which fall into several categories:

- Retrieval-augmented generation grounds responses in verified external information. Rather than relying solely on parametric knowledge, the model references retrieved documents, dramatically reducing fabricated content.

- Prompt engineering remains one of the most accessible techniques. Carefully designed prompts instruct models to acknowledge uncertainty, stick to the provided context, and show their reasoning. Key approaches include explicit uncertainty instructions, strict context grounding, chain-of-thought reasoning, mandatory citations, and structured output formats that separate facts from inferences.

- Multi-agent flows distribute responsibilities across specialized agents, creating layered verification that catches errors a single-agent system might miss.
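
Retrieval-augmented generation, the first technique in the list above, can be sketched roughly as follows. Word overlap stands in for the vector search a production system would likely use, and the function names, documents, and prompt wording are all assumptions made for the example.

```python
def retrieve(query, documents, top_k=2):
    """Rank documents by shared words with the query (a stand-in for vector search)."""
    query_words = set(query.lower().split())
    scored = [(len(query_words & set(doc.lower().split())), doc) for doc in documents]
    ranked = sorted(scored, key=lambda pair: pair[0], reverse=True)
    return [doc for score, doc in ranked[:top_k] if score > 0]

def build_grounded_prompt(query, passages):
    """Ask the model to answer only from the retrieved passages."""
    context = "\n".join(f"[{i}] {p}" for i, p in enumerate(passages, start=1))
    return (
        "Answer using only the numbered sources below. "
        "If they do not contain the answer, say you do not know.\n\n"
        f"Sources:\n{context}\n\nQuestion: {query}"
    )

# Hypothetical knowledge base
docs = [
    "Refunds are available within 30 days of purchase.",
    "Standard shipping takes 3 to 5 business days.",
    "Gift cards cannot be exchanged for cash.",
]
question = "Are refunds available after purchase?"
passages = retrieve(question, docs)
prompt = build_grounded_prompt(question, passages)
```

Only the refund passage is passed to the model, and the prompt tells it to decline rather than improvise when the sources fall short.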

Multi-agent flows represent a powerful architectural approach that, according to Olena, surpasses other methods in effectiveness. Here is why.

- Specialized role division assigns distinct functions: one agent generates content, another verifies facts, a third checks citations, and a fourth reviews logical consistency. Each focuses on its strength, preventing hallucinations in LLMs and eliminating single points of failure.

- Cross-validation and consensus: run multiple agents independently on the same query, comparing outputs to flag disagreements. This redundancy surfaces potential hallucinations that might otherwise slip through.

- Iterative refinement pipelines sequence agents so each improves the previous output: draft, critique, research, and edit. The result is systematic checkpoints where errors can be caught and corrected.

- Separation of retrieval and generation dedicates one agent purely to finding sources while another writes answers using only that context. Such an approach enforces grounding and makes fabrication significantly harder.
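
The cross-validation idea can be sketched with stub agents. In the example below each "agent" is a plain function standing in for an independent model call, and the 60% agreement threshold is an arbitrary assumption, not a recommended value.

```python
from collections import Counter

def cross_validate(question, agents, min_agreement=0.6):
    """Run every agent on the same question and accept only a clear majority answer."""
    answers = [agent(question) for agent in agents]
    top_answer, votes = Counter(answers).most_common(1)[0]
    agreement = votes / len(answers)
    return {
        "answer": top_answer if agreement >= min_agreement else None,
        "agreement": agreement,
        "needs_review": agreement < min_agreement,
    }

# Stub agents standing in for independent model calls
agents = [lambda q: "30 days", lambda q: "30 days", lambda q: "90 days"]
result = cross_validate("What is the refund window?", agents)
```

Two of three agents agree here, so the answer passes. When no answer reaches the threshold, the function returns no answer and flags the query for review instead of picking one arbitrarily.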

Additionally, Olena recommends a few practical prompt engineering techniques that work alongside multi-agent flows:

- Explicit uncertainty instructions directing models to admit what they don’t know.

- Context grounding prompts constraining responses to provided information.

- Chain-of-thought requests forcing LLMs to show their work.

- Citation requirements creating accountability and traceability.

- Few-shot examples demonstrating proper behavior.

- Self-reflection prompts asking models to review their own output.

- Query decomposition breaking complex questions into manageable parts.

- Negative constraints explicitly forbidding speculation or invention.

- Confidence scoring requesting explicit certainty levels for claims.
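
Several of these techniques can be combined in a single prompt template. The sketch below is one possible wording, not a tested or recommended prompt; the function name and rule text are assumptions.

```python
def build_guarded_prompt(question, context, examples=()):
    """Assemble a prompt that applies several anti-hallucination instructions at once."""
    rules = [
        "Use only the context below; do not speculate or invent details.",  # grounding + negative constraint
        "If the context does not answer the question, reply exactly: I don't know.",  # uncertainty
        "Explain your reasoning step by step before the final answer.",  # chain of thought
        "Cite the context sentence that supports each claim.",  # citations
        "End with a confidence level: high, medium, or low.",  # confidence scoring
    ]
    parts = ["Rules:"] + [f"{n}. {rule}" for n, rule in enumerate(rules, start=1)]
    for sample_question, sample_answer in examples:  # optional few-shot examples
        parts += [f"Example question: {sample_question}", f"Example answer: {sample_answer}"]
    parts += [f"Context:\n{context}", f"Question: {question}"]
    return "\n".join(parts)

prompt = build_guarded_prompt(
    "What is the refund window?",
    "Refunds are available within 30 days of purchase.",
)
```

Keeping the rules in a list makes it easy to switch individual instructions on or off and compare how each affects accuracy.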

Let’s look at a few more strategies for effective LLM orchestration below.

Model Fine-Tuning and Continuous Learning

Fine-tuning adjusts a pre-trained model using domain-specific data. For instance, a legal firm could retrain an LLM on verified court rulings to reduce factual drift when drafting case summaries. Continuous learning extends this process by regularly feeding in new, validated information.

The approach minimizes hallucination caused by knowledge cutoffs or generalization errors. However, it requires high-quality, bias-free datasets and ongoing human review to prevent introducing new inaccuracies.

Pre-Training Mitigation Techniques

Pre-training mitigation focuses on selecting, filtering, and structuring data before a model ever begins learning from it. For example, removing low-quality web text, duplicated entries, or unverified claims helps block the algorithm from “memorizing” misinformation. Some teams also introduce synthetic or counterfactual data, carefully designed examples that teach the LLM to question false correlations.

In-Context (During Response) Mitigation

The model is taught to verify its own reasoning as it generates outputs. This can involve prompting it to pause and reflect, asking itself questions like, “What is my evidence?” or “Can this be confirmed?”

The technique works well in conversational systems, where users expect transparency. For example, a customer support assistant might include confidence scores or offer to validate uncertain facts instead of providing a potentially false answer.

Post-Response Filtering and Verification

Once a system produces an output, an automated validation layer can act as a “safety net.” This component checks facts, cross-references external data, or flags statements that exceed a defined uncertainty threshold. Many enterprise LLM workflows now incorporate this step to reduce risk in production environments.
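
A validation layer of this kind could look roughly like the sketch below. It assumes an upstream checker has already attached a confidence score between 0 and 1 to each statement; the scores, the statements, and the 0.7 threshold are hypothetical.

```python
def filter_response(claims, threshold=0.7):
    """Split scored claims into those safe to show and those needing verification."""
    approved = [text for text, confidence in claims if confidence >= threshold]
    flagged = [text for text, confidence in claims if confidence < threshold]
    return approved, flagged

# Hypothetical (statement, confidence) pairs from an upstream checker
scored_claims = [
    ("Your plan renews on the 1st of each month.", 0.93),
    ("You are eligible for a full refund.", 0.41),
]
approved, flagged = filter_response(scored_claims)
```

The low-confidence refund statement is held back for verification rather than shown to the customer, while the rest of the answer goes through.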

Post-Training / Alignment Techniques

Even after training, LLMs can be aligned further to human expectations of truthfulness and reasoning. Methods such as Reinforcement Learning from Human Feedback (RLHF) or Direct Preference Optimization (DPO) fine-tune the algorithm’s priorities, teaching it to prefer accurate, cautious replies over speculative ones. Alignment ensures the model behaves in ways users perceive as trustworthy.

Defining Model Purpose and Use Cases

Not every AI application needs the same level of factual precision. A well-defined scope and intent set the foundation for realistic standards and mitigation planning. For example, a creative writing tool can tolerate some imaginative deviation, while a financial reporting assistant cannot. By matching the model’s behavior to its use case, organizations can allocate resources where they matter most.

Using Data Templates for Structured Inputs

Ambiguity in inputs often leads to hallucination. Data templates, predefined formats for organized prompt entry, help control how users provide information to the system. In customer service, a template might include separate fields for “issue description,” “client ID,” and “desired outcome,” giving the model a clear context. Such inputs reduce guesswork, making outputs more predictable and verifiable.
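
The customer service template described above might be expressed as a small data class. The class name, validation rule, and prompt layout are assumptions for illustration; only the three field names come from the example in the text.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class SupportRequest:
    """Structured input with the three fields from the example above."""
    issue_description: str
    client_id: str
    desired_outcome: str

    def __post_init__(self):
        # Reject blank fields so the model never has to guess missing context
        for name, value in vars(self).items():
            if not value.strip():
                raise ValueError(f"{name} must not be empty")

    def to_prompt(self):
        return (
            f"Issue description: {self.issue_description}\n"
            f"Client ID: {self.client_id}\n"
            f"Desired outcome: {self.desired_outcome}"
        )

request = SupportRequest("Charger arrived damaged", "C-1042", "Replacement")
prompt_text = request.to_prompt()
```

Because incomplete requests are rejected before they reach the model, a missing field becomes a form error instead of a gap the model fills in.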

Limiting Response Scope and Length

The longer a model continues writing text, the higher the risk of factual drift. Constraining output length or topic scope helps keep information focused and grounded. In enterprise applications, short, context-bound responses are often more reliable and easier to verify than open-ended narratives. Setting strict boundaries ensures precision over verbosity.

Continuous Testing and Refinement Cycles

Ongoing testing (automated and human) is critical for maintaining AI reliability. Periodic reviews catch new hallucination patterns introduced by data changes or model updates. This process mirrors quality assurance in software: define test cases, log results, refine inputs, and retrain as needed. Over time, iterative testing builds institutional knowledge about where the system struggles and how to correct it.
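
In code, such a cycle might start as a simple regression suite. The sketch below treats the model as any function from prompt to answer and uses substring checks, which are crude but easy to reason about; the test cases and canned answers are invented.

```python
def run_regression_suite(model, test_cases):
    """Return the cases where the model's answer lacks a required fact."""
    failures = []
    for case in test_cases:
        answer = model(case["prompt"])
        missing = [fact for fact in case["must_include"] if fact.lower() not in answer.lower()]
        if missing:
            failures.append({"prompt": case["prompt"], "missing": missing, "answer": answer})
    return failures

# A dictionary lookup stands in for a real model call
canned_answers = {
    "What is the refund window?": "Refunds are accepted within 30 days.",
    "Do you ship to Canada?": "Yes, shipping is free worldwide.",
}
test_cases = [
    {"prompt": "What is the refund window?", "must_include": ["30 days"]},
    {"prompt": "Do you ship to Canada?", "must_include": ["Canada", "5 to 7 business days"]},
]
failures = run_regression_suite(canned_answers.get, test_cases)
```

Running the same suite after every prompt change or model update turns "the bot seems worse" into a list of specific failing cases.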

MLOps Best Practices for Monitoring and Maintenance

These frameworks integrate regular tracking, alerting, and retraining pipelines into the lifecycle of generative systems. Key steps involve keeping track of hallucination metrics in production, setting automated alerts when error rates rise, and retaining transparent logs for auditability. These efforts ensure accountability, crucial for sectors like finance, healthcare, or insurance, where AI outputs must meet regulatory standards.
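
The tracking and alerting step can be sketched as a rolling counter. The class below is a hypothetical, in-memory illustration; a production setup would persist results and send alerts through its own monitoring stack, and the window size and 5% threshold are placeholder values.

```python
from collections import deque

class HallucinationMonitor:
    """Track a rolling hallucination rate and signal when it crosses a threshold."""

    def __init__(self, window=100, alert_rate=0.05):
        self.results = deque(maxlen=window)  # oldest results fall off automatically
        self.alert_rate = alert_rate

    def record(self, was_hallucination):
        self.results.append(bool(was_hallucination))

    @property
    def rate(self):
        return sum(self.results) / len(self.results) if self.results else 0.0

    def should_alert(self):
        return self.rate > self.alert_rate

monitor = HallucinationMonitor(window=10, alert_rate=0.2)
for flagged in [False] * 8 + [True] * 2:
    monitor.record(flagged)
```

A rolling window keeps the metric sensitive to recent regressions, for example after a model update, instead of averaging them away over months of history.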

LOFT Framework: Our Answer to the Hallucination Problem

As part of our ongoing efforts to make AI systems more transparent and dependable, Master of Code Global developed a framework called LOFT, or LLM-Orchestrator Open Source Framework, led by Oleksandr Gavuka, our engineer. LOFT was designed with one goal in mind — to make large language models more reliable by minimizing hallucinations before, during, and after generation.

Unlike most out-of-the-box architectures, LOFT doesn’t rely on a single filter or post-processing layer. It integrates multiple checkpoints of control and validation that work together as a defense system, ensuring that every reply remains grounded, consistent, and verifiable.

Here’s how it works at a high level:

- Input Control. Before a model even begins to generate a response, LOFT validates every user input and configuration using schema checks and type safety. This prevents malformed or incomplete data from leading the model astray.

- Execution Control. During generation, the framework supervises tool usage and ensures that every operation has a verified result. This makes sure nothing “imaginary” slips through the workflow.

- Output Validation. LOFT enforces strict output formats, verifies results against pre-defined schemas, and uses structured responses to reduce free-form hallucinations.

- Observability and Monitoring. Every action, tool call, and model output is fully traceable through Langfuse integration, creating complete visibility into how and why an answer was produced.

- Resilience and Recovery. In case something goes wrong (network delays, incomplete results, or failed tool calls), LOFT employs retry logic, rate limiting, and error handling to recover gracefully without passing faulty outputs forward.

Together, these five layers of defense create a controlled environment where hallucinations are not just detected, but actively prevented at multiple stages of the process.
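
To give a feel for how output validation and retry logic can work together, here is a generic sketch. It is not LOFT’s code or API: the function name, the JSON format, and the required fields are assumptions made for the example.

```python
import json

def validated_call(generate, required_fields, max_attempts=3):
    """Call `generate` until it returns a JSON object containing every required field."""
    last_error = None
    for attempt in range(1, max_attempts + 1):
        raw = generate()
        try:
            data = json.loads(raw)
        except json.JSONDecodeError as error:
            last_error = f"attempt {attempt}: invalid JSON ({error.msg})"
            continue
        if isinstance(data, dict):
            missing = [field for field in required_fields if field not in data]
        else:
            missing = list(required_fields)  # valid JSON, but not an object
        if not missing:
            return data
        last_error = f"attempt {attempt}: missing fields {missing}"
    raise ValueError(last_error)

# An iterator of canned replies stands in for repeated model calls
replies = iter([
    "not json",
    '{"answer": "30 days"}',
    '{"answer": "30 days", "source": "refund-policy"}',
])
result = validated_call(lambda: next(replies), ["answer", "source"])
```

The first two replies are rejected and only the third, which is well-formed and complete, is passed on. If every attempt fails, the caller gets an error rather than a malformed answer.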

For enterprise clients, this means something simple but essential: more trustworthy AI behavior. With LOFT, our models stay structured, auditable, and consistent across use cases — whether they power customer-facing chatbots, knowledge management tools, or generative automation systems.

FAQ

- Why do LLMs hallucinate?

They’re designed to predict the next most likely word, not to verify facts. When information in their training data is missing, unclear, or conflicting, they fill gaps by guessing what sounds right. This can lead to confident but incorrect answers.

- How to prevent hallucinations in AI agents using protocol layers?

Protocol layers act like safety checkpoints. One layer might retrieve verified data, another checks accuracy, and a third decides whether the answer should be shown to the user. By separating these steps (retrieval, reasoning, and verification), AI systems reduce the chance of made-up information reaching the end user.

- Which LLM has the least hallucinations?

As of 2026, top-tier models like GPT-4o, Gemini 2.0, and Claude 3.5 show the lowest hallucination rates, typically around 1–2% in standardized benchmarks. However, rates vary by task. Even the best can still produce errors in areas requiring complex reasoning or niche knowledge.

- Do all LLMs hallucinate?

Yes. Every current large language model hallucinates to some extent. The difference lies in how often and how severely it happens. Larger, better-aligned ones fabricate content less frequently, but complete elimination isn’t possible with today’s architectures.

- How does the quality of training data affect the likelihood of hallucinations in LLMs?

Training data acts as the model’s “memory.” If it’s full of outdated, biased, or incorrect information, the model learns those mistakes too. High-quality, diverse, and verified data reduces the chance of hallucination, while poor sources make them more frequent and harder to detect.

- Is it possible to achieve hallucination-free LLM applications?

Not entirely. Hallucination is a built-in part of how these systems generate output, so it can’t be eliminated completely. But it is possible to build solutions that appear nearly hallucination-free by combining reliable data retrieval, human review, and continuous monitoring. The goal is controlled, predictable accuracy.

Final Thoughts

As we’ve seen, hallucinations originate in how LLMs are designed: they predict what’s most likely to follow, not what’s guaranteed to be true. That’s why this phenomenon will always appear to some extent, especially when systems lack grounding or human oversight.

The scope of hallucinations in LLMs also depends on context: a minor factual slip in a marketing draft isn’t the same as a misstatement in a medical or legal document. The solution is to manage risks intelligently, using techniques like RAG, multi-agent verification, and structured prompting to keep AI responses verifiable and consistent.

As our AI Engineer Olena Teodorova says, “any system claiming zero errors is either extremely limited or not being honest about its capabilities.” Accepting this truth is the first step toward responsible, transparent, and reliable AI adoption.

If your organization is integrating Generative AI, our team can help you build secure, well-aligned systems that minimize LLM hallucinations and maintain user trust. Contact us today to discuss your specific case and decide the next steps.