Generative AI Slack Chatbot

Company Internal Slack App Integrated with OpenAI and Internal Product Ecosystem to Automate the Knowledge Base

The client is an enterprise-level technology product company with two major divisions – product development and professional services.


Challenge

The company has an extensive org structure with many product and customer teams that were not tightly connected, which made it hard to navigate its rich knowledge base and find information quickly. An employee first had to work out who the best go-to subject matter expert was and then start a live conversation with that person in Slack. Information was stored in multiple places, or was unavailable because the documentation had not been updated. The result was frustrated employees who spent days finding the information they needed.

Master of Code was asked to develop a Slack chatbot with OpenAI integration that serves as an internal knowledge base AI tool and answers questions about the company and its services immediately. The strength of the solution is that it combines the benefits of an LLM (data processing and enriched conversations) with the specific information the company owns. Human agents and other Slack bots can also be connected to extend the functionality.

What We Created

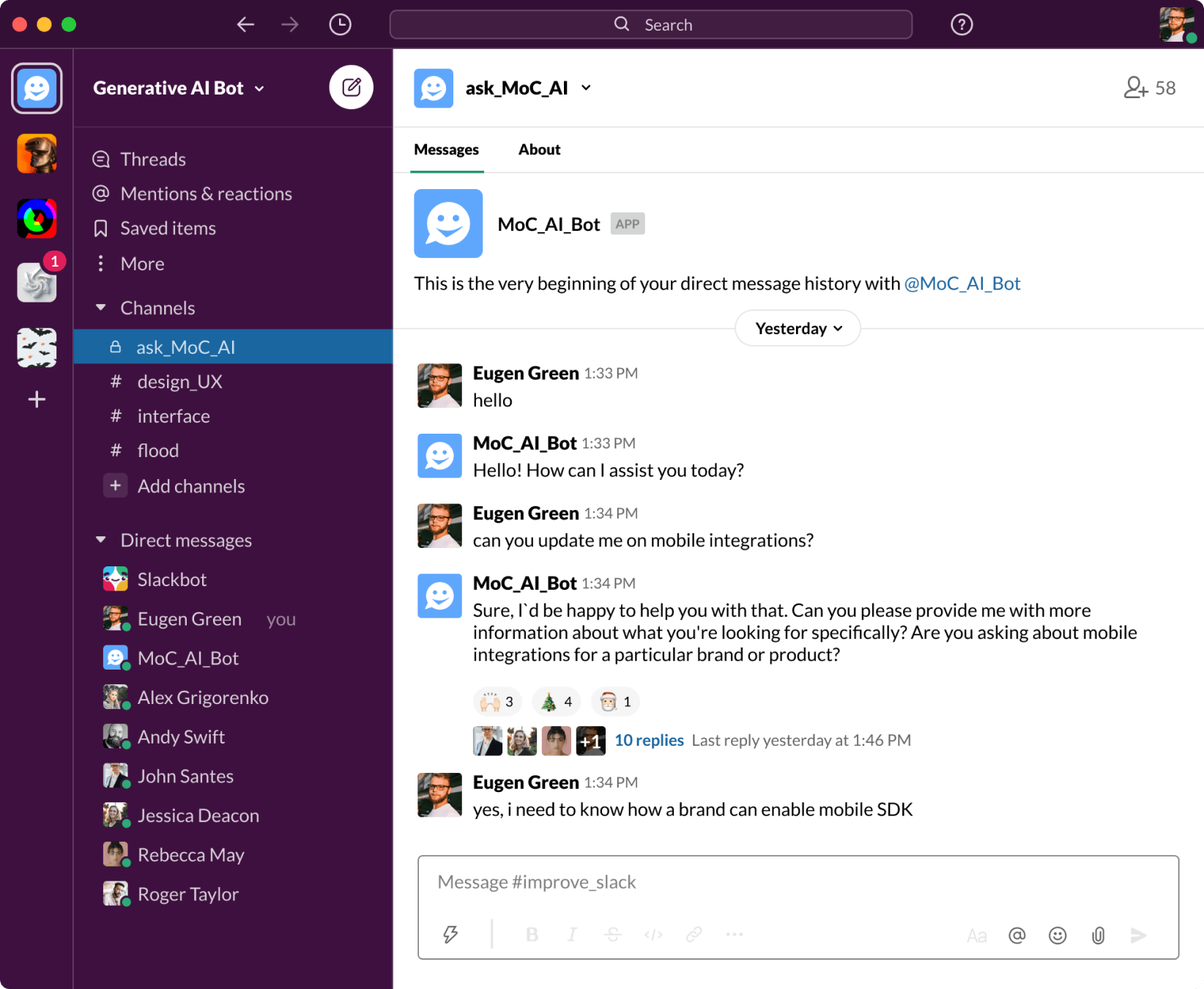

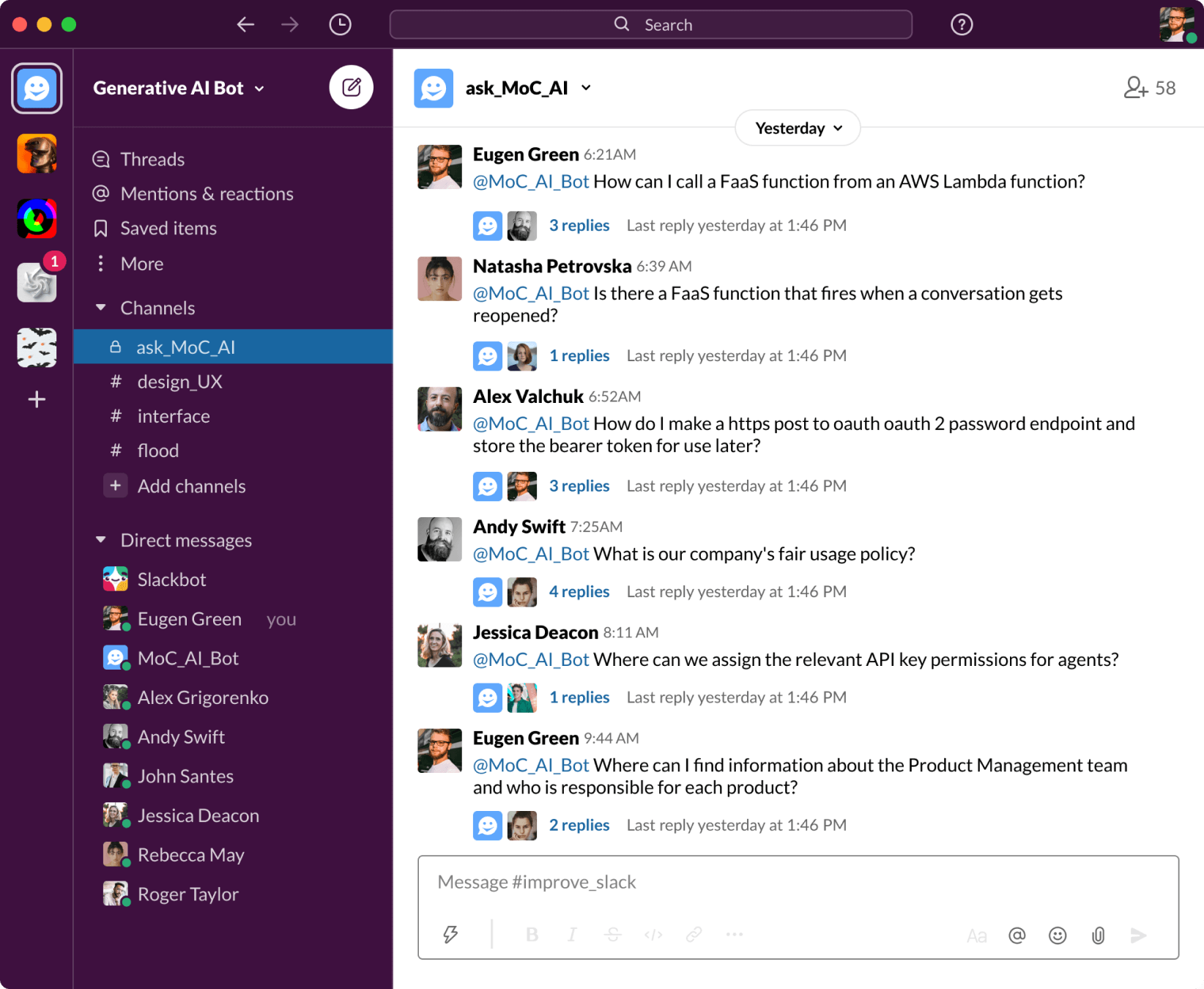

Slack connector

The company had already developed its own wrapper for OpenAI that enhances the LLM with domain-specific content (website pages, knowledge base articles, text/CSV files) and supports fine-tuning. The solution we created is a Slack connector for the company's product platform, together with a Slack application that consists of a few microservices and routing chatbots that process the requests.
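To make the connector's role more concrete, here is a minimal TypeScript sketch of what one request-handling step might look like. It is not the actual implementation: `KnowledgeBaseClient`, `handleMention`, and `extractQuestion` are hypothetical names, and the client's real OpenAI wrapper API is not described in this case study. Only the event fields (`user`, `text`, `channel`, `ts`, `thread_ts`) follow the shape of a Slack `app_mention` event.

```typescript
// Minimal subset of a Slack `app_mention` event payload.
interface SlackMentionEvent {
  user: string;       // ID of the employee who mentioned the bot
  text: string;       // raw message text, including the `<@BOTID>` mention
  channel: string;    // channel where the mention happened
  ts: string;         // timestamp that identifies the message
  thread_ts?: string; // set when the message is already inside a thread
}

// Hypothetical interface to the company's OpenAI wrapper (an assumption,
// the real wrapper's API is not public).
interface KnowledgeBaseClient {
  ask(question: string): Promise<string>;
}

// What the connector would hand back to Slack for posting.
interface SlackReply {
  channel: string;
  thread_ts: string;
  text: string;
}

// Strip `<@U123ABC>` style user mentions and collapse extra whitespace.
function extractQuestion(text: string): string {
  return text.replace(/<@[A-Z0-9]+>/g, " ").replace(/\s+/g, " ").trim();
}

// Turn a mention into a threaded reply by asking the knowledge base.
async function handleMention(
  event: SlackMentionEvent,
  kb: KnowledgeBaseClient
): Promise<SlackReply> {
  const question = extractQuestion(event.text);
  // Reply in the existing thread, or start one under the original message.
  const thread_ts = event.thread_ts ?? event.ts;

  if (question.length === 0) {
    return {
      channel: event.channel,
      thread_ts,
      text: "Please include a question after mentioning me.",
    };
  }

  const answer = await kb.ask(question);
  return { channel: event.channel, thread_ts, text: `<@${event.user}> ${answer}` };
}
```

In a deployment along these lines, the returned `channel`, `thread_ts`, and `text` values would typically be passed to Slack's `chat.postMessage` method so that the answer appears in the same thread as the question.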

LLM Orchestration Framework Toolkit (LOFT)

Phase 1 of the project was to create the Slack connector itself using a microservices architecture. Phase 2 added the orchestration level and routing bots that process specific LLM use cases: the LLM Orchestration Framework Toolkit (LOFT).
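The routing idea can be illustrated with a short TypeScript sketch. This is not LOFT's actual API, which is not shown in this case study. The `RoutingBot` interface, the keyword-based matching, and the example bot names (`hr`, `escalation`, `general-llm`) are all assumptions chosen for illustration. A real orchestration layer could just as well route by intent classification or by an LLM call.

```typescript
// Hypothetical contract for a routing bot: it declares whether it can
// handle a message and, if so, produces an answer.
interface RoutingBot {
  name: string;
  canHandle(message: string): boolean;
  handle(message: string): Promise<string>;
}

// Build a bot that matches on simple keywords (an illustrative strategy only).
function keywordBot(
  name: string,
  keywords: string[],
  reply: (message: string) => Promise<string>
): RoutingBot {
  const lowered = keywords.map((k) => k.toLowerCase());
  return {
    name,
    canHandle: (message) => {
      const text = message.toLowerCase();
      return lowered.some((k) => text.includes(k));
    },
    handle: reply,
  };
}

// Pick the first bot that claims the message, else fall back to the general bot.
function selectBot(
  message: string,
  bots: RoutingBot[],
  fallback: RoutingBot
): RoutingBot {
  return bots.find((bot) => bot.canHandle(message)) ?? fallback;
}

// Route a message and report which bot answered it.
async function route(
  message: string,
  bots: RoutingBot[],
  fallback: RoutingBot
): Promise<{ bot: string; answer: string }> {
  const bot = selectBot(message, bots, fallback);
  return { bot: bot.name, answer: await bot.handle(message) };
}

// Example bots (names, keywords, and replies are hypothetical).
const hrBot = keywordBot("hr", ["vacation", "payroll"], async () =>
  "Answering from HR knowledge base content."
);
const escalationBot = keywordBot(
  "escalation",
  ["talk to a human", "human agent"],
  async () => "Connecting you with a subject matter expert."
);
const generalBot: RoutingBot = {
  name: "general-llm",
  canHandle: () => true,
  handle: async (message) => `General LLM answer for: ${message}`,
};
```

Keeping each use case behind the same small interface means new bots can be added to the list without changing the router, which matches the case study's description of routing bots added on top of the connector in a second phase.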

End Product

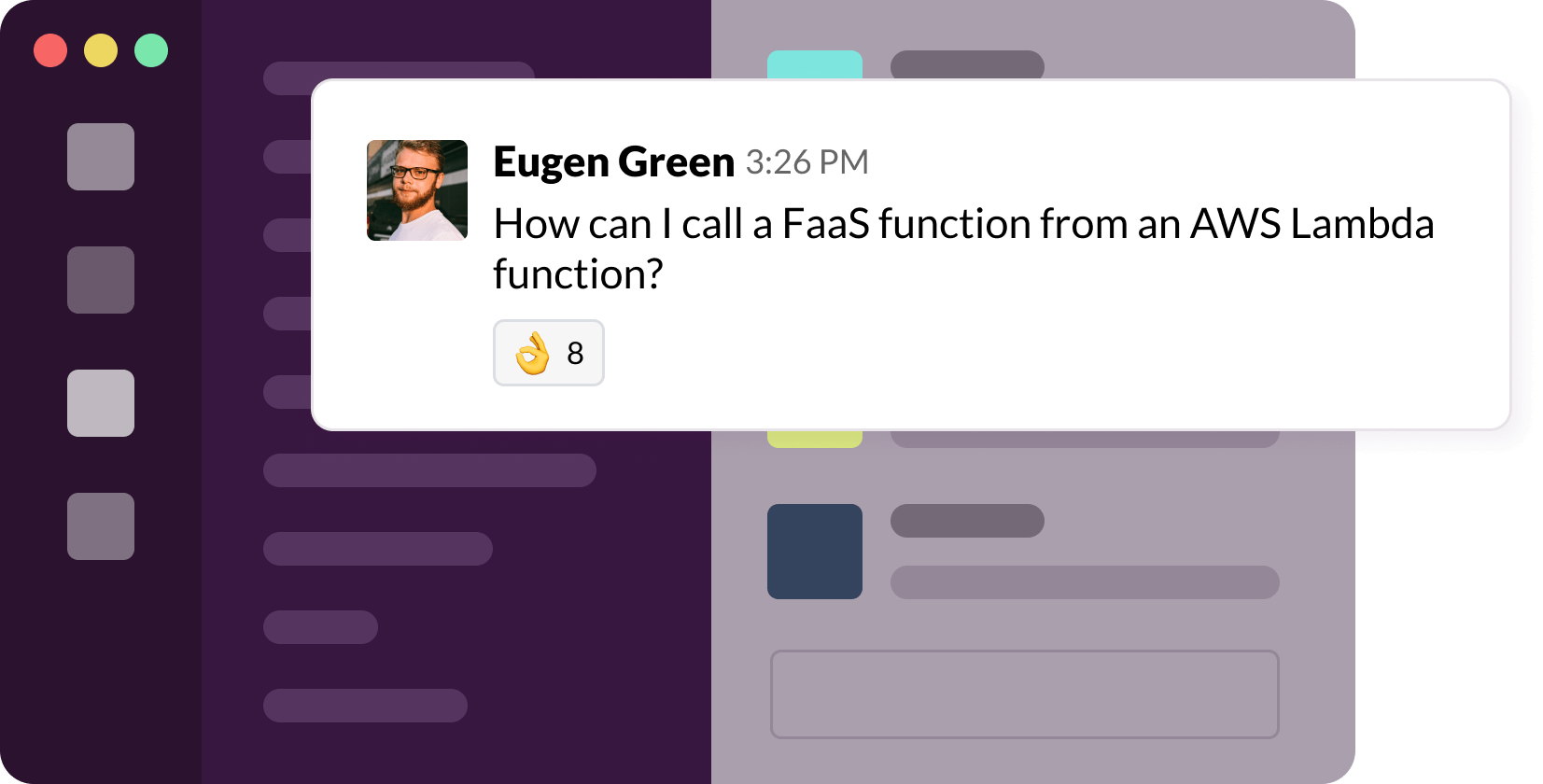

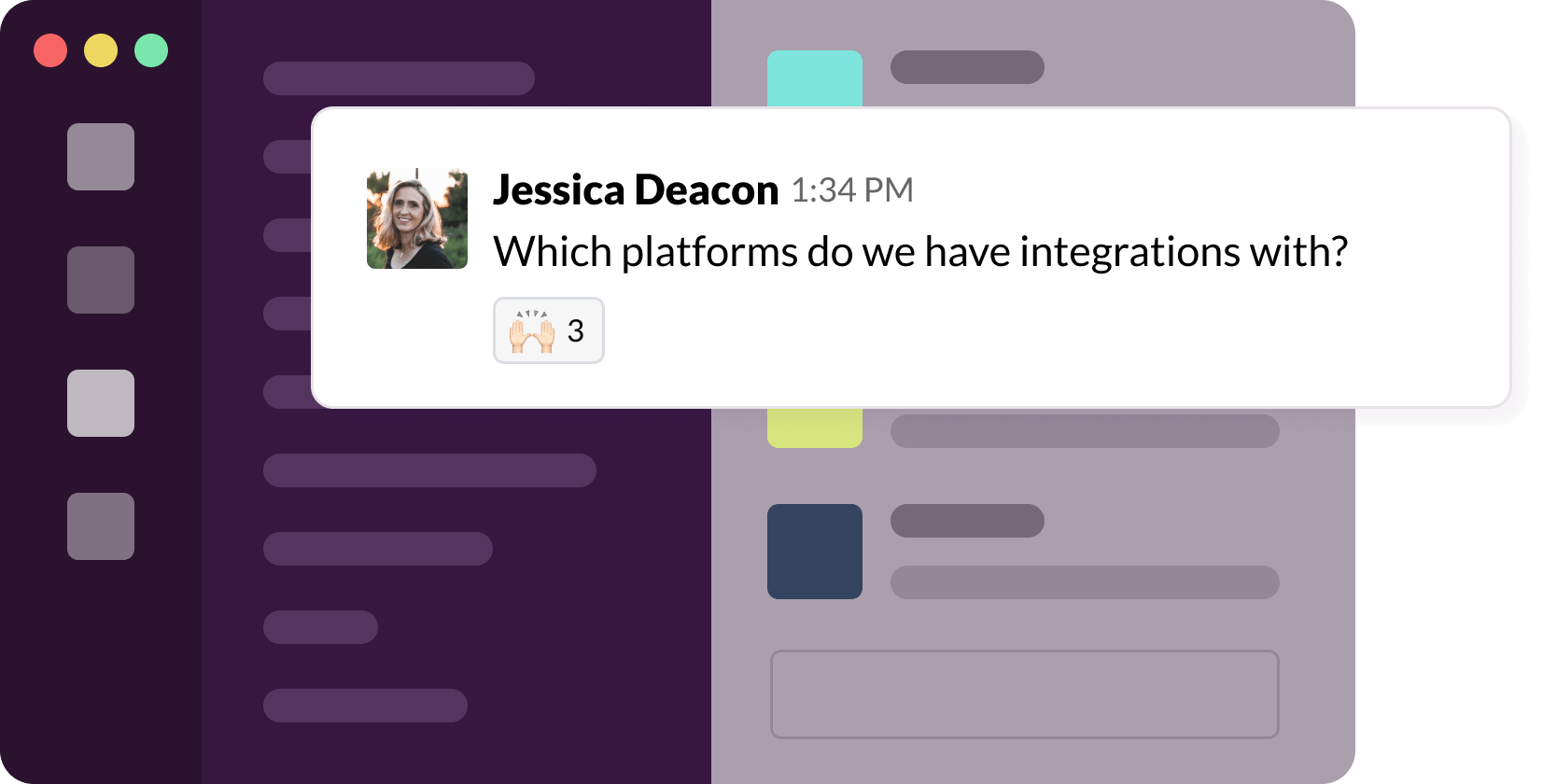

The end product is a Generative AI Slack Chatbot where company employees can easily get answers to questions about the company and its products, ranging from HR to technical topics. Because the Slack group also includes company employees, others can contribute to meaningful threads, and the chatbot can escalate a conversation to a human.

Team Structure

For this use case, Master of Code Global provided a multi-disciplinary team to expand on the primary bot solution, providing end-to-end build and support for the service. These roles include:

Technologies

- Node.js
- TypeScript
- DynamoDB
- OpenAI API
- AWS

Results

The Generative AI Slack Chatbot was successfully tested by company employees and received extremely positive feedback. It is currently used by around half of the company's personnel and contributes in the following areas:

Rapid Information Navigation

Helps engineers and managers navigate multiple sources of information quickly (the process takes a few minutes compared to a few days).

Enhanced Product Understanding

Product managers also use the questions asked to better understand the requests for their products.

Streamlined Workflows

The Generative AI Slack Chatbot reduces manual work (for example, involving Solution Engineers to answer questions) and serves as a self-service and cost optimization tool for the company.

In addition to the quantifiable results identified above, some further outcomes were recognized:

- Self-Service Solution

  The company is seriously considering productizing the solution and offering it as a customer self-service tool to reduce the load on the customer support department and increase product adoption and recognition.

- Error Reduction

  Fewer human errors in product configuration and greater efficiency for teams, who now have access to the information they need.

- Optimized Documentation

  The company documentation was updated and is continuously optimized in response to high demand.

The solution is a perfect example of using LLMs and automation to help internal teams work more efficiently and smarter without adding resources or placing additional overhead on existing ones.