Consumer expectations are shifting, with chatbots becoming an increasingly common point of interaction. A Tidio study found that a significant majority of customers (88%) have conversed with these tools within the past year. This highlights the need for businesses to explore the integration of conversational solutions for optimized experiences.

As a decision-maker, you’re likely balancing the benefits of technology with the financial implications. To estimate the full potential interactive agents hold for your business, you can start by discovering the compelling chatbot statistics we gathered for you.

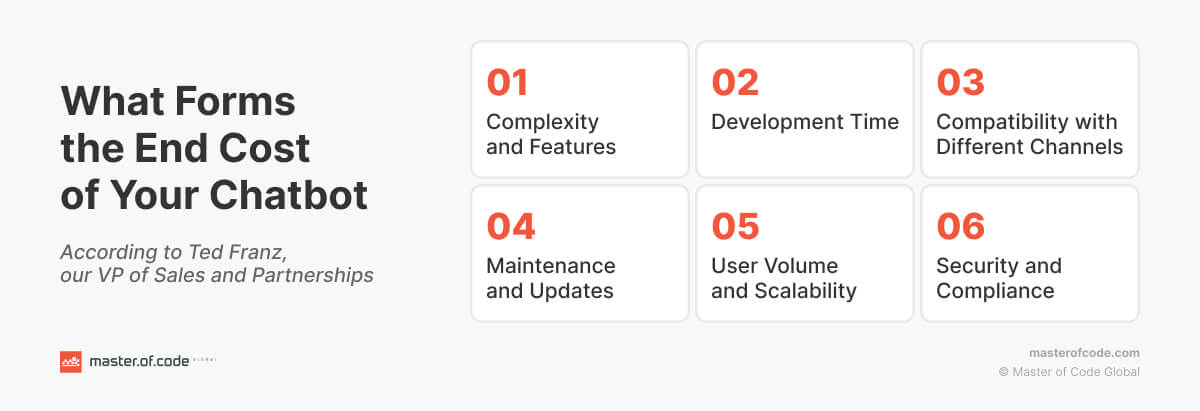

Before diving into integration, it’s also essential to get a clear picture of the associated expenses and possible ROI. Ted Franz, VP of Sales and Partnerships, and Olivera Bay, Senior Conversation Designer at Master of Code Global, will walk you through what actually shapes chatbot pricing.

We have drawn on more than two decades of expertise to help you make data-driven decisions that align with your business objectives. Read on to discover the top factors influencing the final chatbot cost, the financial nuances of custom development for your business size, and our detailed comparison of all the options. You will also find an expert list of best practices for choosing the right solution.


Factors Shaping Your Chatbot Pricing: Beyond the Base Price

The headline price of a solution is often misleading. Chatbots can handle a single customer interaction at a fraction of the cost of a live agent, with a typical range of $0.50-$0.70 per interaction. But what exactly influences the overall investment in each particular case? Ted Franz points to the factors outlined below.

The truth? There’s no one‑size‑fits‑all answer. The price of your solution hinges on a variety of aspects, and understanding these variables—including often overlooked hidden costs—is key to making a sound investment decision. To add a layer of transparency, consider the following strategic implications.

Technology Stack

Integrating your conversational solution with outdated internal tools may require custom adapters or even partial modernization, increasing the general expenses. While open-source libraries allow for flexibility, their use often requires higher in-house developer expertise, potentially raising labor rates.

Data Protection Measures

Overly simple chat-based platforms might tempt employees to bypass security protocols for quick implementation. This creates vulnerabilities that put your company at risk. Fines for data breaches in regulated industries can be staggering. Invest upfront in a secure chatbot to avoid devastating penalties down the line.

Performance Tracking

Superficial analytics won’t help you truly improve the bot. Look for platforms that offer qualitative insights into the user journey, including intent analysis and sentiment detection, even if they cost slightly more. Some analytics providers retain the right to use your data. Opt for solutions that give you full ownership to protect your competitive insights. There is no doubt that custom-developed bots tailored to your business specifics are the best possible solution, but they are also the most expensive one.

Deployment

Cloud-based software typically allows for quicker updates and adjustments, while having an on-site solution might mean lengthier deployment cycles for changes. Be cautious of cloud providers whose chatbot pricing model makes it difficult to migrate your bot or its data if needed, driving up long-term expenses.

Industry-Specific Requirements

In regulated fields, a chatbot is not merely a communication tool. It’s a potential legal liability. Invest in auditing processes and a development team experienced in your industry to avoid future issues. Even within a regulated niche, specialized domains might have their own standards (e.g., medical device vs. insurance bots), adding complexity.

AI Credit Consumption

If your bot uses external LLM APIs (like OpenAI), credit usage becomes an ongoing, variable cost. This is especially important for Generative AI deployments where each interaction consumes credits, which can scale rapidly with high user volumes.
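To get a feel for how this variable cost scales with volume, here is a minimal back-of-the-envelope sketch in Python. The token counts and per-token prices are hypothetical placeholders, not any provider’s actual rates, so substitute the figures from your provider’s current price list.

```python
def monthly_llm_cost(
    interactions_per_month: int,
    input_tokens_per_interaction: int,
    output_tokens_per_interaction: int,
    price_per_1k_input_tokens: float,
    price_per_1k_output_tokens: float,
) -> float:
    """Estimate monthly spend on an external LLM API, in dollars."""
    input_cost = input_tokens_per_interaction / 1000 * price_per_1k_input_tokens
    output_cost = output_tokens_per_interaction / 1000 * price_per_1k_output_tokens
    return interactions_per_month * (input_cost + output_cost)


# Hypothetical workload and prices, for illustration only.
for volume in (10_000, 100_000, 1_000_000):
    cost = monthly_llm_cost(volume, 800, 300, 0.001, 0.002)
    print(f"{volume:>9,} interactions/month -> ${cost:,.2f}")
```

Because the estimate is linear in volume, a tenfold increase in traffic means a tenfold increase in API spend, which is why it is worth modeling before launch.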

Data Storage/Export

While not typically priced per export, data storage on platforms like AWS or Azure can grow significantly for high‑volume bots. This is particularly relevant if hosting includes analytics, backups, or long‑term data retention.

Custom Feature Add‑Ons

Post‑launch enhancements—such as adding language support, advanced analytics, or adapting to API changes—require development time and incur additional costs. These should be budgeted for as part of the chatbot’s ongoing evolution.

Pro Tip: Run an AI Proof of Concept (PoC) before full rollout. This small‑scale trial can validate your use case, estimate AI credit consumption, reveal integration challenges, and prevent overspending on a solution that may not deliver the expected ROI.

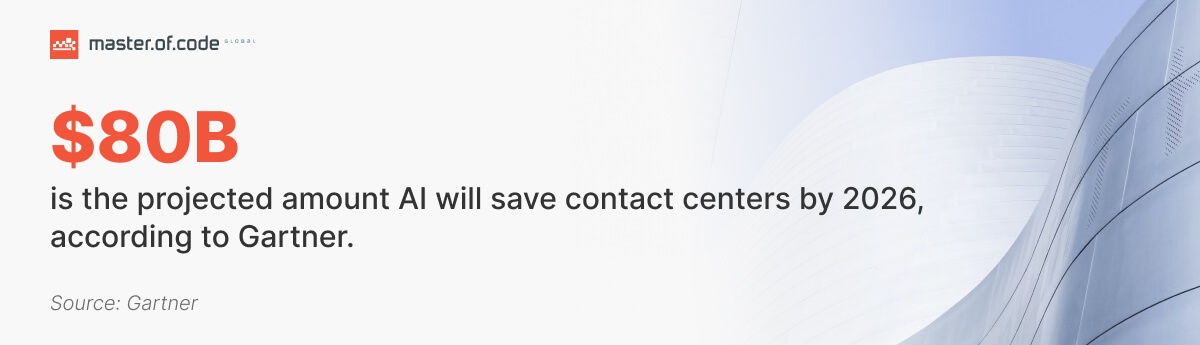

The drumbeat of automation grows louder by the day. McKinsey predicts nearly half of all work activities will be automated by 2045, while Gartner estimates that Conversational AI, the brains behind bots, will slash contact center costs by a staggering $80 billion by 2026. These statistics paint a clear picture: automation isn’t a “maybe” anymore, it’s a “when.” So, it’s just the right time to evaluate all the chatbot pricing models available.

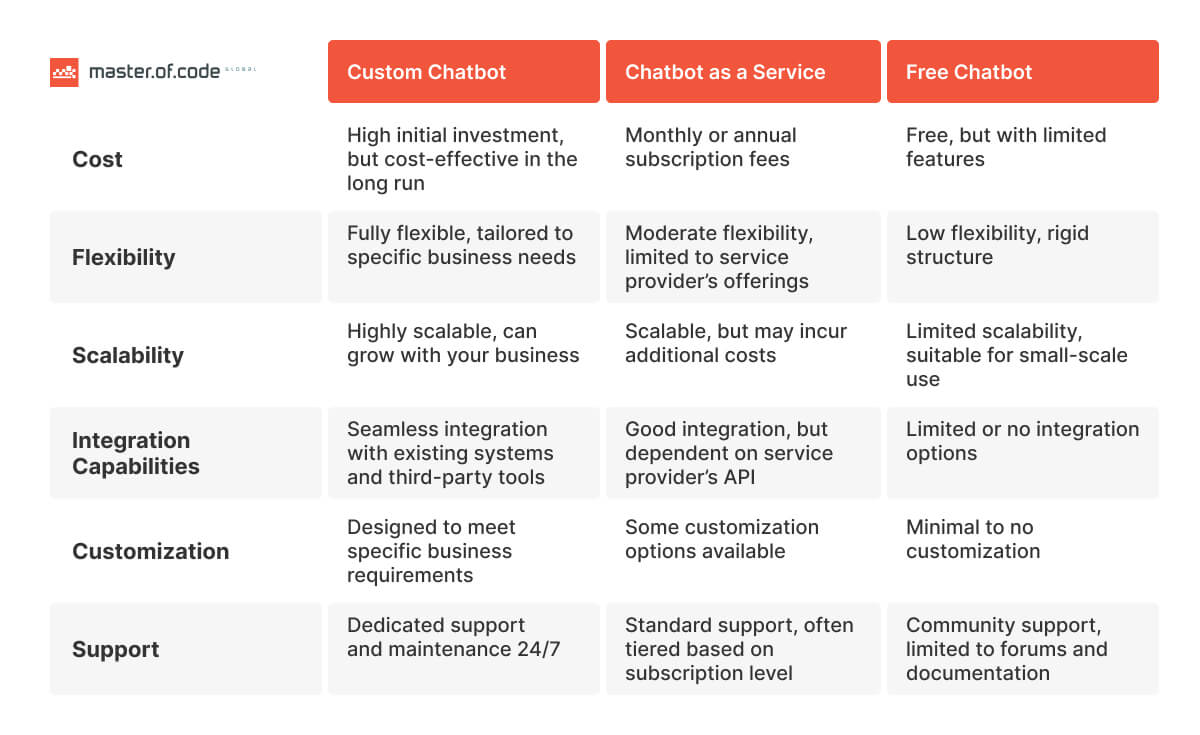

Broadly, the options on the market fall into two groups: custom software tailored to your business and chatbot-as-a-service (CaaS) solutions. The latter covers most of the products you can find online. Let’s take a closer look at both to find the most suitable tool.

Option #1: Custom Chatbot Pricing

The data speaks for itself: AI-powered customer service is paradigm-shifting. A 2022 Salesforce study revealed that organizations leveraging chatbots are 2.1 times more likely to deliver exceptional results. They also found that conversational solutions empower human employees – a whopping 64% of agents with AI tools can dedicate more time to resolving intricate problems.

But reaping these benefits comes with a price tag. Tailored AI bots, designed from the ground up to meet your specific needs, are the most expensive option. Let’s explore the two main avenues for custom development: in-house and agency approaches. The data here also varies according to the software type and functionality you require. So, what is the cost to build a chatbot?

To make it absolutely clear, take a look at this simple and concise video from IBM Technology on the core difference between standard rule-based and Generative AI-powered tools.

In-House Team: Building from the Ground Up

It requires assembling a team with diverse skill sets and significant upfront costs. Here’s a breakdown of the key specialists you’ll need, along with their average US salaries (figures are approximate and may vary based on experience and actual location):

- Project Manager (~$88,000): Responsible for overseeing the entire project lifecycle, ensuring it stays on budget and timeline.

- Chatbot Developer ($90,000 – $120,000): Possesses expertise in programming languages like Python and Java, and development platforms like Rasa or Dialogflow.

- Natural Language Processing (NLP) Engineer ($110,000 – $140,000): Focuses on training the model to understand and respond to human language.

- User Interface/User Experience (UI/UX) Designer ($80,000 – $110,000): Creates a user-friendly and intuitive interface.

- QA Tester ($70,000 – $90,000): Ensures the chatbot functions flawlessly and delivers a positive user experience.
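As a rough illustration, the salary figures above can be summed into an annual base payroll range. The sketch below assumes one full-time hire per role for a full year and leaves out benefits, recruiting, tooling, and overhead, so treat the result as a floor rather than a budget.

```python
# Approximate annual US salaries quoted above, as (low, high) in dollars.
TEAM_SALARIES = {
    "Project Manager": (88_000, 88_000),
    "Chatbot Developer": (90_000, 120_000),
    "NLP Engineer": (110_000, 140_000),
    "UI/UX Designer": (80_000, 110_000),
    "QA Tester": (70_000, 90_000),
}


def annual_payroll_range(salaries: dict[str, tuple[int, int]]) -> tuple[int, int]:
    """Sum the low and high ends of each role's salary range."""
    low = sum(lo for lo, _ in salaries.values())
    high = sum(hi for _, hi in salaries.values())
    return low, high


low, high = annual_payroll_range(TEAM_SALARIES)
print(f"Base payroll for one of each role: ${low:,} - ${high:,} per year")
# -> Base payroll for one of each role: $438,000 - $548,000 per year
```

A project that takes only part of a year, or that shares specialists with other work, would consume a proportionate share of this figure.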

Cost Breakdown by Complexity:

- Rule-Based Chatbot Pricing ($50,000 – $100,000): This is the most basic and cost-effective option. It relies on predefined rules and decision trees to navigate conversations and provide predetermined responses. This makes such bots well-suited for handling simple, frequently asked questions or providing basic troubleshooting steps. However, their inability to understand natural language nuances or adapt to unexpected inquiries limits their usefulness for complex scenarios.

- AI Chatbot Cost ($100,000 – $200,000): Such a solution takes things a step further by incorporating machine learning algorithms. This allows the bot to analyze vast amounts of conversation data and user interactions. Over time, the AI learns to recognize patterns and identify user intent, enabling it to provide more dynamic and relevant responses. AI chatbots are ideal for handling a wider range of inquiries, including those requiring some level of explanation or problem-solving. They can also be integrated with CRM systems or knowledge bases to access and share relevant information during conversations.

- Generative AI Chatbots ($200,000+): They represent the cutting edge of technology. Leveraging advanced artificial intelligence techniques, like deep learning and natural language generation, these tools create human-quality text. This enables chatbots to engage in nuanced conversations that mimic natural human interactions. Gen AI bots are particularly well-suited for scenarios where creative text generation or highly personalized communication is essential. For example, they can be used to craft compelling marketing copy, offer individualized product recommendations, or even write different creative text formats, like poems or code.

Want a more detailed breakdown of AI solution costs and what factors influence pricing? Read also: How Much Does AI Cost?

Advantages:

- Complete control over the project and intellectual property.

- Ability to tailor the solution precisely to your needs.

- Potential for long-term chatbot cost savings if you plan on building multiple solutions.

Disadvantages:

- Significant upfront investment in hiring and training a team.

- Longer development timelines compared to other options.

- Requires ongoing maintenance and updates.

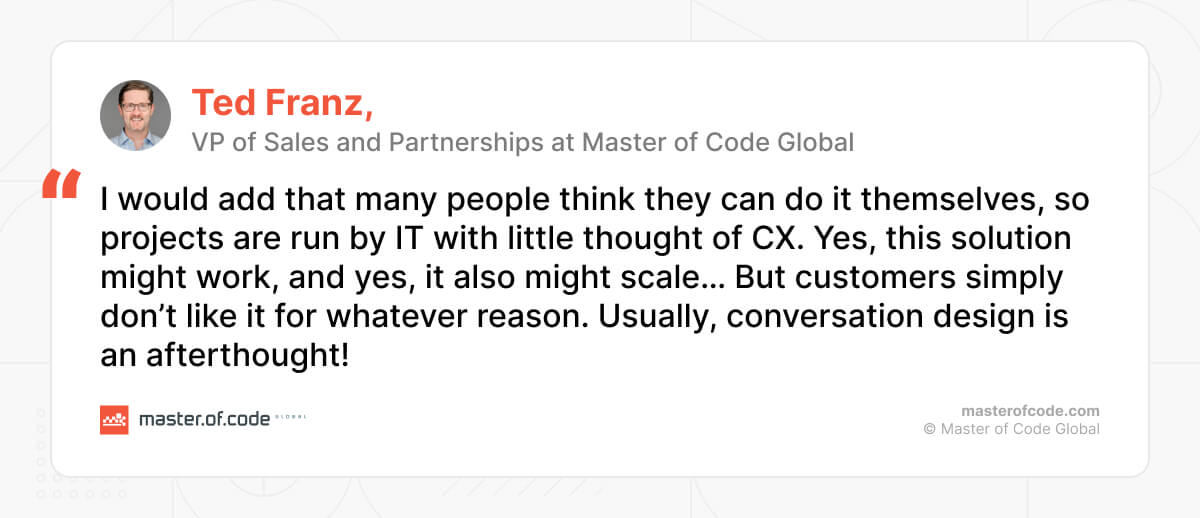

Anyway, there are also hidden pitfalls that not every company takes into account while building their in-house team. Ted Franz states: “I would add that many people think they can do it themselves, so projects are run by IT with little thought of CX. Yes, this solution might work, and yes, it also might scale… But customers simply don’t like it for whatever reason. Usually, conversation design is an afterthought!”

Moreover, when calculating the final cost, many companies consider only the development phase. Ted Franz also highlights a common misconception that chatbots are a one-time investment. In reality, there are ongoing costs for maintenance, updates, training, and scaling as the user base grows. How much does a chatbot cost to run? This depends on the model you choose, as well as your company’s size and the tool’s functionality. The sum may range from the price of a basic subscription plan to an entire department’s monthly payroll.

Chatbot Agency Pricing: Expertise on Demand

Building an in-house team grants complete control, but it comes at a cost. Partnering with a chatbot development company offers an alternative path – one that prioritizes speed and potentially lower upfront investment. Let’s explore the breakdown of this approach, including estimated expenses for different options.

Cost Breakdown by Complexity:

- Rule-Based Chatbot Price ($15,000 – $30,000): Agencies can often develop this type of bot more cost-effectively than an in-house team can. Their established workflows and experience with pre-defined structures can streamline the process.

- AI Chatbot Pricing ($75,000 – $150,000+): In this case, costs can vary depending on the level of customization and desired functionalities. Generally, it might be slightly more expensive than building an in-house team due to the agency’s expertise and established development processes. However, the advantage lies in the potentially faster turnaround time and access to a wider range of specialists.

- Generative AI Chatbot ($150,000+): For these cutting-edge tools, AI development companies may charge a premium due to the specialized skills required. However, the cost might still be comparable or even lower than building an in-house team, considering the need to hire and train NLP engineers with expertise in deep learning and natural language generation.

Expenses:

There are three popular payment models to consider:

- Hourly Rates: $50 – $200+ per hour depending on the specialist’s experience and agency location.

- Project-Based Fees: $15,000 – $300,000+ depending on chatbot complexity and functionalities.

- Retainer Model: A monthly fee (~$5,000+) for ongoing maintenance and support.

Advantages:

- Faster Development: Third-party service providers possess pre-assembled teams with the necessary expertise. This allows for quicker project completion compared to building an in-house team.

- Reduced Chatbot Cost: You eliminate the need to hire and manage a full-time staff, potentially reducing overall expenses, especially for simpler projects.

- Access to Expertise: Agencies have experience building bots for various industries and purposes. You can leverage their knowledge to create a solution that aligns with your specific needs.

While the initial investment in a chatbot can seem substantial, the potential return (ROI) is undeniable. Here are some success stories that illustrate the payoff:

- Fashion Retailer Streamlines Operations: TechStyle, with millions of members and a high volume of customer interactions, leveraged chatbots to automate tasks and deflect basic inquiries. This resulted in a staggering $1.1 million saved in operational costs within the first year, while simultaneously achieving a member satisfaction rating of 92%.

- London Borough Council Enhances Customer Experience: Barking & Dagenham implemented an advanced multi-department AI assistant, leading to a significant cost reduction of approximately £48,000 within just six months. More importantly, customer satisfaction soared by an impressive 67%.

These examples showcase the far-reaching implications of conversational solutions. By automating repetitive tasks and providing around-the-clock support, bots can free up your human agents to handle complex customer interactions. This translates to a more efficient workforce, happier clients, and a potentially substantial ROI that justifies the initial chatbot pricing.

Option #2: Chatbot as a Service Pricing

CaaS offers a convenient and potentially cost-effective way to implement the necessary functionality. However, it’s crucial to understand the different subscription models and their suitability for your company’s size and needs. Here’s a breakdown to help you navigate the options.

Small Companies

Many CaaS platforms offer free tiers with limited features and functionality. While a good starting point for testing the waters, these plans usually restrict conversation complexity, user interactions, and data storage. They might be suitable for very basic applications but won’t provide the power and flexibility needed for most customer service or lead generation scenarios.

Small businesses may also find the pay-per-request model quite suitable. With this option, you are charged only for the chatbot interactions you actually use. We will explore this opportunity more in the next section.

Mid-Size Companies

Most CaaS providers offer tiered subscription plans with varying feature sets and limitations. These plans typically include a set number of monthly conversations, data storage capacity, and access to specific functionalities. Carefully evaluate your needs and choose a plan that provides enough features without unnecessary extras. Here, a custom solution might offer a better fit if you require a highly specialized chatbot tailored to your unique workflows or brand voice.

Customized Enterprise Chatbots

For large-scale deployments and complex functionalities, most CaaS providers offer customized enterprise plans. These packages are typically tailored to the specific needs of the organization and often come with dedicated account management and support. While this level of customization can be beneficial, it’s important to weigh the cost against the potential benefits. A custom development approach might provide a more cost-effective solution in the long run, especially if you require a high degree of control over the chatbot’s design, integration capabilities, and data security.

Free Chatbots

While free solutions can be tempting, remember the adage “you get what you pay for.” These options often come with significant limitations in functionality, customization, and scalability. They might be suitable for basic experimentation, but they’re unlikely to meet the demands of a growing business.

Pay-Per-Request

These plans can be a good option for companies with unpredictable chat volumes. However, they might not be ideal for businesses that anticipate consistent user engagement. The per-request cost can add up quickly if your chatbot usage increases significantly. It’s essential to factor in potential scaling from the beginning.
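One way to judge when pay-per-request stops making sense is to compute the monthly volume at which it costs as much as a flat subscription. The sketch below is illustrative only: the $500 flat fee and $0.05 per-request price are hypothetical, and prices are expressed in whole cents to keep the arithmetic exact.

```python
def break_even_requests(flat_fee_cents: int, price_per_request_cents: int) -> int:
    """Smallest monthly request count at which pay-per-request costs at least the flat fee."""
    if price_per_request_cents <= 0:
        raise ValueError("price_per_request_cents must be positive")
    # Ceiling division without floating point.
    return -(-flat_fee_cents // price_per_request_cents)


def cheaper_plan(requests_per_month: int, flat_fee_cents: int, price_per_request_cents: int) -> str:
    """Name the cheaper plan for a given monthly volume."""
    usage_cost = requests_per_month * price_per_request_cents
    if usage_cost < flat_fee_cents:
        return "pay-per-request"
    if usage_cost > flat_fee_cents:
        return "subscription"
    return "either"


# Hypothetical plans: $500/month flat vs. $0.05 per request.
print(break_even_requests(50_000, 5))   # -> 10000
print(cheaper_plan(4_000, 50_000, 5))   # -> pay-per-request
print(cheaper_plan(25_000, 50_000, 5))  # -> subscription
```

If your expected volume sits well above the break-even point, or is growing toward it, a tiered subscription is likely the safer choice.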

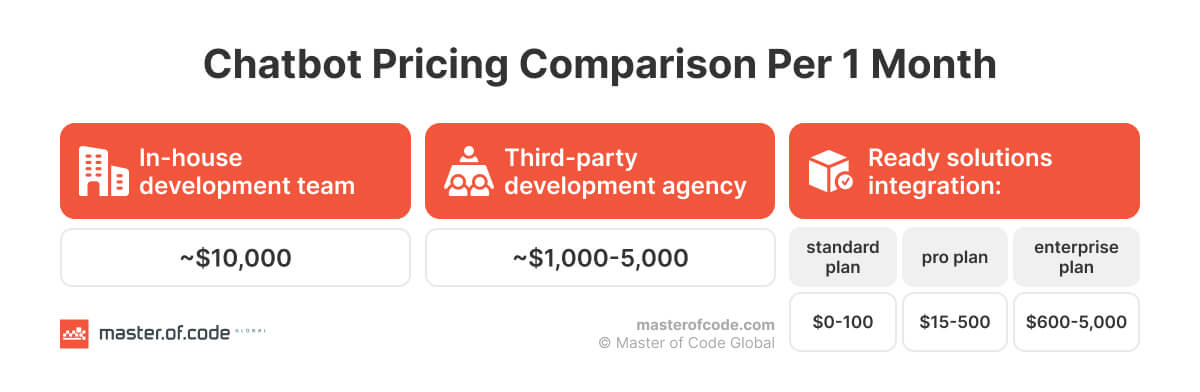

So, how much does a chatbot cost? See an approximate calculation in the table below.

As you can see, the ideal CaaS subscription plan depends on your company’s size, budget, and chatbot requirements. While it offers a convenient entry point, custom development solutions provide unmatched flexibility, control, and scalability. Carefully assess your needs and weigh the advantages of each approach before making a decision.

Choosing the Optimal Chatbot Pricing Path for Your Business

The comparison of custom development and chatbot-as-a-service pricing options hinges on a profound understanding of your business priorities. Here are some key criteria to consider while choosing the best-fitting solution:

Complexity of Your Needs

- Custom Development: Ideal for chatbots requiring intricate functionalities, integration with multiple systems, or a unique brand voice. Custom tools offer full control over style, development, and data security. This is particularly important for sensitive industries or scenarios where brand consistency is crucial.

- CaaS: These platforms often provide pre-built templates and functionalities, making them a faster and more cost-effective option for straightforward applications. However, they have limitations in customization, especially when it comes to replicating complex workflows or integrating with specific internal systems.

User Expectations

- Custom Development: Allows for meticulous tailoring of the conversation flow and UX to meet your specific customer expectations. Given the growing demand for natural and engaging interactions, it empowers you to create a chatbot that feels more human-like and fosters trust with users. Consider the statistic that 74% of internet users prefer using conversational tools for basic issues and FAQs. This indicates that even basic interactions can benefit from a well-designed conversation flow.

- CaaS: Limited customization options might restrict your ability to cater to specific user expectations. While CaaS platforms offer various functionalities, they might not provide the nuanced control needed to create a truly personalized and engaging UX.

To better understand what your clients really want to see while engaging with your virtual assistant, we recommend watching this insightful video by IBM Technology.

Development Speed and Budget

- Custom Development: Requires a longer development timeline and typically involves a higher initial investment. You’ll need to assemble or hire a team of specialists and factor in ongoing maintenance expenses.

- CaaS: Platforms handle maintenance and updates, but subscription fees can add up over time, especially for complex bots or high chat volumes.

Control and Scalability

- Custom Development: Provides complete control over the chatbot’s design, functionality, and data security. This allows for seamless integration with existing systems and ensures your data remains within your organization. Custom solutions are also highly scalable, allowing you to add functionalities or integrate with new platforms as your business grows.

- CaaS: Limited control over the customization and data storage. Integration with external systems might be restricted depending on the platform’s capabilities. Scalability can also be an issue, as some CaaS plans have limitations on user interactions or data storage.

Again, how much does a chatbot cost? Olivera Bay comments: “I would compare chatbot pricing to any product cost in 2 parts. First, getting the foundation done right – for example the foundation of an actual house, we wouldn’t want to compromise here, we gotta do it right or it becomes risky and we have to re-do it down the line. Second, the house should be maintained, with ongoing enhancements and repairs. A house is never an after-thought, and if it is, it becomes much more costly and difficult to fix.”

Chatbot ROI Examples

Real‑world chatbot implementations show just how quickly businesses can recover their investment.

- Fashion Retailer – TechStyle deployed AI chatbots to automate routine customer interactions across millions of members, achieving substantial operational savings and higher customer satisfaction.

- Local Government – Barking & Dagenham Council introduced a multi‑department AI assistant, reducing service costs while significantly improving citizen satisfaction.

- Banking – RBC Royal Bank implemented a voice and chat‑enabled virtual assistant to streamline service, shorten wait times, and strengthen customer loyalty.

These examples highlight how well‑designed chatbots not only lower operational expenses but also drive measurable revenue growth. With the right strategy, payback periods can be as short as a few months, making AI chatbot pricing an investment that delivers rapid and sustainable returns.
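To check whether a payback period of a few months is realistic for your own case, a simple calculation helps. The sketch below is a rough model under stated assumptions: the build cost, monthly running cost, number of deflected interactions, and live-agent cost per interaction are all hypothetical inputs, and the bot cost per interaction is taken from the $0.50-$0.70 range mentioned earlier.

```python
from typing import Optional


def monthly_savings(deflected_interactions: int, agent_cost: float, bot_cost: float) -> float:
    """Savings from interactions handled by the bot instead of a live agent."""
    return deflected_interactions * (agent_cost - bot_cost)


def payback_months(upfront_cost: float, monthly_running_cost: float, savings: float) -> Optional[float]:
    """Months needed to recover the upfront cost, or None if the bot never pays back."""
    net_monthly_benefit = savings - monthly_running_cost
    if net_monthly_benefit <= 0:
        return None
    return upfront_cost / net_monthly_benefit


# Hypothetical scenario, for illustration only.
savings = monthly_savings(deflected_interactions=5_000, agent_cost=6.00, bot_cost=0.60)
months = payback_months(upfront_cost=150_000, monthly_running_cost=5_000, savings=savings)
print(f"Monthly savings: ${savings:,.0f}")
print("No payback" if months is None else f"Payback period: {months:.1f} months")
```

The model ignores ramp-up time and revenue effects, so it is best used to compare scenarios rather than to forecast an exact date.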

Industry-Specific Chatbot Pricing

Chatbot pricing differs significantly across industries based on security requirements, integration complexity, and expected performance. Here are typical cost ranges according to industry insights, including Crescendo and WotNot:

- Healthcare: $50 K–$100 K+ — These bots must comply with HIPAA standards, integrate with EHR systems, handle sensitive patient data, and undergo rigorous validation processes.

- Finance: $75 K+ — Requires built‑in multi-factor authentication, fraud detection mechanisms, secure transaction handling, and adherence to strict regulatory frameworks.

- eCommerce & Retail: $25 K–$50 K — Includes recommendation engines, integration with inventory and payment systems, scalable load balancing during high traffic, and personalized interaction logic.

WotNot’s public pricing—starting from $299/month for high-volume AI support and custom integrations—illustrates how platform fees scale with usage, underlining the importance of usage and compliance considerations in chatbot ROI planning.

By aligning your budget with industry demands around security, performance, and features, you’ll plan more accurately and avoid costly surprises mid-project.

A Decision-Making Matrix for Chatbot Success

AI Chatbot Pricing FAQs: Unveiling the Bottom Line

How much does an AI chatbot cost?

Typically, the AI chatbot pricing range is between $75,000 and $150,000+ for custom development. CaaS solutions might offer AI-powered tools for a lower starting point, but with limitations.

What are the average chatbot prices for in-house or third-party agency development?

- Rule-based: $15,000 – $30,000

- AI-powered: $75,000 – $150,000+

- Generative AI: $150,000+

How much does it cost to develop a chatbot with Generative AI?

It can be upwards of $150,000 due to the specialized NLP engineers required. CaaS solutions might offer similar functionalities for a lower price, but with potentially less control over the overall performance.

How can I estimate the cost of my Facebook chatbot?

Prices here can vary significantly. For basic rule-based bots, it can range from $2,000 to $10,000, while more advanced AI-powered tools might cost between $10,000 and $50,000.

What is a typical WhatsApp chatbot cost?

Monthly subscription fees for third-party platforms that support such bots can range from $50 to $500, depending on the features and level of support. Custom development price varies from $5,000 to $30,000 and above.

What hidden chatbot costs should I plan for?

Beyond AI chatbot pricing, plan for integration fees, API usage, ongoing AI model updates, advanced analytics, cloud hosting, and compliance with data privacy laws.

How to reduce chatbot cost without losing quality?

Start with an MVP focused on core use cases, use pre‑trained NLP models, and consider platform providers that allow customization without full custom build costs.

What is the cost of implementing chatbots in e‑learning?

Rule‑based bots can start at $5,000–$15,000. AI‑powered chatbots with personalization and LMS integration may range from $30,000–$80,000.

How much does Conversational AI cost?

Conversational AI projects often start around $75,000 and can exceed $200,000 for Generative AI with deep integrations. Ongoing costs depend on scale and complexity.

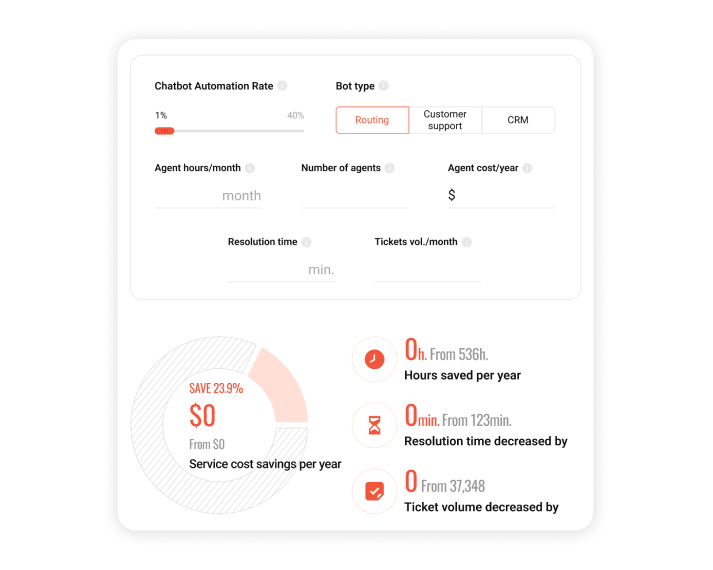

To determine the possible return on investment more accurately, you can try our convenient ROI Calculator.

Conclusion

While the initial cost of a chatbot might seem significant, the potential return on investment is undeniable. Consider the cost savings associated with streamlined workflows, 24/7 support, and improved customer satisfaction. Chatbots can be a wise investment for businesses seeking to optimize efficiency and profitability.

Master of Code Global is here to help you select the right solution for your current and future business needs. Partner with us for an enjoyable and transparent development process, engaging and captivating conversation design, expert guidance, and exponential growth.

Ready to build your own Conversational AI solution? Let’s chat!