Welcome to our tutorial on multimodal Conversation Design. Today we’ll take an in-depth look at what multimodal design is and learn more about its related components. Then, we’ll discuss conversation design best practices, use cases, and the outlook for the future.

From the moment we wake up to the moment we go to bed, we absorb information in a variety of ways. We combine multiple senses, or modalities – sight, touch, taste, smell, sound – to draw singular conclusions. Our senses work together to build our understanding of the world and the challenges around us. With feedback and experience, they help reinforce certain behaviors and discourage the repetition of others. The more we process these combined or multimodal experiences, the more tangible and impactful they become.

Multimodal Conversation Design: a Human-Centric Approach

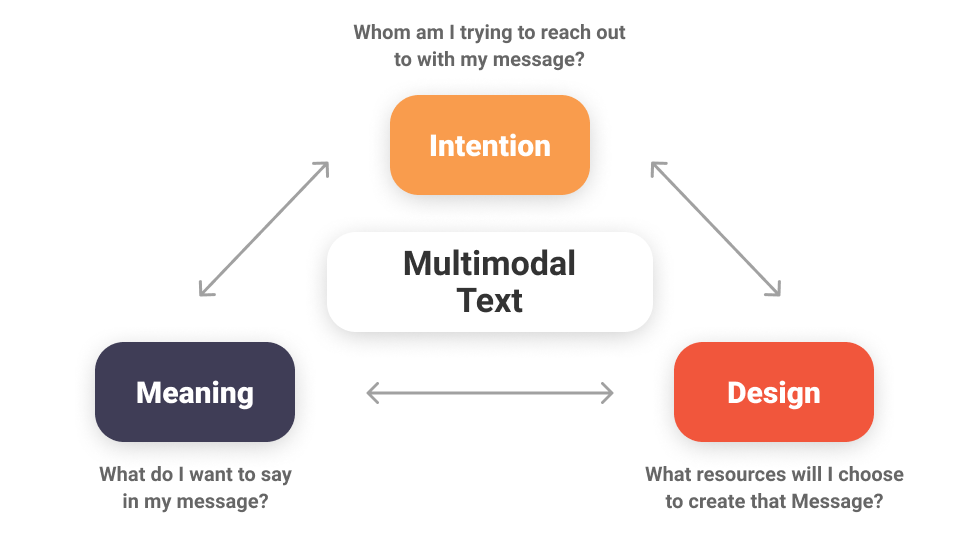

If multimodal is our natural human state, it stands to reason that multimodal design should be a natural outlook. Taking a human-centric approach, multimodal design mixes art and technology to create a user experience (UX) that combines modalities across multiple touch points. Done well, this approach produces an interface in which the modalities fit together organically to replicate an interpersonal human interaction. One example is pairing voice technology as the input mechanism with a graphical user interface (GUI) as the output for the user.

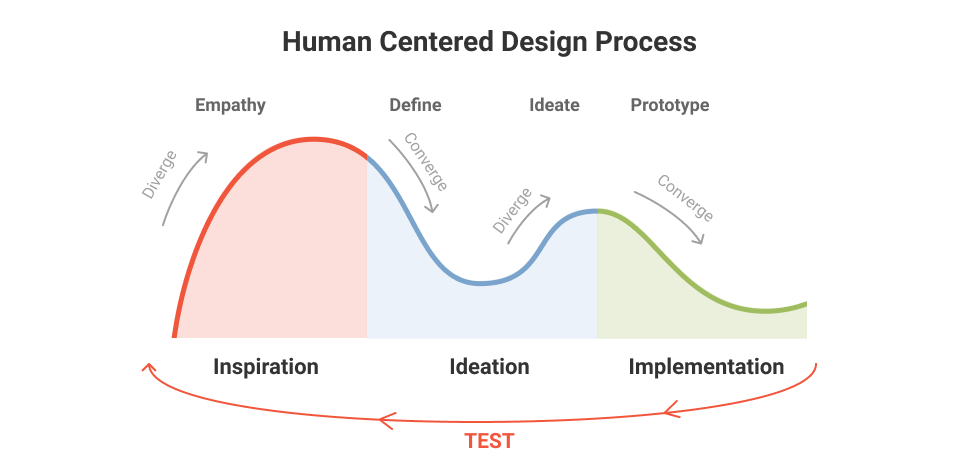

Traditionally we’ve thought about GUI and content design in silos; we even specialize designers and creatives along these lines. In a similar vein, modalities have also been siloed. Past processes would see the UX developed separately from the content, and then the two would be mashed together. In multimodal design, instead of building functionality one modality at a time, no input or output is treated separately or excluded. Developing all aspects of the UX together allows the best modality for the context or circumstance to emerge naturally as the interaction unfolds.

Context is Everything in Multimodal

What we sense, what information we need to understand and operate smoothly, and what we expect to do, all change depending on whether we’re having a conversation at a party, making dinner, driving a car, or reading a text message. The patterns of how these bundled expectations and abilities come together are called modalities. Having multiple sensory options is important when considering that all senses might not be simultaneously available, either temporarily or permanently. Providing inputs for all channels can increase accessibility and improve the reliability of the activity or information.

Multimodal Conversation Design: Inputs & Outputs

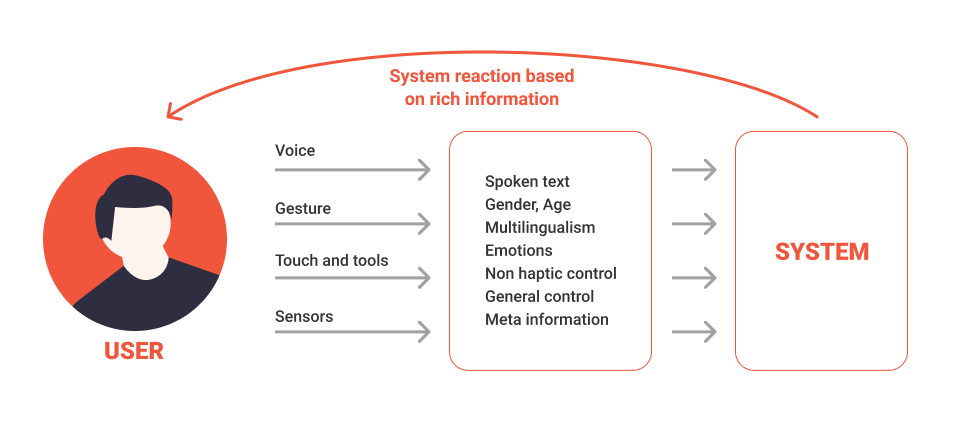

On a human level, communication isn’t limited to text and speech. We rely on a wide range of signals that include everything from hand gestures to eye movement to body language.

So why do conversation design interactions continue to focus primarily on text and speech? Lived experience clearly tells us we should not be limited to these modalities alone. For example, a chatbot should be capable of showing dynamic content (graphics, links, maps) to provide the best possible response. Some chatbots can also take commands via voice and then return the results as text or in a synthesized voice.

A similar approach that successfully meets people’s inclination towards multimodal interactions is voice search. This is the trend where we speak into our browser rather than type and receive our search results in return. In this respect, we can think of Google as the biggest single-turn chatbot in the world. Technology has evolved from searching with keywords and abbreviated phrases to the ability to search using natural language.

From a user’s perspective, a voice-controlled interface is appealing in its straightforward ease of use; the user wants something and simply asks for it. This is commonly referred to as the intent. The user then expects the system to come back with a relevant response, either as an action or information. Consuming information aurally requires more cognitive load from users, which suggests clarity and attention are more easily achieved through multimodal design.

In addition to text and speech, the most commonplace input modalities for interacting with a system include use of a mouse, keyboard, or touch/tap. Newer modalities, including gestural and physiological, are continuing to expand their use cases. A user should be able to provide information to a system in the most efficient and effortless way possible.

How is the optimal approach determined? Context. Next we’ll learn about contextualized best practices, what the future might hold, and use cases for multimodal conversation design.

Contextual Design: Building Relevant and Customized Experiences

Context in multimodal conversation design is essential. We can’t just think in chat, or just think in voice, or visuals alone. We have to think about how they complement each other and which one best serves the user in any given moment. Where is the user? What are they trying to accomplish? These should be the main considerations when working with multimodal design.

Knowing where users are while they progress through different steps of their journey can reveal both pain points and opportunities in design. This is especially true if the user journey requires switching between devices. Careful review of the user journey helps with understanding the advantages of one modality over another at various points. This type of review should take into consideration the entire user experience from beginning to end and map how those interactions come to life using a combination of modalities.
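As a rough illustration of how a journey review might translate into design rules, the sketch below picks output modalities from a snapshot of the user's situation. The `Context` fields, the `choose_output` function, and the rules themselves are assumptions made for this example, not a prescribed method; a real product would derive its rules from user research.

```python
from dataclasses import dataclass
from enum import Enum


class Modality(Enum):
    VOICE = "voice"
    SCREEN = "screen"


@dataclass(frozen=True)
class Context:
    """Hypothetical snapshot of the user's situation at one journey step."""
    hands_busy: bool = False   # e.g. cooking
    eyes_busy: bool = False    # e.g. driving
    in_public: bool = False    # speech could be overheard or disturb others
    sensitive: bool = False    # the step involves private information
    has_screen: bool = True    # the current device can display content


def choose_output(ctx: Context) -> list[Modality]:
    """Return output modalities in priority order (illustrative rules only)."""
    # Private data or a public setting: keep the response off the speaker.
    if (ctx.sensitive or ctx.in_public) and ctx.has_screen:
        return [Modality.SCREEN]
    # Eyes are busy or there is no screen: voice is the only usable channel.
    if ctx.eyes_busy or not ctx.has_screen:
        return [Modality.VOICE]
    # Hands are busy but eyes are free: lead with voice, support with visuals.
    if ctx.hands_busy:
        return [Modality.VOICE, Modality.SCREEN]
    # Default: lead with visuals and offer voice as a complement.
    return [Modality.SCREEN, Modality.VOICE]


driving = Context(hands_busy=True, eyes_busy=True, has_screen=False)
in_store = Context(in_public=True)
cooking = Context(hands_busy=True)

for name, ctx in [("driving", driving), ("in store", in_store), ("cooking", cooking)]:
    print(name, [m.value for m in choose_output(ctx)])
```

The point of the sketch is the ordering of the rules: the user's circumstances decide the modality, rather than the product's preferred interface.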

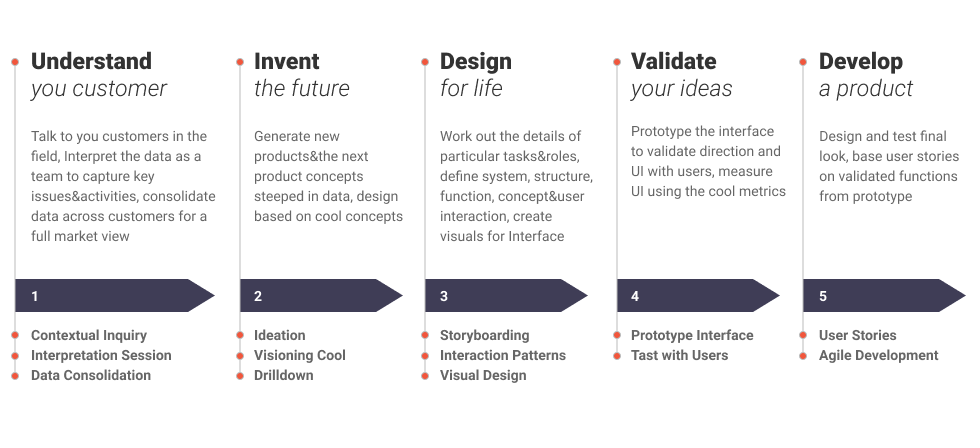

Cultivating a sense of safety and security for users in their interactions is also crucial for driving engagement that leads to customer loyalty. A multimodal experience can help achieve this. Speaking aloud and receiving an audio response make voice-first interactions inherently more public. If a use case involves the need for users to share private or sensitive information, combining voice-first with displaying text and visual inputs might be more effective.

Contextual Design: The Process In Detail

It’s vital to recognize that this is not a case of designing chat or voice-first and then simply layering on graphical elements. Best practice requires us to understand and prioritize what a user is trying to do and support that goal instead of pushing or even forcing them to engage with a product or preferred interface. Often this means playing a supportive role. It requires critical reflection and honesty about whether a brand is truly committed to creating the most frictionless interaction for a user, or whether it is justifying choices that inadvertently add to the user’s frustration. Which leads us to…

Multimodal Is More Than Flash

Multimodal conversation design is intended to combine multiple inputs and outputs to improve a user’s experience. Designers make life easier for users by incorporating and automating actions through different modalities. If only one modality were available, the user experience would suffer whenever that modality did not suit the moment, and the design would “fail” in the mind of the user.

That said, when everything in a design competes for your attention, nothing wins. Too many elements in a user’s journey can push the feel of the UX into gimmicky territory. In multimodal conversation design, where the audible, visible, and tactile all compete for attention, striking that balance is less straightforward. Each modality has its advantages. The key lies in emphasizing one at a time.

Each element must be intentional. It should have a purpose, not just flash. It’s not just about what’s available, it’s about whether what’s available is even relevant or appropriate. If users are distracted by an element, they’re not concentrating on the intended user journey. Intentionally designed experiences work, while others can immediately come off as overwhelming. There’s an art to bringing together visuals and audio to create seamless communications.

Well-thought-out multimodal conversation design also prioritizes accessibility. The power of a multimodal experience should not be underestimated. It can reduce difficulties, improve independence, and include more people in the conversation. This is why it’s critical to ask the right questions at the beginning of the design process to determine if any users are being excluded from the product. Can all users of a multimodal journey complete a task or get from A to B without major roadblocks? Answering these questions will help demonstrate that a brand cares about the needs of all of its users.

Multimodal Conversation Design: A Not So Simple Use Case

Let’s walk through a simple example of how multimodal design can improve a user’s experience for something like making a recipe. While it may seem simple on the surface, it involves multiple visual, audio, and touch interactions, sometimes simultaneously.

- User verbally asks their smart display for a recipe.

- Smart display verbalizes that it can help and provides visual recipe results.

- User manually scrolls through options on the smart display, taps for more details, and reads the recipe.

- User verbally updates the voice assistant’s shopping list for recipe ingredients.

- Smart display verbally and visually confirms the shopping list update.

- While driving to the store, the user remembers something else they need and uses an in-car smart assistant to verbally make an addition to their shopping list.

- At the grocery store, the user doesn’t want to disturb others, so they use the mobile app’s GUI to read their shopping list, tapping to check off items as they go.

- Back at home, the smart display remembers the selected recipe and both verbally and visually explains the recipe steps.

- With their hands busy and messy, the user can verbally ask the smart display to repeat a step, set a timer, play music, etc.

Think of the roadblocks this same user would experience through a rigidly audio or visual-only interface. Where might frustration arise without the ability to obtain or convey information within the most context-informed modality?
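One way to picture what makes this journey work is a single piece of shared state that every device reads and writes, whichever modality the user happens to be using. The sketch below is a minimal illustration under that assumption; the `ShoppingList` class, the device labels, and the ingredient names are all hypothetical and not taken from any specific product.

```python
from dataclasses import dataclass, field


@dataclass
class ShoppingList:
    """Hypothetical shared state that every device reads and writes."""
    items: dict[str, bool] = field(default_factory=dict)  # name -> checked off
    history: list[tuple[str, str, str]] = field(default_factory=list)

    def add(self, item: str, device: str, modality: str) -> None:
        # Adding an item that is already listed keeps its current state.
        self.items.setdefault(item, False)
        self.history.append((device, modality, f"add {item}"))

    def check_off(self, item: str, device: str, modality: str) -> None:
        if item not in self.items:
            raise KeyError(f"{item!r} is not on the list")
        self.items[item] = True
        self.history.append((device, modality, f"check off {item}"))

    def remaining(self) -> list[str]:
        return [name for name, done in self.items.items() if not done]


shopping = ShoppingList()

# At home: the user speaks to the smart display.
shopping.add("tomatoes", device="smart display", modality="voice")
shopping.add("basil", device="smart display", modality="voice")

# In the car: the same list, updated by voice through the in-car assistant.
shopping.add("olive oil", device="car assistant", modality="voice")

# In the store: the same list again, updated silently by touch.
shopping.check_off("tomatoes", device="mobile app", modality="touch")

print(shopping.remaining())  # ['basil', 'olive oil']
```

Because the list is independent of any one interface, the user can switch modality at each step without losing progress, which is the property the journey above relies on.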

How Multimodal Design Will Impact the Future of Customer Interactions

The newest breed of Generative AI models has already integrated multiple modalities, such as chat, voice, and images. For instance, ChatGPT, powered by GPT-4o, can accept a combination of text, audio, image, and video as input and respond with text, audio, and images. This capability makes interactions richer and more context-aware. Imagine snapping a picture of a pair of sneakers and instantly receiving a link to buy the same pair, or uploading an audio clip in Spanish and having it immediately transcribed and translated into English. That’s the current reality.

Despite the excitement surrounding GenAI, its multimodal abilities have not yet been widely applied across enterprises. Nevertheless, the potential is enormous. In the future, we can expect to see such interfaces revolutionize customer support by enabling virtual assistants to handle complex queries involving text, voice, and images. In retail, these tools could enhance the shopping journey by allowing customers to upload photos of desired products and get personalized recommendations. In healthcare, multimodal AI could assist in diagnostics by analyzing medical images alongside patient history and symptoms.

As these technologies evolve, businesses must determine how to integrate them into their core services to provide the best multimodal experience. The goal is to create natural and realistic conversations and bridge the gap between technology and human communication.

Multimodal Design: Excellent Branding Opportunities

As experiences become more interactive and multimodal they will become more shareable in the design and product world. This means big opportunities for brands’ conversational experiences to get the customer and industry attention they deserve as they become more consumable for broader audiences. In turn, customers will come to expect multimodal interactions wherever they experience conversation design.

With this in mind, a design team’s skill sets will need to expand as it’s no longer solely about designing for one modality; it’s about designing for multimodal functionality and Conversational AI experience. This means determining how the visual interface interacts with the context provided by text and speech interfaces, as well as how these different forms of interaction harmonize with one another.

Ultimately, multimodal design’s purpose is to contextualize and offer up options to provide users with the best interaction for the moment they’re in. Having easily navigated an experience, customers should walk away satisfied with both the specific interaction and the overall brand. And when it comes down to it, hasn’t that always been the objective?
