At Master of Code, we’ve been working with OpenAI’s GPT-3.5-Turbo model since its launch. After many successful implementations, we wanted to share our best practices on prompt engineering the model for your Generative AI solution.

We realize that newer models may have launched since we published this article, so it’s important to note that these prompt engineering approaches apply to GPT-3.5-Turbo, OpenAI’s latest model at the time of writing.

What is GPT prompt engineering?

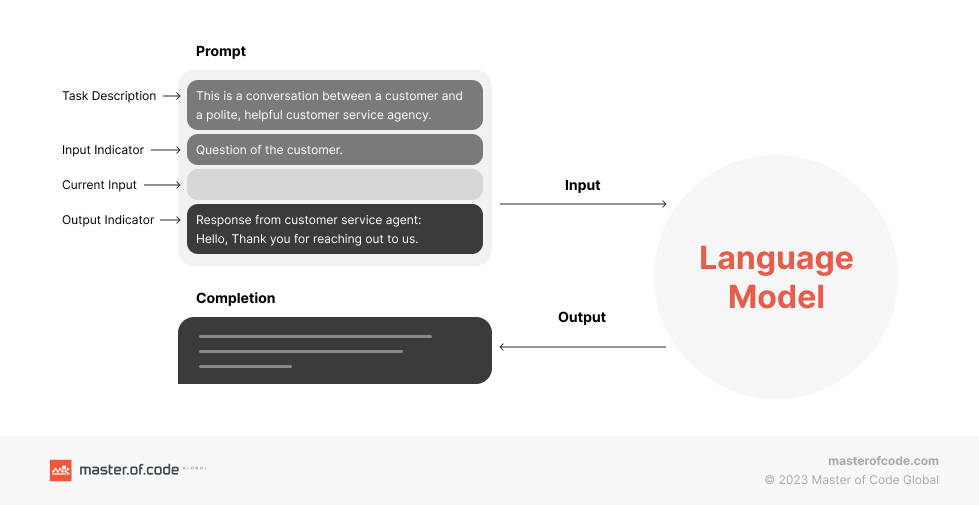

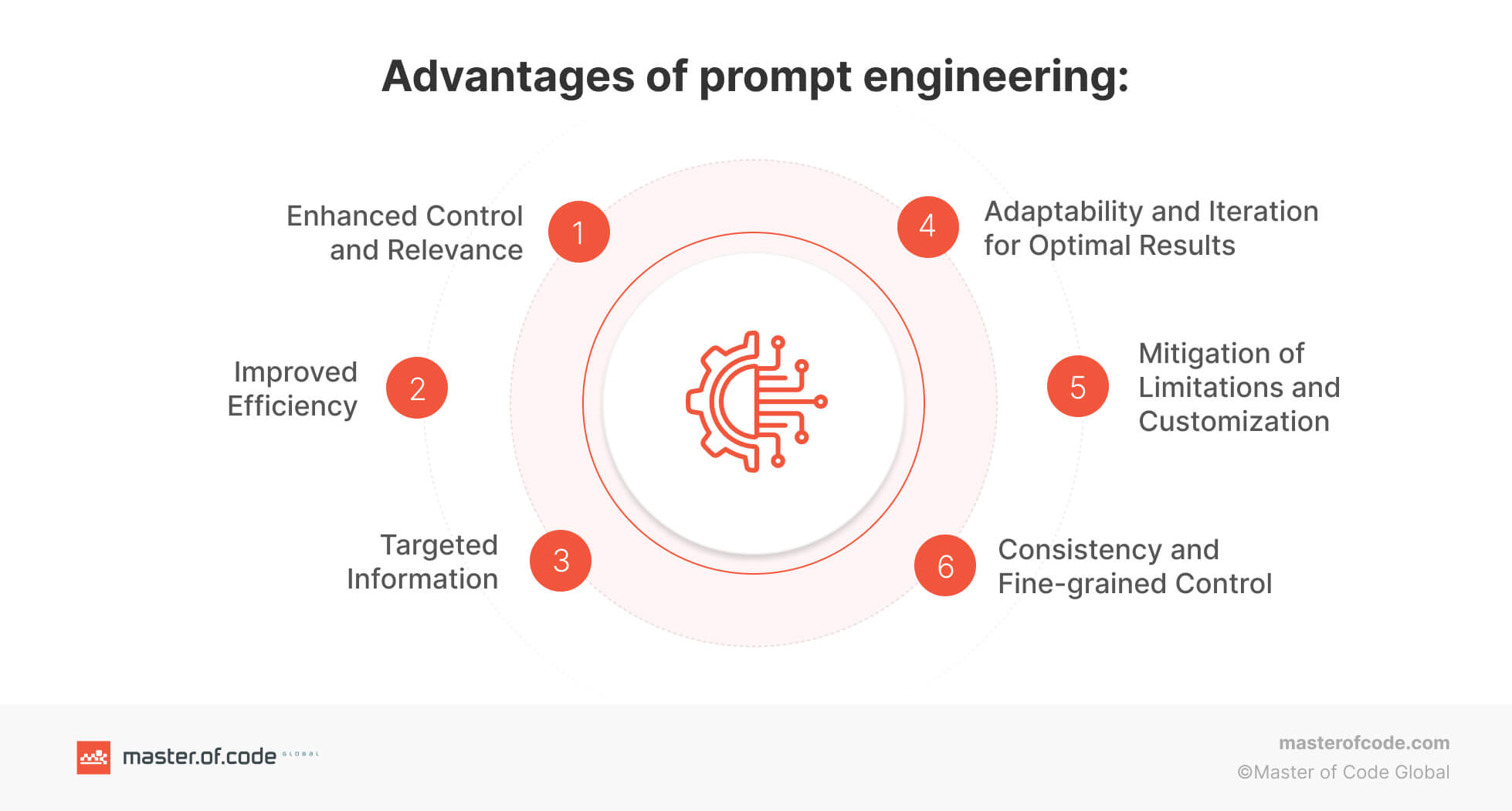

GPT prompt engineering is the practice of strategically constructing prompts to guide the behavior of GPT language models, such as GPT-3, GPT-3.5-Turbo or GPT-4. It involves composing prompts in a way that will influence the model to generate your desired responses.

By leveraging prompt engineering techniques, you can elicit more accurate and contextually appropriate responses from the model. GPT prompt engineering is an iterative process that requires experimentation, analysis, and thorough testing to achieve your desired outcome.

GPT Prompt Engineering Elements

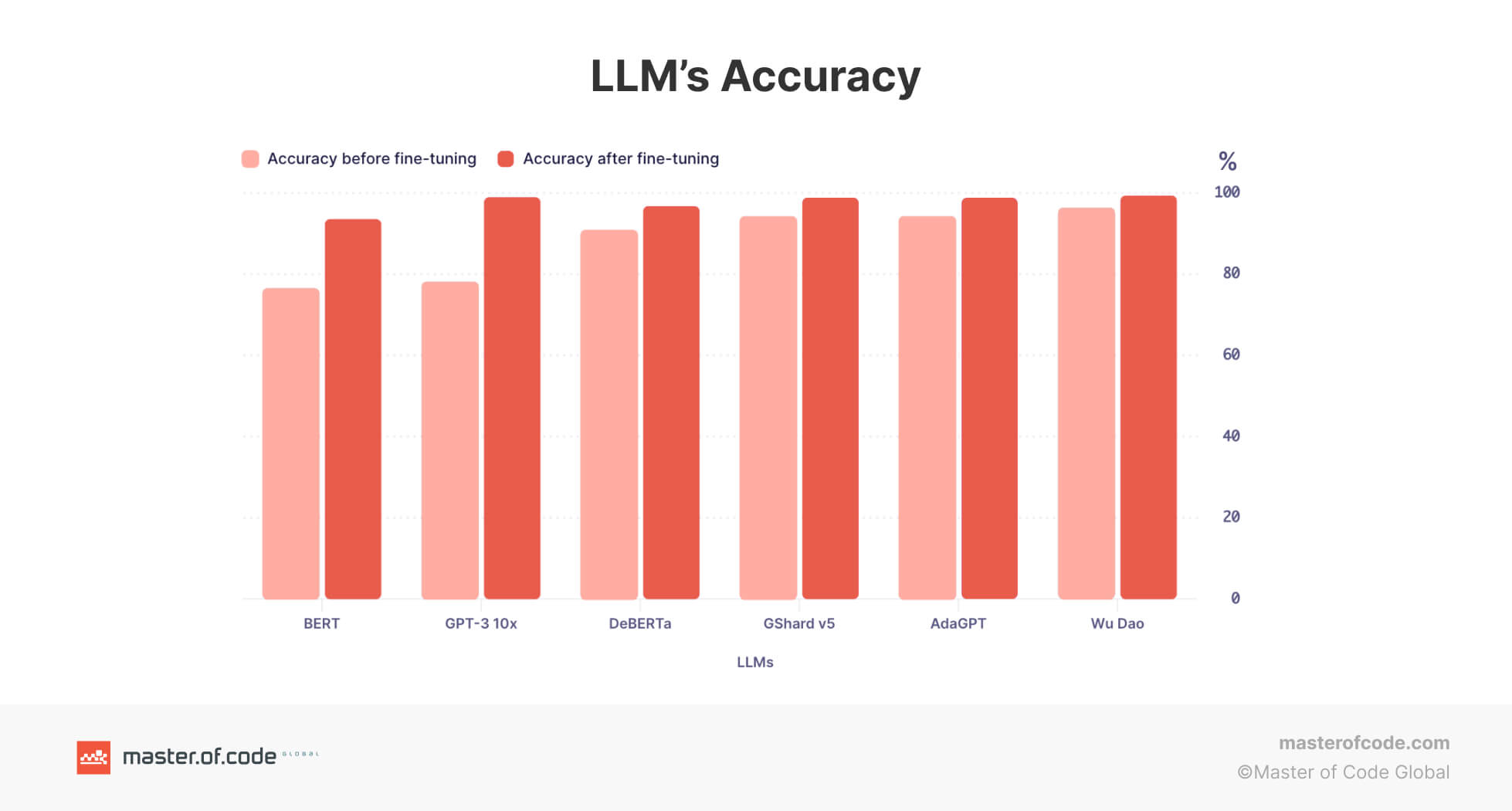

Generative AI models, such as ChatGPT, are designed to understand and generate responses based on the prompts they’re given. The results you get depend on how well you structure your initial prompts. A thoughtfully structured prompt will guide the Generative AI model to provide relevant and accurate responses. In fact, recent research has demonstrated that the accuracy of Large Language Models (LLMs), such as GPT or BERT, can also be enhanced through fine-tuning, which retrains the model on additional data and complements prompt engineering rather than being one of its techniques.

Here’s a list of elements that make up a well-crafted prompt. These elements can help you maximize the potential of the Generative AI model.

- Context: Provide a brief introduction or background information to set the context for the conversation. This helps the Generative AI model understand the topic and provides a starting point for the conversation. For example: “You are a helpful and friendly customer support agent. You focus on helping customers troubleshoot technical issues with their computer.”

- Instructions: Clearly state what you want the Generative AI model to do or the specific questions you’d like it to answer. This guides the model’s response and ensures it focuses on the desired topic. For example: “Provide three tips for improving customer satisfaction in the hospitality industry.”

- Input Data: Include specific examples that you want the Generative AI model to consider or build on. For example: “Given the following customer complaint: ‘I received a damaged product,’ suggest a suitable response and reimbursement options.”

- Output Indicator: Specify the format you want the response in, such as a bullet-point list, paragraph, code snippet, or any other preferred format. This helps the Generative AI model structure its response accordingly. Example: “Provide a step-by-step guide on how to reset a password, using bullet points.”

Include these elements to craft a clear and well-defined prompt for Generative AI models to ensure relevant and high-quality responses.
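As a minimal sketch of how the four elements can be combined, the snippet below joins them into a single prompt string. The function name `build_prompt` and the blank-line separator are our own illustrative assumptions, not part of any OpenAI API; the sample text is adapted from the examples above.

```python
def build_prompt(context: str, instructions: str,
                 input_data: str = "", output_indicator: str = "") -> str:
    """Join the four prompt elements in order, skipping any that are empty."""
    parts = [context, instructions, input_data, output_indicator]
    return "\n\n".join(part.strip() for part in parts if part.strip())


prompt = build_prompt(
    context="You are a helpful and friendly customer support agent.",
    instructions="Suggest a suitable response and reimbursement options.",
    input_data="Customer complaint: 'I received a damaged product.'",
    output_indicator="Answer as a bullet-point list.",
)
print(prompt)
```

Keeping the elements as separate arguments makes it easier to revise one of them without touching the others.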

Understanding the basics of the GPT-3.5-Turbo model

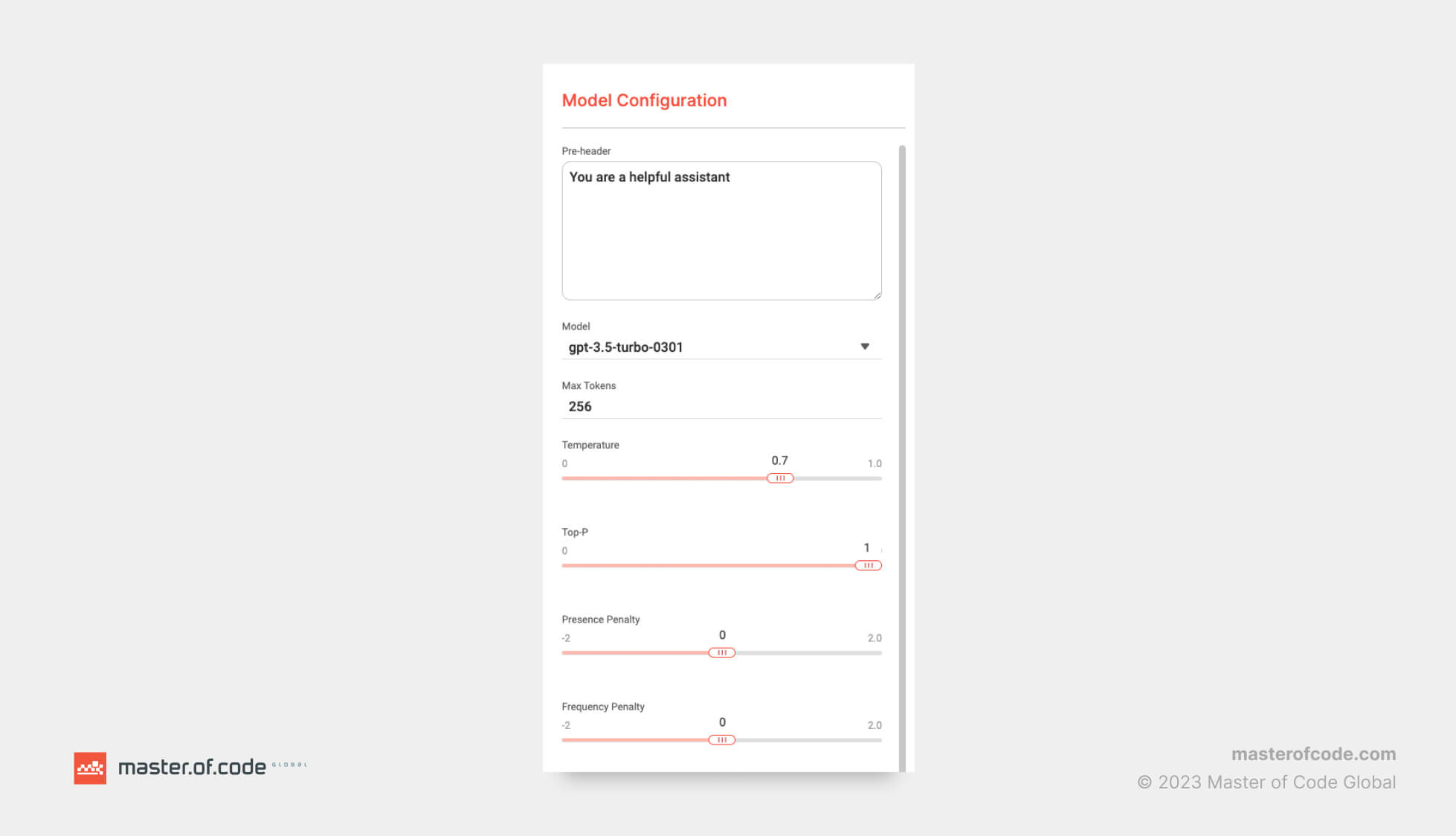

Before we jump right into GPT prompt engineering, it’s important to understand what parameters need to be set for the GPT-3.5-Turbo model.

Pre-prompt (Pre-header): here you’ll write your set of rules and instructions to the model that will determine how it behaves.

Max tokens: this limits the length of the model’s responses. To give you an idea, 100 tokens is roughly 75 words.

Temperature: determines how varied your chatbot’s responses are. Set it higher for more varied responses, and lower for more consistent ones. A value of 0 makes the output close to deterministic, so the same prompt will almost always produce the same response. Conversely, a value of 1 makes the model much more creative.

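Top-P: similar to temperature, it determines how broad the chatbot’s vocabulary will be. It limits the model to the most likely tokens whose combined probability adds up to P, so a lower value narrows the choice of words. We recommend keeping Top-P at 1 and adjusting temperature for best results.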

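Presence and Frequency Penalties: these discourage the model from repeating the same words in its response. The presence penalty applies to any token that has already appeared, while the frequency penalty grows with how often the token has appeared. From our testing, we recommend keeping these parameters at 0.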
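To build intuition for temperature and Top-P, here is a small, self-contained sketch of how these two settings are commonly applied when sampling the next token. It illustrates the general technique (a softmax with temperature, followed by nucleus filtering) and is not OpenAI’s actual implementation; the token scores are made up for illustration.

```python
import math


def softmax_with_temperature(logits: dict[str, float], temperature: float) -> dict[str, float]:
    """Turn raw scores into probabilities; a lower temperature sharpens the distribution."""
    if temperature <= 0:
        raise ValueError("temperature must be positive in this sketch")
    scaled = {token: score / temperature for token, score in logits.items()}
    peak = max(scaled.values())  # subtract the max for numerical stability
    exps = {token: math.exp(value - peak) for token, value in scaled.items()}
    total = sum(exps.values())
    return {token: value / total for token, value in exps.items()}


def top_p_filter(probs: dict[str, float], top_p: float) -> dict[str, float]:
    """Keep the most likely tokens until their cumulative probability reaches top_p, then renormalize."""
    kept: dict[str, float] = {}
    cumulative = 0.0
    for token, prob in sorted(probs.items(), key=lambda item: item[1], reverse=True):
        kept[token] = prob
        cumulative += prob
        if cumulative >= top_p:
            break
    total = sum(kept.values())
    return {token: prob / total for token, prob in kept.items()}


# Hypothetical next-token scores, invented for this example.
logits = {"refund": 2.0, "replacement": 1.0, "apology": 0.5, "coupon": 0.1}

for temperature in (0.4, 1.0):
    probs = softmax_with_temperature(logits, temperature)
    print(temperature, {token: round(p, 3) for token, p in probs.items()})

# With top_p below 1, the least likely tokens are dropped before sampling.
print(top_p_filter(softmax_with_temperature(logits, 1.0), top_p=0.8))
```

At the lower temperature, most of the probability concentrates on the top-scoring token, which is why responses become more consistent.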

Setting up your parameters

Now that you understand the available parameters, it’s time to set their values for your GPT-3.5 model. Here’s what’s worked for us so far:

Temperature: If your use case allows for high variability or personalization (such as product recommendations) from user to user, we recommend a temperature of 0.7 or higher. For more static responses, such as answers to FAQs on return policies or shipping rates, adjust it to 0.4. We’ve also found that with a higher temperature setting, the model tends to add 10 to 15 words on top of your word/token limit, so keep that in mind when setting your parameters.

Top-P: We recommend keeping this at 1, adjusting your temperature instead for the best results.

Max tokens: For short, concise responses (which in our experience is always best), choose a value between 30 and 50, depending on the main use cases of your chatbot.
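The values above can be collected into a request body along these lines. This is a sketch only: the field names follow the OpenAI Chat Completions request format, but placing the pre-header in the `system` message is our assumption, and `build_request_params` is a hypothetical helper. The snippet only builds the dictionary and does not call the API.

```python
import json


def build_request_params(pre_header: str, user_message: str, high_variability: bool) -> dict:
    """Assemble a Chat Completions-style request body from the recommended values."""
    return {
        "model": "gpt-3.5-turbo",
        "messages": [
            {"role": "system", "content": pre_header},  # assumption: pre-header sent as the system message
            {"role": "user", "content": user_message},
        ],
        # 0.7 for personalized use cases, 0.4 for static answers such as FAQs
        "temperature": 0.7 if high_variability else 0.4,
        "top_p": 1,
        "max_tokens": 50,  # upper end of the 30 to 50 range suggested above
        "presence_penalty": 0,
        "frequency_penalty": 0,
    }


faq_request = build_request_params(
    pre_header="You are a helpful and friendly customer support agent.",
    user_message="What is your return policy?",
    high_variability=False,
)
print(json.dumps(faq_request, indent=2))
```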

Writing the pre-prompt for the GPT-3.5-Turbo model

This is where you get to put your prompt engineering skills into practice. At Master of Code, we refer to the pre-prompt as the pre-header as there are already other types of prompting associated with Conversational AI bots. Below are some of our best tips for writing your pre-header.

Provide context and give the bot an identity

The first step in the prompt engineering process is to provide context for your model. Start by giving your bot an identity, including details of your bot persona such as name, characteristics, tone of voice, and behavior. Also include the use cases it must handle, along with its scope.

Example of weak context: You are a helpful customer service bot.

Example of good context: You are a helpful and friendly customer support agent. You focus on helping customers troubleshoot technical issues with their computer.

Frame sentences positively

We’ve found that using positive instructions instead of negative ones yields better results (i.e. ‘Do’ as opposed to ‘Do not’).

Example of negative framing: Do not ask the user more than 1 question at a time.

Example of positive framing: When you ask the user for information, ask a maximum of 1 question at a time.

Include examples or sample input

Include examples in your pre-header instructions for accurate intent detection. Examples are also useful when you want the output to be in a specific format.

Pre-header excluding an example: Ask the user if they have any dietary restrictions.

Pre-header including an example: Ask the user if they have any dietary restrictions, such as allergies, gluten-free, vegetarian, vegan, etc.

Be specific and clear

Avoid giving conflicting or repetitive instructions. Good pre-headers should be clear and specific, leaving no room for ambiguity. Vague or overly broad instructions in pre-headers can lead to irrelevant or nonsensical responses.

Vague example: When the user asks you for help with their technical issue, help them by asking clarifying questions.

Clear example: When the user asks you for help with their technical issue, you need to ask them the following mandatory clarifying questions. If they’ve already provided you with some of this information, skip that question and move on to the next one.

Conciseness

Specify the number of words the bot should include in its responses, avoiding unnecessary complexity. Controlling the output length is an important aspect of prompt engineering, as you don’t want the model to generate lengthy text that no one bothers to read.

Instruction that may yield lengthy responses: When someone messages you, introduce yourself and ask how you can help them.

Instruction that limits response length: When the dialogue starts, introduce yourself by name and ask how you can help the user in no more than 30 words.
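When testing, it can help to check responses against the word limit automatically. The sketch below counts whitespace-separated words and converts a word limit into an approximate token count using the rule of thumb from earlier (100 tokens is roughly 75 words). The helper names, the `tolerance` argument, and the sample reply (including the bot name “Max”) are hypothetical.

```python
def word_count(text: str) -> int:
    """Count whitespace-separated words."""
    return len(text.split())


def within_word_limit(response: str, limit: int, tolerance: int = 0) -> bool:
    """Check a response against a word limit, optionally allowing a few extra words."""
    return word_count(response) <= limit + tolerance


def words_to_tokens(words: int) -> int:
    """Rough estimate (rounded up) based on 100 tokens being about 75 words."""
    return (words * 100 + 74) // 75


reply = "Hi, I'm Max, your support assistant. How can I help you today?"
print(word_count(reply), within_word_limit(reply, limit=30))
print(words_to_tokens(30))
```

The token estimate is only an approximation; actual token counts depend on the tokenizer and the text.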

Order importance

Order your pre-header as follows: bot persona first, then scope, then intent instructions.
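One way to keep this order consistent is to assemble the pre-header from separate sections in code. The sketch below is illustrative: `build_pre_header` is a hypothetical helper, and the sample text reuses examples from this article.

```python
def build_pre_header(persona: str, scope: str, intent_instructions: list[str]) -> str:
    """Concatenate the sections in order: persona, scope, then intent instructions."""
    sections = [persona.strip(), scope.strip()]
    sections.extend(instruction.strip() for instruction in intent_instructions)
    return "\n".join(section for section in sections if section)


pre_header = build_pre_header(
    persona="You are a helpful and friendly customer support agent.",
    scope="You focus on helping customers troubleshoot technical issues with their computer.",
    intent_instructions=[
        "When the dialogue starts, introduce yourself by name and ask how you can help the user in no more than 30 words.",
        "When you ask the user for information, ask a maximum of 1 question at a time.",
    ],
)
print(pre_header)
```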

Synonyms

Experiment with synonyms to achieve desired behavior, especially if you find the model is not responding to your instructions. Finding an effective synonym could make the model respond better, producing the desired output.

Collaborate

When it comes to prompt engineering, having your AI Trainers and Conversation Design teams collaborate is crucial. This ensures that both the business and user needs are accurately translated into the model, tone of voice is consistent with the brand, and instructions are clear, concise and stick to the scope.

Testing your GPT-3.5-Turbo model

Now that you’ve set up your GPT-3.5 model for success, it’s time to put your prompt engineering skills to the test. As mentioned before, prompt engineering is iterative, which means you’ll need to test and revise your pre-header – the model is most likely not going to behave how you intended the first time around. Here are some simple but important tips for testing and updating your pre-header:

- Change one thing at a time. If you see your model isn’t behaving how you expect, don’t change everything at once. Making one change at a time allows you to test each change and keep track of what’s working and what isn’t.

- Test every small change. The smallest update could cause your model to behave differently, so it’s crucial to test it. That means even testing that comma you added somewhere in the middle, before you make any other changes.

- Don’t forget you can also change other parameters, such as temperature. You don’t only have to edit the pre-header to get your desired output.

- Need help getting better results? You can hire prompt engineers to guide your setup, refine your prompts, and move faster with fewer missteps.
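To enforce the “one change at a time” rule during testing, you could compare each new configuration with the previous one before running your tests. The sketch below assumes a configuration is stored as a plain dictionary; the helper names and the field names in the sample configuration are hypothetical.

```python
def changed_fields(previous: dict, current: dict) -> list[str]:
    """List the settings whose values differ between two configurations."""
    keys = sorted(set(previous) | set(current))
    return [key for key in keys if previous.get(key) != current.get(key)]


def is_single_change(previous: dict, current: dict) -> bool:
    """True when exactly one setting differs, so a behavior change can be attributed to it."""
    return len(changed_fields(previous, current)) == 1


baseline = {
    "pre_header": "You are a helpful and friendly customer support agent.",
    "temperature": 0.4,
    "top_p": 1,
    "max_tokens": 50,
}
candidate = dict(baseline, temperature=0.7)               # one change: fine to test
too_many = dict(baseline, temperature=0.7, max_tokens=30)  # two changes: split them up

print(changed_fields(baseline, candidate), is_single_change(baseline, candidate))
print(changed_fields(baseline, too_many), is_single_change(baseline, too_many))
```

Because the pre-header is compared as a whole string, even a single added comma counts as a change.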

And finally, if you think you’ve prompt-engineered your pre-header to perfection and the bot is behaving as expected, don’t be surprised if there are outliers in your testing data where the bot does its own thing every now and then. This is because Generative AI models can be unpredictable. To counter this, we use injections. These can override pre-header instructions and correct the model’s behavior. They’re sent to the model from the backend as an additional guide, and can be used at any point within the conversation.
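As a rough illustration of the idea, an injection could be modeled as an extra instruction that the backend appends to the conversation before the next model call. This sketch assumes the injection is sent as a `system`-role message in a Chat Completions-style message list; that mechanism, the helper name `inject_instruction`, and the sample conversation are assumptions for illustration only.

```python
def inject_instruction(messages: list[dict], instruction: str) -> list[dict]:
    """Return a copy of the conversation with an extra system message appended."""
    return messages + [{"role": "system", "content": instruction}]


conversation = [
    {"role": "system", "content": "You are a helpful and friendly customer support agent."},
    {"role": "user", "content": "My laptop won't turn on."},
    {"role": "assistant", "content": "Is it plugged in? Does the power light come on? When did it last work?"},
    {"role": "user", "content": "It's plugged in."},
]

# The bot asked several questions at once, so the backend adds a corrective instruction.
corrected = inject_instruction(
    conversation,
    "When you ask the user for information, ask a maximum of 1 question at a time.",
)
print(len(conversation), len(corrected))
```

The function returns a new list rather than modifying the original, so the stored conversation history stays unchanged.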

By following these GPT prompt engineering best practices, you can enhance your GPT model and achieve more accurate and tailored responses for your specific use cases.