How often do you approve digital projects without knowing their full cost or the risk of them spiraling out of control?

With Generative AI, that risk grows. It can become your most valuable competitive edge or a long-term drain on budget and resources. The outcome depends entirely on how you plan, scale, and invest. What makes the difference is a clear, well-matched strategy.

The market is already moving. More than 16,500 companies and 6,000 startups are building Generative AI solutions. Together, they’ve created 944,000 jobs, with over 151,000 added in just the last year. The pressure to act is real, but moving fast without a plan can do more harm than good.

For many executives, the hardest part isn’t choosing the use case. It’s understanding what it will actually cost. Pricing models are hard to compare, fees are often buried, and real-world examples include ambitious projects that stalled and burned through capital.

This article is here to fix just that. Our perspective is built on years of industry experience and the delivery of over 1,000 digital projects, including AI solutions. You’ll get clear benchmarks of the cost of Generative AI, real usage scenarios, and practical models that show what different AI strategies will mean for your budget today and at scale.


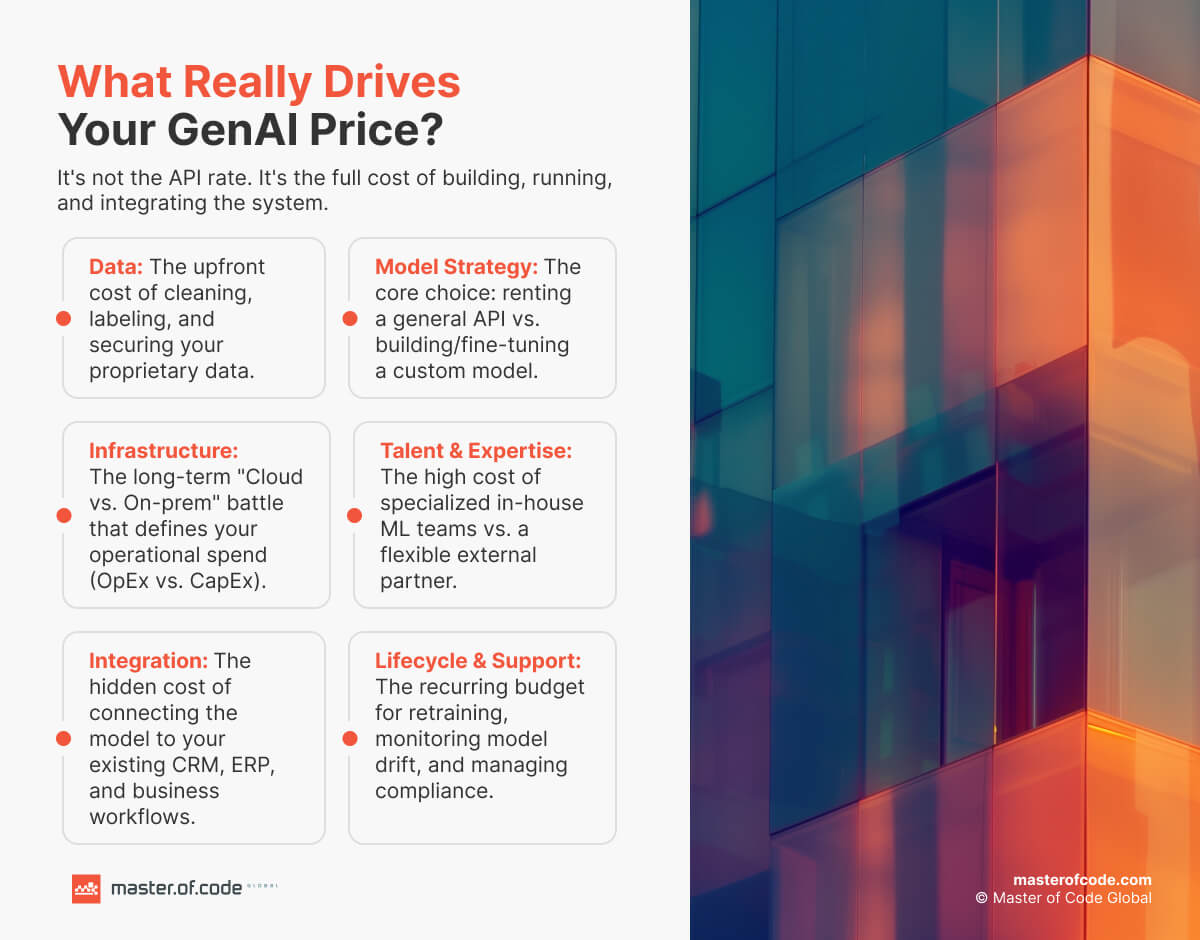

Deconstructing the Generative AI Price Tag

The real cost of technology is rarely what you see on a pricing page. API rates and compute spend are easy to quote, but they often mask the structural expenses that drive long-term total cost of ownership (TCO). To make AI sustainable, businesses need to treat it as a strategic architecture built from interconnected layers: data, model strategy, infrastructure, talent, integration, and operational lifecycle.

Data

Most businesses already have the data advantage – they just haven’t monetized it yet. AI development allows you to extract lasting value from internal sources: customer support transcripts, CRM records, process documentation, and more. But raw datasets aren’t usable out of the box. One of the main Generative AI costs lies in preparing them: labeling, cleaning, de-duplicating, and securing access.

What matters is strategic data curation – identifying which data truly enhances model performance and business outcomes.

- Proprietary datasets become long-term differentiators. Once processed and embedded into your AI system, they create defensible knowledge assets that don’t depreciate.

- In regulated industries like Healthcare or Banking, embedding compliance (e.g., HIPAA, GDPR) into your data handling from the start avoids retroactive fixes, fines, and reputational risks.

This is the layer where upfront investment delivers compounding returns: better model performance, fewer hallucinations, and reduced dependence on external data sources.

Model Strategy

The core architectural decision is whether you’ll rent general-purpose intelligence or build task-specific capability.

- API models offer speed and convenience, especially at low volumes. But they charge per token, and usage-based billing can escalate quickly as adoption grows.

- Fine-tuning an existing model gives more control over tone, behavior, and efficiency, with relatively low upfront cost. However, inference on a tuned model typically costs 50–100% more per token than the base model, depending on the provider.

- Custom hybrid setups, like Retrieval-Augmented Generation (RAG), allow you to layer a smaller open-source model over your proprietary knowledge base. This reduces spend while increasing control over security and output accuracy.

Model cost is not linear. At scale, even a minor optimization in model routing or output length can lead to six-figure annual savings.

Infrastructure

Where your AI system runs – cloud, on-prem, or hybrid – shapes more than performance. It defines your long-term Generative AI cost structure, security posture, and compliance readiness.

- Cloud GPU rental is ideal for early-stage projects. It’s elastic, fast to deploy, and easy to right-size. But over time, it becomes a significant recurring expense.

- On-prem or dedicated GPU hosting shifts the cost from OpEx (operational expense) to CapEx (capital expense). For steady, high-volume workloads, this often delivers better unit economics, but only if properly maintained and staffed.

For example, running an 8x A100 setup continuously is estimated at over $500,000/year on a major cloud platform. The same setup on a specialized GPU host can cost closer to $125,000, depending on service-level requirements.
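Converting between annual and hourly figures makes quotes like these easier to compare across vendors. The sketch below assumes flat hourly billing and 24/7 operation (8,760 hours a year), with no reserved-instance or committed-use discounts; the function names are illustrative.

```python
# Assumption: flat hourly billing, 24/7 operation, no committed-use discounts.
HOURS_PER_YEAR = 24 * 365  # 8,760 hours
GPUS_PER_NODE = 8          # the 8x A100 setup from the example


def annual_cost(hourly_rate: float, hours: float = HOURS_PER_YEAR) -> float:
    """Annual spend for a node billed by the hour."""
    return hourly_rate * hours


def implied_hourly_rate(annual: float, hours: float = HOURS_PER_YEAR) -> float:
    """Hourly node rate implied by an annual figure."""
    return annual / hours


for label, annual in [("major cloud", 500_000), ("specialized host", 125_000)]:
    node_rate = implied_hourly_rate(annual)
    print(f"{label}: ~${node_rate:,.2f}/node-hour, ~${node_rate / GPUS_PER_NODE:,.2f}/GPU-hour")
```

Read this way, a $500,000 annual figure implies roughly $57 per node-hour (about $7 per GPU-hour), while $125,000 implies roughly $14 per node-hour, which is the number to hold up against a provider's price sheet.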

The right decision depends on projected usage, latency requirements, and data governance needs. The wrong choice locks you into infrastructure costs that don’t align with your growth.

Talent & Expertise

Hiring an in-house AI team comes with significant long-term commitments. In the United States, a machine learning engineer earns around $158,000 per year on average, while an experienced MLOps engineer can earn anywhere from $133,000 to over $200,000 annually. When you add data scientists, privacy and compliance experts, DevOps support, and account for recruitment, onboarding, and retention overhead, the total annual investment can easily exceed $1 million for a fully staffed internal team.

This is why many enterprises choose to partner with specialized Generative AI development firms. A qualified vendor brings a cross-functional team with real-world delivery experience, ready to step in without the ramp-up time or overhead. It allows you to convert fixed staffing costs into predictable project-based investments that align with business outcomes. More importantly, it reduces risk. You get access to vetted processes, reusable components, and the ability to scale capacity up or down based on real demand, not headcount.

Integration

A powerful model that lives outside your core systems won’t drive real value. Integration is where technology meets business processes.

Whether it’s syncing AI outputs with your CRM, triggering workflows in ERP, or surfacing insights to customer service agents in real time, these tasks require secure, audited APIs, identity management, versioning, and uptime guarantees. None of that comes out of the box.

The cost of making AI usable – error handling, fallback logic, model monitoring, and orchestration layers – is often higher than the model access itself. Businesses that overlook this layer risk launching “pilots” that never graduate to production, or tools that no one adopts because they don’t fit into how teams already work.
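To make "fallback logic" concrete, here is a minimal sketch of one common pattern: retry a model with exponential backoff, then fall back to the next model in a chain. The model functions are hypothetical stand-ins for real provider SDK calls, and catching a bare `Exception` is a simplification; production code would catch provider-specific errors and log each failure.

```python
import time
from typing import Callable, List, Sequence

# A "model" here is any callable that takes a prompt and returns text.
# In practice it would wrap a provider SDK call; these are hypothetical stand-ins.
ModelFn = Callable[[str], str]


class AllModelsFailed(RuntimeError):
    """Raised when every model in the fallback chain has failed."""


def generate_with_fallback(
    prompt: str,
    models: Sequence[ModelFn],
    retries_per_model: int = 2,
    backoff_seconds: float = 0.5,
) -> str:
    """Try each model in order, retrying failures with exponential backoff."""
    errors: List[Exception] = []
    for model in models:
        for attempt in range(retries_per_model):
            try:
                return model(prompt)
            except Exception as exc:  # production code would catch provider-specific errors
                errors.append(exc)
                if attempt < retries_per_model - 1:
                    time.sleep(backoff_seconds * (2 ** attempt))
    last_error = errors[-1] if errors else None
    raise AllModelsFailed(f"{len(errors)} attempt(s) failed") from last_error


def flaky_primary(prompt: str) -> str:
    raise TimeoutError("primary model timed out")


def cheap_backup(prompt: str) -> str:
    return "[backup] " + prompt


print(generate_with_fallback("Summarize ticket #123", [flaky_primary, cheap_backup], backoff_seconds=0.0))
```

Even this small wrapper raises cost questions the pricing page never mentions: every retry is billed, and the backup model needs its own quality checks.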

Ongoing Support

Production AI systems degrade over time. Models drift, queries change, user expectations rise, and regulations evolve. Without scheduled retraining, performance declines quietly until the business impact becomes visible through lower conversions, incorrect outputs, or compliance issues.

Adding to that, some providers charge for re-embedding data, token caching, or even prompt retrieval. Such Generative AI costs often emerge only after a system hits production usage. Planning for these recurring expenses upfront prevents budget-draining “surprises” months down the line. A mature AI operation includes continuous evaluation, retraining, and proactive compliance updates.
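Continuous evaluation can start very simply: re-run a fixed "golden" question set on a schedule and flag the model when accuracy drops below a baseline. The sketch below assumes a hypothetical golden set and uses exact-match scoring, which is crude; real evaluations usually add rubric or semantic scoring.

```python
from typing import Callable, Sequence, Tuple

# Hypothetical golden set: (question, expected answer) pairs curated by the business.
GoldenSet = Sequence[Tuple[str, str]]


def exact_match_accuracy(generate: Callable[[str], str], golden: GoldenSet) -> float:
    """Share of golden questions the model answers exactly (case- and whitespace-insensitive)."""
    if not golden:
        raise ValueError("golden set is empty")
    hits = sum(
        generate(question).strip().lower() == expected.strip().lower()
        for question, expected in golden
    )
    return hits / len(golden)


def passes_regression_gate(current: float, baseline: float, max_drop: float = 0.02) -> bool:
    """False means accuracy fell by more than max_drop: trigger review or retraining."""
    return current >= baseline - max_drop


golden = [("What is our refund window?", "30 days"), ("Do we ship to Canada?", "Yes")]
answers = {"What is our refund window?": "30 days", "Do we ship to Canada?": "No"}
accuracy = exact_match_accuracy(lambda q: answers.get(q, ""), golden)
print(f"accuracy={accuracy:.2f}, passes gate: {passes_regression_gate(accuracy, baseline=1.0)}")
```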

Four Strategic Routes to Implementation

There is no universal “best” path for innovation. The right one for your company depends on scale, compliance needs, data maturity, and end goals. Below are four distinct routes, each with its own Generative AI cost structure, trade-offs, and tipping points. Use them as a framework for decision-making.

As a benchmark, companies whose GenAI deployments align with business value report an average return of 3.7x on each dollar invested.

The Express Lane: Closed-Source APIs

What it is

You access powerful foundation models in a pay-per-use manner via APIs (OpenAI, Anthropic, Google, etc.). You don’t manage infrastructure or model maintenance.

When it makes sense

- You need speed to market;

- You are experimenting, prototyping, or adding a non-critical feature;

- You don’t yet have scale or cannot yet bear fixed infrastructure spend.

Core cost dynamics

The apparent simplicity of “pay per token” hides deeper levers. A token is the fundamental unit that models use to process text. As a simple rule of thumb, 100 tokens equal roughly 75 words.
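The 100-tokens-to-75-words rule of thumb is easy to turn into a quick budgeting helper. This is a rough sketch only: actual counts depend on each provider's tokenizer and on the language and formatting of the text.

```python
import math

# Rule of thumb from the text: 100 tokens ≈ 75 words (English prose).
# Real counts depend on each provider's tokenizer, so treat this as a budgeting estimate.
def estimate_tokens(text: str) -> int:
    words = len(text.split())
    return math.ceil(words * 100 / 75)


def estimate_words(tokens: int) -> int:
    return math.floor(tokens * 75 / 100)


prompt = "Summarize the attached customer complaint and suggest a polite reply."
print(estimate_tokens(prompt), "tokens (estimated)")
print(estimate_words(2_000), "words fit in a 2,000-token prompt (estimated)")
```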

The true TCO is shaped by which model tier you use (Flagship, Balanced, Economy), how many tokens you consume (input + output), and which ancillary features you employ (tool invocation, context caching, multimodal processing). It’s shaped not just by usage but by architectural choices and scale.

Model tier pricing snapshot

Here’s a rough comparison of prices per million tokens (input / output), based on publicly available data (late 2025):

| Tier | Representative Models | Input $/1M | Output $/1M |
|---|---|---|---|
| Flagship | GPT-5 Pro, Claude 4.1 Opus | $15.00 | $120.00 (or $75 for Opus) |
| Balanced | Gemini 2.5 Pro (>200k), GPT-5, Claude 4.5 Sonnet, Mistral Large 2 | ~$1.25 – $3.00 | ~$9 – $15 |
| Economy | Claude 3.5 Haiku, Gemini 2.5 Flash-Lite, GPT-5 nano | ~$0.05 – $0.80 | ~$0.40 – $4.00 |

Because generating output tokens takes more compute than processing input, output is usually priced several times higher. Model choice is your biggest lever for Generative AI cost control.

Scenario modeling: prototype vs production

- Prototype / MVP: Suppose you run 1,000 daily queries to an internal Q&A bot, with 2,000 input tokens and 500 output tokens, using an Economy-tier model like Claude 3.5 Haiku at $0.80/1M input and $4.00/1M output. You’d spend about $3.60 per day, or ~$1,314/year.

- Production scale: A customer-facing chatbot with 50,000 queries/day, 3,000 input tokens, 750 output tokens, on a Balanced-tier model like Claude 4.5 Sonnet (at $3.00/1M input, $15.00/1M output) would cost ~$1,012.50 per day, or ~$369,562/year.

These contrasts show how quickly pay-per-token pricing can escalate. Choosing a model tier that overshoots your use case is a common driver of runaway budgets.
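Both scenarios reduce to the same formula: queries × tokens per query × price per million, summed over input and output. A small calculator, using exactly the volumes and prices quoted in the two scenarios, reproduces the figures and makes it easy to test other assumptions; the class and field names are illustrative.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Scenario:
    queries_per_day: int
    input_tokens: int    # per query
    output_tokens: int   # per query
    input_price: float   # USD per 1M input tokens
    output_price: float  # USD per 1M output tokens

    def daily_cost(self) -> float:
        input_cost = self.queries_per_day * self.input_tokens * self.input_price / 1_000_000
        output_cost = self.queries_per_day * self.output_tokens * self.output_price / 1_000_000
        return input_cost + output_cost

    def annual_cost(self, days: int = 365) -> float:
        return self.daily_cost() * days


prototype = Scenario(1_000, 2_000, 500, 0.80, 4.00)        # Economy tier
production = Scenario(50_000, 3_000, 750, 3.00, 15.00)     # Balanced tier
print(f"Prototype:  ${prototype.daily_cost():,.2f}/day, ${prototype.annual_cost():,.0f}/year")
print(f"Production: ${production.daily_cost():,.2f}/day, ${production.annual_cost():,.0f}/year")
```

Note that in the production case output tokens account for more than half the bill ($562.50 of $1,012.50 per day) despite being only a fifth of the volume.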

Hidden tolls & architectural complexity

- Tool calls / function invocation: Using external tools (web search, code execution) often comes at additional rates (e.g., OpenAI may charge $10–$25 per 1,000 web search calls).

- Context caching & storage: Some providers let you cache earlier context to reduce input cost, but that caching is itself a billable feature (e.g., Google charges both per-token and hourly storage for cached tokens).

- Multimodal processing: Models handling images, audio, or video have separate Generative AI pricing tiers. Video generation can cost $0.10–$0.50 per second of output.

- Model routing / classification layer: At scale, you can’t send every request to a flagship model. Many user queries are simple (status check, greeting). Efficient systems use a small “router” that sends simple queries to cheap models and complex ones to advanced ones. That routing logic is extra engineering work that most vendors don’t show on their price pages.
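A router can be as simple as a few rules in front of the model call. The sketch below is a toy keyword-and-length heuristic, not a production classifier (real routers often use a small model for this); the escalation keywords are hypothetical, and the tier prices are those of representative models from the table above, with 3,000 input and 750 output tokens per request assumed.

```python
import re
from typing import Dict, List, Tuple

# (input, output) USD per 1M tokens, taken from representative models in the tier table.
TIER_PRICES: Dict[str, Tuple[float, float]] = {
    "economy": (0.80, 4.00),      # e.g. Claude 3.5 Haiku
    "balanced": (3.00, 15.00),    # e.g. Claude 4.5 Sonnet
    "flagship": (15.00, 120.00),  # e.g. GPT-5 Pro
}

# Hypothetical escalation keywords; a real router would often use a small classifier model.
ESCALATE = {"contract", "legal", "compliance", "lawsuit"}


def route(query: str) -> str:
    """Toy heuristic: risky topics go up, short routine queries go down."""
    words = re.findall(r"[a-z']+", query.lower())
    if ESCALATE.intersection(words):
        return "flagship"
    if len(words) <= 8:
        return "economy"
    return "balanced"


def request_cost(tier: str, input_tokens: int = 3_000, output_tokens: int = 750) -> float:
    in_price, out_price = TIER_PRICES[tier]
    return (input_tokens * in_price + output_tokens * out_price) / 1_000_000


queries: List[str] = [
    "Where is my order?",
    "Hi there",
    "Compare the termination clauses in these two contract drafts and flag risks",
    "I need help choosing between the annual and monthly plans for a team of twelve",
]
routed = sum(request_cost(route(q)) for q in queries)
all_flagship = sum(request_cost("flagship") for _ in queries)
print(f"routed: ${routed:.4f} vs all-flagship: ${all_flagship:.4f} for {len(queries)} requests")
```

On this tiny sample, routing cuts the bill by roughly 70% versus sending everything to the flagship tier; the actual saving depends entirely on your real query mix and on how often the router misclassifies.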

Annual TCO ranges (with scale)

- Prototype / small-scale: between $5,000 and $25,000.

- Production scale: from $150,000 up to $1,000,000+, depending on query mix, model routing efficiency, and tool usage.

The Custom-Fit Lane: Fine-Tuning a Rented Model

What it is

You take a commercial foundation model and fine-tune it on your own data—specializing it in your domain, style, or task. You still pay for usage via the provider, but your version is tailored.

When it makes sense

- Off-the-shelf models perform well but need adaptation;

- You want a unique tone, domain knowledge, or style;

- You prefer not to build everything from scratch.

Generative AI cost structure & risks

- Upfront tuning investment: Depending on dataset size and model complexity, fine-tuning can cost from around $5,000 to $50,000, though more efficient strategies (e.g., parameter-efficient methods such as LoRA) may reduce that.

- Inference premium: After tuning, many providers charge more per token than the base model. For example, inference spend might double after customization.

- Continuing maintenance: Updating data, retraining, drift management, and monitoring add recurring cost.

- Vendor lock-in: You’re still bound to the provider’s model, API changes, or deprecation.

Tuning example & impact

If you fine-tune GPT-4.1 mini on 25 million tokens of your internal data (≈100 MB) at $25 per million training tokens, a single training epoch costs ~$625. But post-tuning, your per-token spend may double: if base pricing is $0.40 input / $1.60 output, tuned versions might charge $0.80 / $3.20.

In a production scenario of 50,000 queries/day (3,000 input and 750 output tokens per query), tuned inference would cost ~$87,600/year, versus ~$43,800/year for the untuned base model at the same volume. That ~$43,800 annual premium dwarfs the one-time training cost, so weigh it against the improved domain accuracy you actually measure. For reference, the earlier Balanced-tier production scenario on Claude 4.5 Sonnet came to ~$369,562/year.
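The comparison that matters is the recurring inference premium, not the training bill. This sketch uses the GPT-4.1 mini figures from the example; the single training epoch is an assumption (training is typically billed per token per epoch, so more epochs multiply that line).

```python
def annual_inference_cost(queries_per_day: int, input_tokens: int, output_tokens: int,
                          input_price: float, output_price: float, days: int = 365) -> float:
    """Annual API spend; prices are USD per 1M tokens."""
    per_query = (input_tokens * input_price + output_tokens * output_price) / 1_000_000
    return queries_per_day * per_query * days


# Figures from the GPT-4.1 mini example; EPOCHS = 1 is an assumption
# (training is typically billed per token per epoch).
TRAINING_TOKENS = 25_000_000
TRAINING_PRICE = 25.00  # USD per 1M training tokens, as quoted in the text
EPOCHS = 1

training_cost = TRAINING_TOKENS / 1_000_000 * TRAINING_PRICE * EPOCHS
base = annual_inference_cost(50_000, 3_000, 750, 0.40, 1.60)
tuned = annual_inference_cost(50_000, 3_000, 750, 0.80, 3.20)

print(f"one-time training: ${training_cost:,.0f}")
print(f"base: ${base:,.0f}/yr, tuned: ${tuned:,.0f}/yr, premium: ${tuned - base:,.0f}/yr")
```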

Trade-off summary

Fine-tuning can boost precision and brand fit, but it doesn’t remove your dependency on providers and often increases per-use costs. It’s best when performance gains are clear, measurable, and sustained.

The DIY Approach: Raw Open-Source Models

What it is

You host open-source models on your own infrastructure and build the entire stack (serving, scaling, monitoring, compliance) internally.

When it makes sense

- You have deep technical expertise;

- You demand full control, data sovereignty, or custom base model innovation;

- You expect extremely high volume usage where API costs become prohibitive.

Estimated ranges & considerations

| Model Class | Year 1 Cost | Annual Recurring Cost |
|---|---|---|
| Small (7–13B) | $25,000–50,000 | $15,000–30,000 |
| Medium (70B) | $100,000–200,000 | $60,000–120,000 |
| Large (400B+) | $500,000–1,000,000+ | $200,000–400,000+ |

These figures assume you already have or will build supporting infrastructure. But these are only the headline numbers. To realize operational value:

- Infrastructure must run 24/7 with redundancy, autoscaling, backup, failover;

- Talent must maintain and tune models, oversee optimizations, guard against drift;

- Security, compliance, logging, and upgrades are constant burdens;

- Engineering risk is high: a single outage or misconfiguration can bring down your whole system.

In practice, self-hosting only becomes economically compelling once you cross a very high token volume (e.g., 100M+ tokens/day) with sustained 70%+ utilization. Before that, usage and infrastructure costs outweigh the “free license” appeal.
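A first-pass break-even check divides the annual self-hosting budget by the API price per token. This is a deliberately simplified sketch: it uses the recurring-cost range for a 70B model from the table above, assumes a hypothetical blended API price of $3 per million tokens, and ignores Year 1 setup costs and hardware capacity. The utilization condition matters because idle GPUs still cost money, which pushes the real break-even point higher.

```python
def break_even_tokens_per_day(annual_self_host_cost: float, api_price_per_million: float) -> float:
    """Daily token volume at which API spend equals a fixed annual self-hosting budget."""
    daily_budget = annual_self_host_cost / 365
    return daily_budget / api_price_per_million * 1_000_000


# Assumptions: recurring costs for a 70B model from the table above, and a
# hypothetical blended API price of $3 per 1M tokens (input and output mixed).
for annual in (60_000, 120_000):
    volume = break_even_tokens_per_day(annual, api_price_per_million=3.0)
    print(f"${annual:,}/yr self-hosting breaks even at ~{volume / 1e6:,.0f}M tokens/day")
```

Under these assumptions the break-even lands at roughly 55M to 110M tokens/day, in the same range as the 100M+ tokens/day threshold above.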

The Bespoke Blueprint: Custom & Hybrid Solutions

What it is

This is a hybrid, modular system tailored to your context, combining layers like RAG (Retrieval-Augmented Generation), model routing, selective fine-tuning, caching, and open-source inference. You gradually internalize components as growth justifies doing so.

When it makes sense

- You want full IP, data sovereignty, and cost predictability;

- You expect to scale and minimize dependency risks;

- You want the benefits of both managed APIs and internal control.

Why it often delivers the best long-term value

- 74% of organizations report their most advanced GenAI efforts are meeting or exceeding ROI expectations.

- At scale, hybrid implementations are roughly 40–60% less expensive than pure API-based systems while letting you keep control.

- They allow you to replace or optimize individual layers over time without full rebuild.

Typical structure

- Initial build: $150,000–500,000 over 3–6 months

- Annual operating cost: $200,000–750,000

- Layer-level breakdown:

• RAG ingestion & embedding setup: $10K–30K

• Model routing layer setup: <$50K (but can save 70–85% on expensive inference)

• Prompt optimization (token trimming, reuse): $5K–25K (often cuts token use by 40–60%)

ROI and savings example

Imagine processing 100M tokens/month under a premium API at a blended ~$0.30 per 1,000 tokens (input + output). That’s ~$30,000 per month, or $360,000 per year. With a well-architected hybrid model, you might reduce expenditure to $10,000/month, saving $240,000 annually.
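The arithmetic is easy to reproduce, and it is worth checking the unit: a ~$30,000 monthly bill for 100M tokens implies a blended rate of ~$0.30 per 1,000 tokens ($300 per million). The $10,000 hybrid figure is the illustrative number from the example, not a computed result.

```python
def monthly_api_cost(tokens_per_month: int, price_per_1k: float) -> float:
    """Monthly spend at a blended price per 1,000 tokens (input + output)."""
    return tokens_per_month / 1_000 * price_per_1k


def implied_price_per_1k(monthly_bill: float, tokens_per_month: int) -> float:
    """Back out the blended rate from an invoice."""
    return monthly_bill / (tokens_per_month / 1_000)


TOKENS = 100_000_000
baseline = monthly_api_cost(TOKENS, price_per_1k=0.30)  # premium API
hybrid = 10_000.0  # illustrative hybrid monthly spend from the example
print(f"baseline ${baseline:,.0f}/mo, annual savings ${(baseline - hybrid) * 12:,.0f}")
print(f"implied blended rate: ${implied_price_per_1k(30_000, TOKENS):.2f} per 1K tokens")
```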

Budgeting for Your Generative AI Initiative

Let’s translate strategy into numbers. While every tech initiative is unique, most companies are now committing more than 5% of their overall digital transformation budgets to GenAI programs. But what you spend should always reflect where you are in the journey: from experimental pilot to enterprise-critical platform.

Here are three common investment tiers we see in the market today, each aligned with a clear strategic goal and set of outcomes. Use this as a framework to right-size your budget.

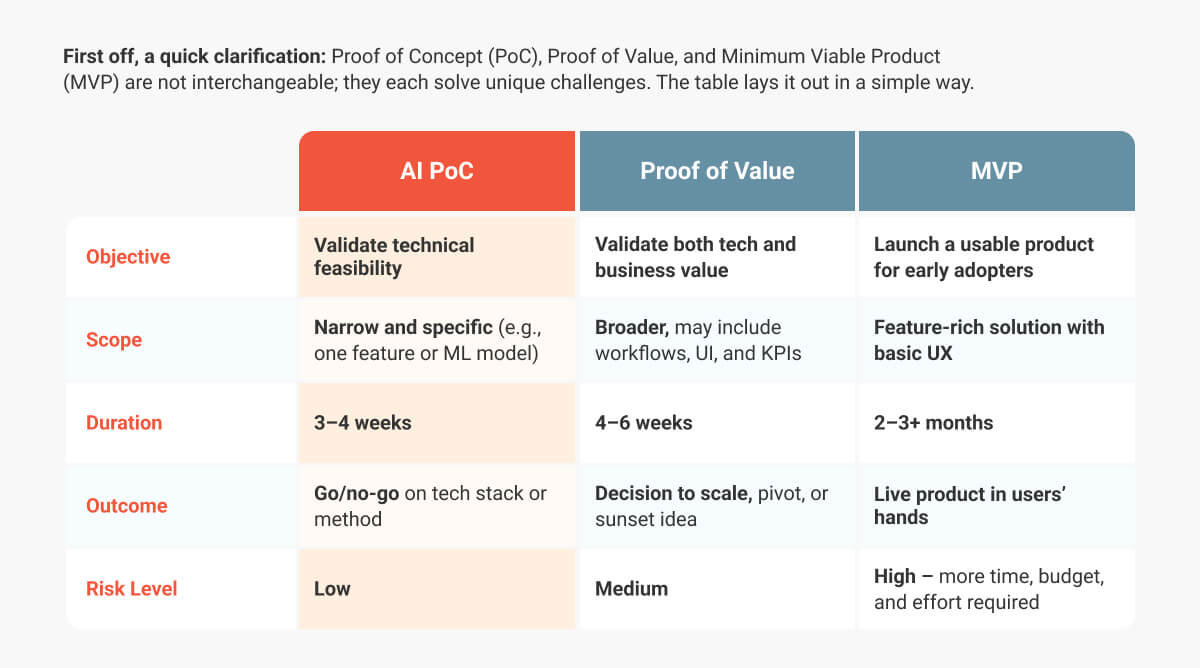

Proof-of-Concept & MVP

Typical Budget Range: $20,000 – $100,000

Primary Goal: To prove that Generative AI can create real value for your business, answering the critical question: “Can this work?”

This phase is about validation and fast learning. Most businesses are testing high-potential applications that demand stronger validation and oversight. The idea is to fail fast and cheaply, iterate quickly, and verify that the use case addresses a real operational pain point and can produce visible business results. You’re not committing to a full product or business transformation yet. Instead, the goal is to answer the following:

- Does Generative AI solve a pressing issue or improve existing workflows?

- Can you prove that it will have enough impact to warrant further investment?

What this budget typically buys:

- Access to third-party APIs (OpenAI, Anthropic, etc.) for initial model testing and prototyping;

- A focused, one-use case product (e.g., a small internal tool or a public-facing prototype);

- Model fine-tuning and initial setup (including API setup, integration, and UX/UI design);

- Basic integrations into existing business systems (CRM, communication tools);

- Limited token usage (1M–5M tokens per month).

Mid-Scale Application

Typical Budget Range: $100,000 – $500,000

Primary Goal: To evolve from a prototype to a functional, scalable application that delivers measurable business outcomes.

Here, the goal is to move from a successful test to a fully integrated product. This often involves connecting your AI model to existing systems (like CRM, customer service tools, or ERP platforms) and ensuring it serves a business unit or customer segment efficiently.

What this budget typically buys:

- Fine-tuned AI models suited for your specific use case;

- Integration with core business systems (CRM, ERP, etc.);

- Scalable backend and hosting setup (cloud services, compute power);

- Higher token usage (10M–50M tokens per month);

- Ongoing model adjustments for performance improvements and cost optimization;

- Initial MLOps setup for monitoring and retraining.

Enterprise-Grade Deployment

Typical Budget Range: $600,000 – $1.5M+

Primary Goal: To deploy an enterprise-grade AI system that’s highly secure, scalable, and fully integrated across your business, compliant with industry regulations.

In this phase, you are deploying mission-critical AI that needs to work reliably at scale, within complex systems, while maintaining high standards for security and compliance with regulations and standards like HIPAA, GDPR, and SOC 2. This includes integrating AI models into multiple platforms, monitoring performance at scale, and setting up a comprehensive MLOps framework for long-term management.

What this budget typically buys:

- Sophisticated hybrid AI models (RAG, self-hosted models, fine-tuned APIs);

- Multi-platform integrations (ERP, CRM, knowledge management);

- Enterprise-grade infrastructure (secure cloud setup, compliance, data governance);

- Advanced MLOps framework (model monitoring, versioning, retraining, performance tracking);

- Token usage in the 100M–500M/month range;

- Enhanced security and compliance monitoring tools.

Unearthing the Hidden Costs of Generative AI

Innovation may fail not because of the initial build budget, but due to hidden, ongoing expenses that quickly escalate once the project is underway. These hidden costs are like the submerged portion of an iceberg: the part you don’t see until it’s too late. By understanding and planning for them up front, you can avoid unpleasant surprises and ensure your AI project remains sustainable and delivers long-term value.

Inference Costs Surpass Training

While most companies focus heavily on the cost of training AI models, inference (using the model to generate outputs) can end up costing ten times more. It requires continuous real-time computation, and the more complex the model, the more it costs to run. For example, GPT-5 Pro is priced at $120 per million output tokens. As your user base grows and your AI application is used more heavily, these expenses become substantial. What looks like a small investment at the beginning compounds at scale.

Moreover, inference costs are not just a matter of volume. They depend on how well your system is optimized. Without an intelligent approach to model routing and token management, AI inference can become a significant, ongoing financial burden. The key to managing this is not only selecting the right model tier but also leveraging optimization techniques like prompt engineering and token pruning to reduce the number of tokens being processed, thus decreasing overall expenses.
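One simple token-management technique is trimming conversation history to a fixed budget, so long chats don't resend every earlier message as input tokens. The sketch below keeps the system prompt plus the most recent messages that fit; it uses the article's 100-tokens-per-75-words rule of thumb as a stand-in for a real tokenizer, and the message format (`role`/`content` dictionaries) is an assumption modeled on common chat APIs.

```python
import math
from typing import Dict, List

Message = Dict[str, str]  # assumed shape: {"role": ..., "content": ...}


def estimate_tokens(text: str) -> int:
    # Rule of thumb: 100 tokens ≈ 75 words. A real tokenizer would be more accurate.
    return math.ceil(len(text.split()) * 100 / 75)


def trim_history(messages: List[Message], budget: int) -> List[Message]:
    """Keep system messages plus the most recent messages that fit the token budget."""
    system = [m for m in messages if m["role"] == "system"]
    rest = [m for m in messages if m["role"] != "system"]
    used = sum(estimate_tokens(m["content"]) for m in system)
    kept: List[Message] = []
    for message in reversed(rest):  # walk from newest to oldest
        cost = estimate_tokens(message["content"])
        if used + cost > budget:
            break
        kept.append(message)
        used += cost
    return system + list(reversed(kept))  # restore chronological order


history = [
    {"role": "system", "content": "You are a helpful support assistant"},
    {"role": "user", "content": "w " * 30},
    {"role": "assistant", "content": "x " * 30},
    {"role": "user", "content": "Any update today?"},
]
print([m["role"] for m in trim_history(history, budget=60)])
```

Dropping old turns can lose context the model needs, so teams often pair this with summarizing older messages rather than discarding them outright.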

Ongoing Data Management Expenses

Generative AI models don’t work in a vacuum. They rely on continuous streams of clean, accurate data. Data governance, labeling, and cleaning are ongoing processes that require significant investment, especially when working with sensitive or industry-specific data. Whether you’re in Healthcare, Finance, or eCommerce, data is the foundation of your model’s performance, and if it’s not properly managed, the AI’s output will degrade.

In strictly regulated industries, the cost of Generative AI goes beyond labeling and cleaning data; you must also ensure that everything complies with privacy laws like HIPAA and GDPR. This means regular audits and updates to your pipelines. As your data needs scale, the administrative burden grows, and companies often underestimate the continuous investment required to maintain a clean, compliant dataset.

The Lack of Scalability

What works for a small-scale prototype often collapses in a production environment. Many AI projects begin with a Proof-of-Concept that works well for a small set of users, but as the user base expands, the initial architecture quickly becomes insufficient.

Scaling an AI system often requires a complete re-architecture: adding load balancing, distributed systems, and more robust cloud infrastructure to handle increased demand. These changes can significantly increase spend, as the system must be optimized for speed, security, and uptime. Companies may also face additional development costs to ensure the model can handle large volumes of data and complex user interactions.

Ongoing Legal & Security Spend

Data privacy and regulatory compliance are critical concerns for businesses using AI. Complying with regulations such as GDPR, HIPAA, and CCPA is not a one-time task but a continual process. These laws require frequent audits, legal reviews, and updates to ensure that your AI systems are not inadvertently violating user rights or mishandling sensitive data.

Such compliance costs are often overlooked in initial budgeting but can add up quickly. Failing to comply can result in significant financial penalties, not to mention the reputational damage that comes with data breaches or privacy violations.

The Human-in-the-Loop Overhead

Despite the sophistication of Generative AI, human oversight remains a crucial part of the process. AI models are not perfect; they can produce biased, inaccurate, or unethical outputs, especially when deployed in customer-facing applications. This is where the human-in-the-loop (HITL) process comes in. Specialists must review and moderate the outputs to ensure they meet quality and ethical standards.

The need for such moderation grows as AI models scale and encounter more diverse user interactions. Companies may need to dedicate staff or contractors to review AI-generated content, correct errors, and make the system work as expected. While automated tools can help, human oversight remains essential for high-stakes environments like customer service or legal applications.

These HITL costs can be substantial and are often underestimated. Depending on the scale of the operation, moderation teams can quickly become a significant ongoing expense, especially in sectors with strict regulatory or ethical guidelines.
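A back-of-the-envelope staffing model helps size this line item before launch. Every input in the sketch below is hypothetical (daily volume, share of outputs flagged for review, minutes per review, productive minutes per reviewer), so substitute your own measurements.

```python
import math


def reviewers_needed(outputs_per_day: int, review_rate: float, minutes_per_review: float,
                     productive_minutes_per_reviewer: float = 360) -> int:
    """Full-time reviewers required to clear the daily review queue."""
    review_minutes = outputs_per_day * review_rate * minutes_per_review
    return math.ceil(review_minutes / productive_minutes_per_reviewer)


# Hypothetical inputs: 50,000 AI responses/day, 5% flagged for review,
# 2 minutes per review, 6 productive hours per reviewer per day.
print(reviewers_needed(50_000, 0.05, 2))
```

The review rate is the main lever: under the same assumptions, cutting the flagged share from 5% to 1% shrinks the team from 14 reviewers to 3.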

An Executive’s Guide to AI Budget Optimization

Smart expenditure control is not about reducing Generative AI costs across the board. It’s more about focusing on what matters most and ensuring long-term returns. Here’s how to maximize the effectiveness of every dollar you invest in technology.

Start with a PoC

The most common mistake is attempting a large-scale implementation from day one. A focused Proof-of-Concept is the most capital-efficient first step. Its goal is to validate a single, high-impact use case with a limited budget and a clear timeline. This approach allows you to confirm the technology’s business value and calculate a credible ROI before committing to a significant, enterprise-wide investment. It’s a disciplined way to manage risk and build stakeholder confidence.

Right-Size Your Model

The largest, most powerful AI systems are also the most expensive to operate, yet many business tasks do not require this level of capability. Using small language models (SLMs) for specific functions is a highly effective budget-control strategy. They are cheaper to run, respond faster, and can often outperform a general-purpose model on a focused task. The key is to match model capability to the complexity of the job and avoid paying premium prices for power you don’t need.

Work Smarter with RAG

Instead of relying on static, pre-trained knowledge, Retrieval-Augmented Generation connects the system to your live, proprietary data sources. It’s like giving the AI an “open-book test” using your company’s latest information. RAG is a powerful cost-optimization tool because it drastically reduces the need for expensive, repeated fine-tuning or retraining every time your data changes. It delivers more accurate, contextually relevant results at a fraction of the long-term operational expenditure.
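The mechanics are simple to sketch: retrieve the documents most relevant to a question, then place them in the prompt as context. This minimal version assumes a tiny in-memory document list and uses bag-of-words cosine similarity as a stand-in for the embedding model and vector database a real system would use; the final LLM call is omitted, and the sample documents are hypothetical.

```python
import math
import re
from collections import Counter
from typing import List, Tuple


def tokenize(text: str) -> List[str]:
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two bag-of-words vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, documents: List[str], k: int = 2) -> List[str]:
    """Return up to k documents with non-zero similarity, best first."""
    q = Counter(tokenize(query))
    scored: List[Tuple[float, str]] = [(cosine(q, Counter(tokenize(d))), d) for d in documents]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for score, doc in scored[:k] if score > 0]


def build_prompt(query: str, documents: List[str], k: int = 2) -> str:
    context = "\n".join(f"- {doc}" for doc in retrieve(query, documents, k))
    return ("Answer using only the context below. If the answer is not there, say so.\n"
            f"Context:\n{context}\n\nQuestion: {query}")


docs = [
    "Refunds are processed within 5 business days.",
    "Our office is closed on public holidays.",
    "Enterprise plans include SSO and audit logs.",
]
print(build_prompt("How long do refunds take?", docs))
```

When a policy changes, you update the document store rather than retraining the model, which is where the cost advantage over repeated fine-tuning comes from.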

Partnering with the Right Experts

The fastest way to overspend on your initiative is through avoidable errors: choosing the wrong architecture, underestimating data preparation, or failing to plan for scale. A dedicated implementation partner brings a proven methodology, a team of specialized AI consultants, and the foresight to navigate common pitfalls. The initial investment in the right vendor is often offset by preventing costly mistakes and significantly accelerating your time-to-value.

Your Next Move

The cost of Generative AI is a complex but manageable aspect of tech adoption. At Master of Code Global, we specialize in optimizing investments by aligning real business needs with targeted, cost-efficient solutions. With our deep expertise, we ensure your innovation strategy not only delivers high value but does so in a way that scales with your business.

We invite you to schedule a tailored discovery session to explore how our approach can help you navigate the financial complexities of Generative AI. Let’s identify the best path forward for your organization, keeping both costs and outcomes in balance.