Chat Generative Pre-Trained Transformer or simply ChatGPT has become synonymous with Gen AI, and for good reason. It’s undeniably a leader in the field, used by individuals and companies alike. But this widespread adoption raises a critical question: what are the ChatGPT security risks for businesses? Is it truly safe to integrate into your operations? The answer is more nuanced than you might expect, and we’re here to address those concerns head-on.

In this article, crafted in collaboration with our cybersecurity experts, we’ll resolve the fundamental inquiry: is ChatGPT secure for business? Let’s explore the potential dangers of this OpenAI powerhouse and equip you with recommendations to protect your organization.

Table of Contents

Is GPT a Ticking Time Bomb for Your Business?

You might be thinking, “Why should I worry about ChatGPT threats just because my employees are utilizing it?” Well, here’s the thing: a lot of people are using the model, and not everyone knows how to do it safely.

Let’s look at the numbers:

- OpenAI’s model is a real workhorse, handling 10 million queries every single day.

- Users are spending a significant chunk of time on it too, with average sessions lasting over 13 minutes.

- Nearly half of professionals have adopted Gen AI tools for work tasks, and a surprising 68% didn’t tell their bosses!

- ChatGPT is already being embraced by almost half of all companies, and another 30% are planning to jump on board soon. It’s being integrated everywhere, from writing code (66% of organizations) and producing marketing content (58%) to managing customer support (57%) and summarizing meetings (52%).

- This adoption is happening across all kinds of industries. In 2023, the tech sector was leading the charge with over 250 institutions employing the model, followed by education and business services. Plus, fields like finance, retail, and healthcare are seeing increased usage.

Here’s where the problems start to creep in:

- Since its launch, 4% of employees have admitted to feeding sensitive information into ChatGPT, and that accounts for 11% of all the data it’s processed!

- Even worse, the number of incidents where confidential insights were accidentally leaked jumped by over 60% in just a couple of months in early 2023. The most common types of data shared are private company info, source code, and – yikes – client records!

- It’s not just accidents we must be cautious of. Most people (nearly 90%!) believe chatbots like ChatGPT could be used for malicious purposes, e.g., stealing personal details or tricking users. And a whopping 80% of respondents from another study agreed that cybercriminals are already utilizing the bot for their shady activities.

Here’s the real kicker: according to Microsoft and LinkedIn, the majority of workers (76%) know they need AI skills to remain competitive. But here’s the catch – only 39% have actually received any proper training on how to use these tools safely and effectively. And only a quarter of organizations intend to offer that kind of education this year.

All this tells us one thing: LLMs are here to stay, and it’s only going to get bigger. But without appropriate management and guidance, staff can make some serious mistakes that put your business and your clients at risk. Still don’t believe in the dangers of using ChatGPT? Then let’s dive into the next section and see the real-world issues that are already occurring.

Discover more valuable insights on ChatGPT adoption metrics in our latest article, featuring more than 50 informative statistics

ChatGPT Risks for Business: What You Absolutely Need to Know

It’s not just about accidental data leaks. The top concerns with generative technology include hallucinations (56%), cybersecurity (53%), problems with intellectual property (46%), keeping up with regulations (45%), and explainability (39%).

It’s interesting, though – professionals have varying worries when it comes to this new tech. According to a recent study, newbies are mostly stressed about information defense (44%) and the difficulty of integrating LLMs into their work (38%). The AI veterans, on the other hand, are more concerned about the bigger picture: social responsibility (46%), being eco-friendly (42%), and making sure they’re following all the data protection rules (40%). Here’s a real head-scratcher: most executives (79%) say that ethics in artificial intelligence is important, but less than a quarter of them have actually done anything to put ethical practices in place!

Alright, let’s get straight to the point. We’re going to break down the 7 biggest ChatGPT security implications and types of trouble they can cause.

Sensitive Data Sharing

One of the most significant perils of using GPT models in a business context is the potential for unintentional leakage of classified data. Employees, in their interactions with the model, might inadvertently incorporate confidential components within their prompts or receive outputs that reveal proprietary knowledge. This could involve anything from client details and financial records to internal communications, strategic plans, or even source code.

The outcomes of such exposure are severe. It could lead to breaches of data privacy regulations like GDPR, resulting in hefty fines and legal repercussions. Competitors could gain access to valuable intellectual property, eroding a company’s competitive advantage. Moreover, loss of restricted insights damages customer trust, harms brand reputation, and disrupts operations. The seemingly innocuous act of interacting with an AI chatbot can, therefore, have far-reaching consequences if not managed carefully.

Social Engineering & Phishing

Prepare yourself, because this is where things get really tricky. GPT’s advanced language capabilities make it a powerful tool for crafting highly convincing phishing emails, messages, or social media posts. Imagine receiving an email that seems to be from your bank, your boss, or a trusted colleague, but it’s actually created by a malicious actor with LLMs. Such interlocutors can be incredibly persuasive, making it difficult to distinguish them from legitimate contacts.

The danger here goes beyond just clicking on a bad link or downloading a virus. Attackers tailor their attempts to individual targets, utilizing personalized information and language that makes the scam seem even more believable. This increases the likelihood of individuals falling prey to these attacks, possibly revealing login credentials, financial info, or other private data. The effect? Monetary losses, compromised accounts, and turmoil of critical processes. ChatGPT, in the wrong hands, becomes a sophisticated weapon for social engineering and deception, requiring heightened vigilance and awareness to combat.

Integration Vulnerabilities & API Attacks

Let’s face it, ChatGPT doesn’t exist in isolation. To truly capitalize on its power, one needs to connect it with other systems, e.g., CRM, various platforms, or internal databases. At this moment, the stakes can increase. The embeddings, often made through APIs or custom connectors (those digital bridges between applications), are prime candidates for exploitation. Look at it from this perspective: if ChatGPT is a high-tech fortress, a poorly secured API is a secret backdoor that hackers slip through.

Now, visualize the consequences. Cybercriminals leveraging these flaws retrieve not just the model storage, but also the data flowing through those connections and the systems it’s linked to. Eventually, you are susceptible to alterations of evidence, interruptions in activities, and complete system compromise. And here’s the twist: because these susceptibilities are frequently present within the integration points, they might not be immediately obvious, rendering them harder to detect and address. So, as you’re researching the possibilities of ChatGPT integration services, remember to prioritize security every step of the way.

Inaccurate Content Generation

Unlike traditional software, ChatGPT doesn’t always provide accurate or reliable information. Its answers are based on the vast dataset it was trained on, which may contain biases, inaccuracies, or outdated facts. This may result in the generation of materials that are factually incorrect, misleading, or entirely fabricated, a phenomenon often referred to as “hallucination.”

How serious are the ramifications of this ChatGPT security vulnerability? Companies relying on LLMs for tasks such as creating marketing content, drafting legal documents, or providing customer support risk disseminating false info, potentially harming their credibility and weakening consumer loyalty. Faulty results also trigger poor decision-making, misinformed actions, and even legal challenges if the AI-generated piece is used in official communications or publications. Ensuring the accuracy and reliability of such outputs is, therefore, paramount for businesses aiming to exploit its advantages responsibly.

Unauthorized Access & Data Theft

Think of OpenAI’s model as a doorway to your company’s valuable insights. While it is a robust instrument for communication and productivity, it also presents a probable entry point for unpermitted intrusion and data stealing. Cybercriminals are constantly seeking new ways to take advantage of vulnerabilities, and AI systems are attractive games. They may attempt to bypass security measures, manipulate the inputs, or directly attack the infrastructure to penetrate into protected datasets.

The repercussions of such a breach are far-reaching. Hacked user data can lead to identity theft, financial fraud, and erosion of trust. Confidential business info, e.g., trade secrets or expansion plans, could be misappropriated, jeopardizing a firm’s market position and leading to substantial profit downturns. Furthermore, unauthorized access disrupts operations, compromises data integrity, and harms a brand image. And if you want to explore how different identity-theft protection services compare, Cybernews offers a detailed breakdown of Guardio vs. Aura, two reputable solutions designed to help individuals safeguard their personal data and reduce the risk of fraud.

Privacy Breaches & Data Retention Risks

Every conversation you have with any language model leaves a trace. Even though it feels like a casual chat, the facts you share – and the responses you receive – can be stored and retained, potentially indefinitely. This creates a hidden layer of threat that outstrips the immediate interaction. Picture sensitive consumer details, internal discussions, or brainstorming sessions lingering in the depths of the AI’s memory, vulnerable to infringement or accidental exposure.

Now, here’s where it gets even more complicated. The way ChatGPT handles your information might not match up with your organization’s policies or the requirements you have to follow. This creates a real headache when it comes to aspects like GDPR compliance and data retention rules. And there’s another sneaky aspect to this: even if you delete something on your end, it might still be hanging around inside the model, popping up unexpectedly in future conversations. This “ghost” causes unintended disclosures, privacy violations, and legal trouble down the road. So, while you’re busy exploring the cool bot features, don’t forget to ask the tough questions about where your input goes and how long it stays there.

ChatGPT Security Concerns: Your Action Plan for Safe and Effective Use

We’ve covered the worrisome issues. Now, let’s talk about effectively shielding from these threats. Don’t worry, it’s not all doom and gloom! With the right partner and a bit of know-how, you can maximize the effectiveness of GPT while keeping the data, employees, and reputation safe.

See it in this light: OpenAI’s model is a powerful instrument, but like any tool, it requires to be used responsibly and with the proper safety measures in place. Let’s dive into the essential strategies that will turn your company into a security pro.

First and foremost, don’t maneuver through the complexities of LLM protection alone. Look for a reliable AI provider like Master of Code Global, with a team of seasoned ChatGPT engineers who live and breathe AI security. We are your trusted guide, helping you set up the system safely, keeping a watchful eye on the processes, and fine-tuning the prompts for maximum defense and efficiency.

But that’s not all. We offer a full suite of services to provide all-encompassing safeguarding for the business in the age of intelligentization, including expert cybersecurity consulting, in-depth audits, proactive penetration testing, and specialized bot security assessments. We’ll even help you navigate the tricky waters of ISO 27001 and HIPAA compliance. With Master of Code Global on your side, you can confidently draw on the power of ChatGPT without compromising your digital well-being.

Don’t just take our word for it – see how we put these principles into practice! Here’s how Master of Code Global ensures robust safety for your ChatGPT projects:

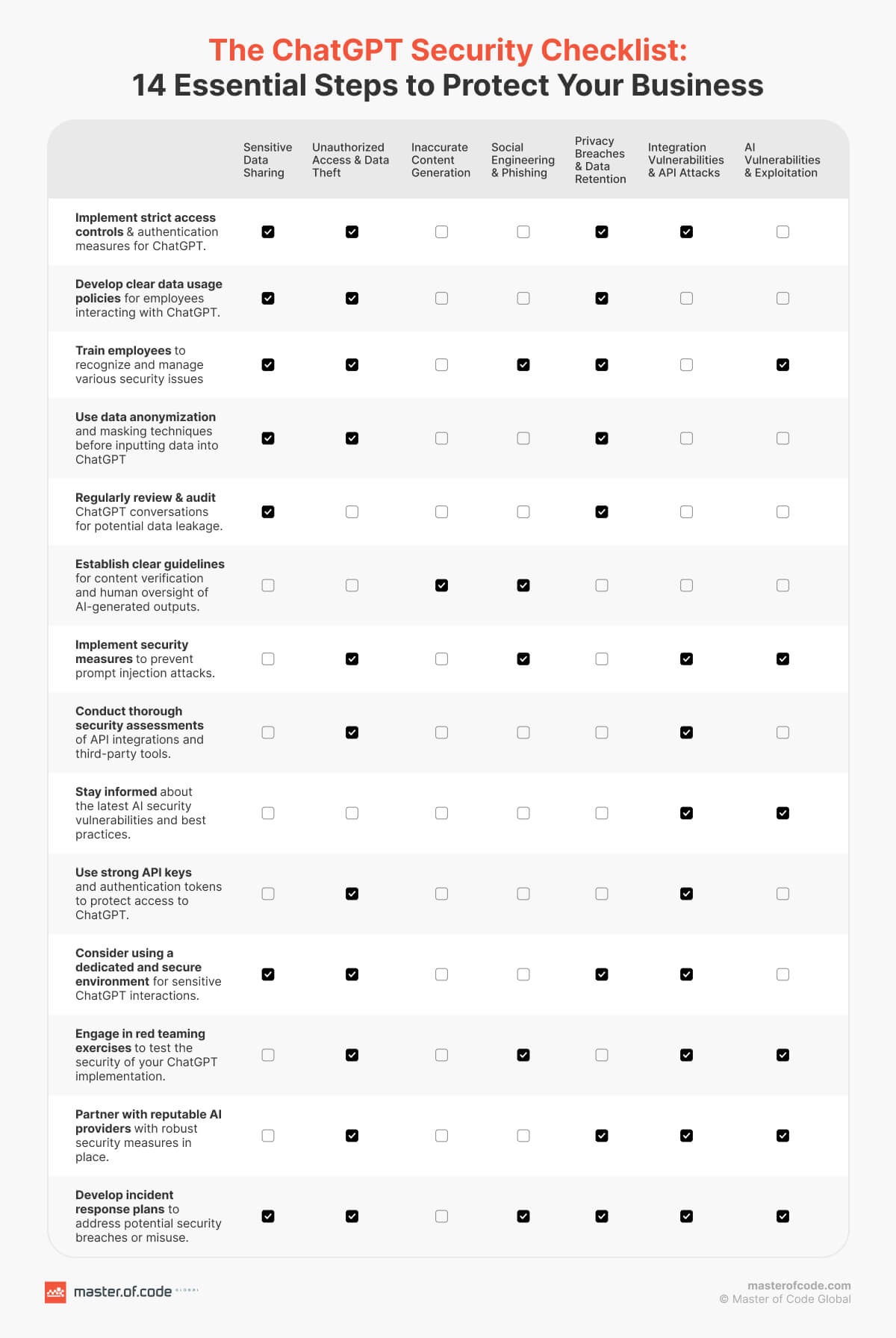

Building a Solid Security Base

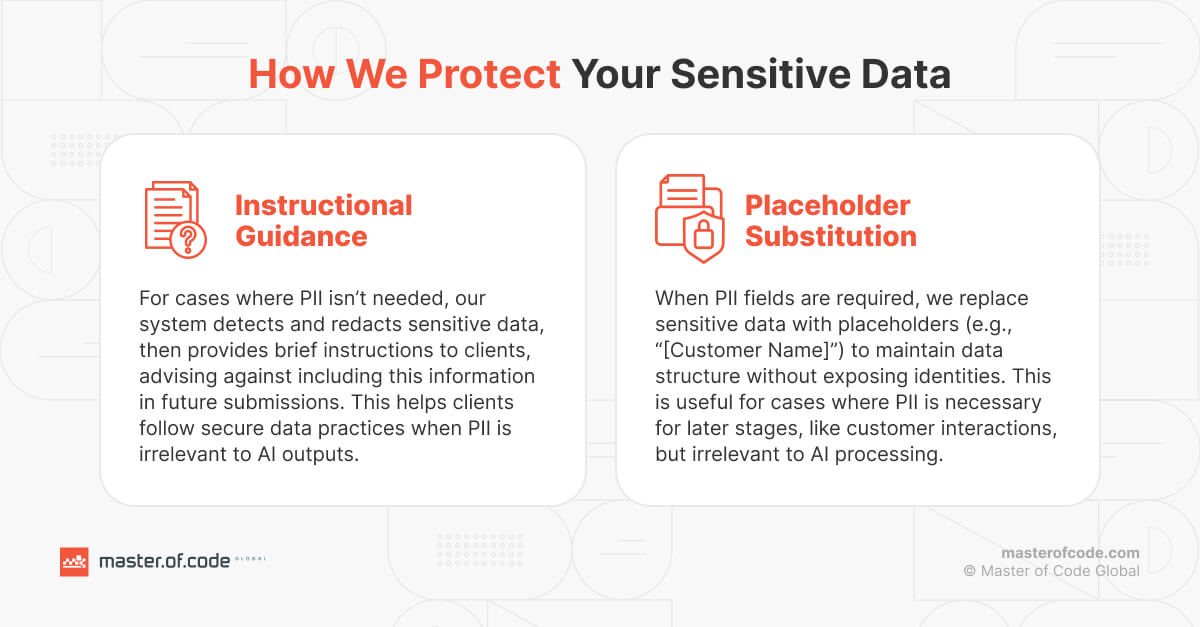

- Access control & data governance. Don’t let just anyone exploit your chatbot. We’ll set up strict login rules and permissions to control who can operate it and what they can do with it. The next step is to create clear guidelines for how employees should employ LLMs and train them to spot and handle security problems. Our team will aid you to make sure everyone knows what kind of information should never go near this AI.

- Data minimization & anonymization. Remember, the less data you feed into ChatGPT, the smaller the risk. Only give it the info it absolutely needs to do its job. And before you share anything sensitive, look for tools to hide or scramble those details, just in case.

- Secure integration & API management. Think of those APIs connecting the model to your other apps as potential weak spots. We’ll give them a thorough security check-up. Our specialists also recommend using strong passwords, fine codes, and encryption to protect your records as they travel between systems. And don’t forget to keep those protection efforts up-to-date!

Prioritizing Human Oversight & Content Integrity

- Human-in-the-loop & output verification. No matter how smart generative models seem, always have a human double-check its work. Our experts will specify exact parameters for verifying facts, spotting errors, and watching out for any bias in the responses. We also strongly advise not to rely on automation for everything, especially for important tasks where mistakes have big consequences.

- Educate your workforce. Awareness breeds capability when it comes to the safety of ChatGPT. Master of Code Global will develop training programs for your employees. With its help, you can confirm they understand the conceivable risks and master the principles of responsible usage while recognizing and avoiding common pitfalls. A well-informed team is your first line of defense in maintaining an unassailable environment.

Introducing Proactive Security & Incident Response

- Vulnerability management & threat intelligence. All of our specialists stay updated on the latest news on vulnerabilities, attack methods, and best practices. This allows us to be vigilant in finding and fixing possible dangers, similar to those sneaky prompt injection attacks. Based on this knowledge, we will put your defenses to the test with “red teaming” exercises to find any susceptible areas.

- Incident response planning. Even with the robust standards, things can still go wrong. For such cases, we design a detailed plan for dealing with ChatGPT security issues. This document will cover everything from damage control and investigation to getting the whole ecosystem back on track and protecting your reputation.

Conclusion

So, there you have it – the good, the bad, and the potentially ugly of ChatGPT for businesses. It’s a versatile instrument with a myriad of verified commercial applications, but as with any powerful tool, it needs to be handled with care. Remember, it’s not just about preventing those accidental data slip-ups; it’s about staying ahead of the curve in a world where artificial intelligence is changing the game for cyber hazards. As our Application Security Leader, Anhelina Biliak, puts it:

“AI-driven tools like ChatGPT present new security risks, notably enabling the creation of polymorphic malware that can adapt to evade detection. This technology lowers the skill barrier, making sophisticated cyber tactics accessible to a wider range of actors, potentially increasing the frequency and scale of attacks. Consequently, the need for advanced, AI-resistant security measures has never been greater. This shift is likely to drive substantial investment in cybersecurity innovation to counter these emerging threats.”

Feeling a bit overwhelmed? Don’t worry, we’ve got your back! Whether you require aid developing a secure ChatGPT solution, integrating it seamlessly with your existing systems, or just want to make sure your team is up to speed on the latest best practices, we’re here to help. Don’t wait for a security mishap to happen – contact us today and let’s build a safe and successful AI-powered future for your company.

Ready to build your own Conversational AI solution? Let’s chat!