Generative AI-Powered Knowledge Base Automation

Accelerating Knowledge Base Article Generation for Conversational AI Platform Using Large Language Model (LLM)

This solution was built for a top-tier Conversational AI company that enables 18k+ businesses worldwide to build stronger customer relationships. Each month, its platform powers 1 billion natural conversations across a range of digital channels. With a global reach and a diverse clientele, the company helps businesses optimize support services and increase brand engagement.

Challenge

How did we automate knowledge base development so the brand’s clients could efficiently address their end users’ inquiries?

Customers of the Conversational AI Platform faced a significant hurdle with one of its embedded components: they had to build and update their knowledge repository manually. This time-consuming process prevented them from fully using their chatbots to streamline customer assistance, since the chatbots rely on that repository to extract information and answer common questions automatically. The solution needed to remove this bottleneck, saving resources while enhancing businesses’ self-service capabilities. This would ultimately improve efficiency and the end-consumer experience without significantly increasing the workload on support agents.

What We Created

Master of Code Global developed a solution that automatically turns past customer conversations into a powerful knowledge base for a chatbot.

Our team aimed to eliminate the tedious task of manually writing knowledge base articles. The tool we developed allows platform accounts to intelligently analyze consumer-business interactions, pinpointing frequently asked questions (FAQs) alongside the best answers provided by support agents.

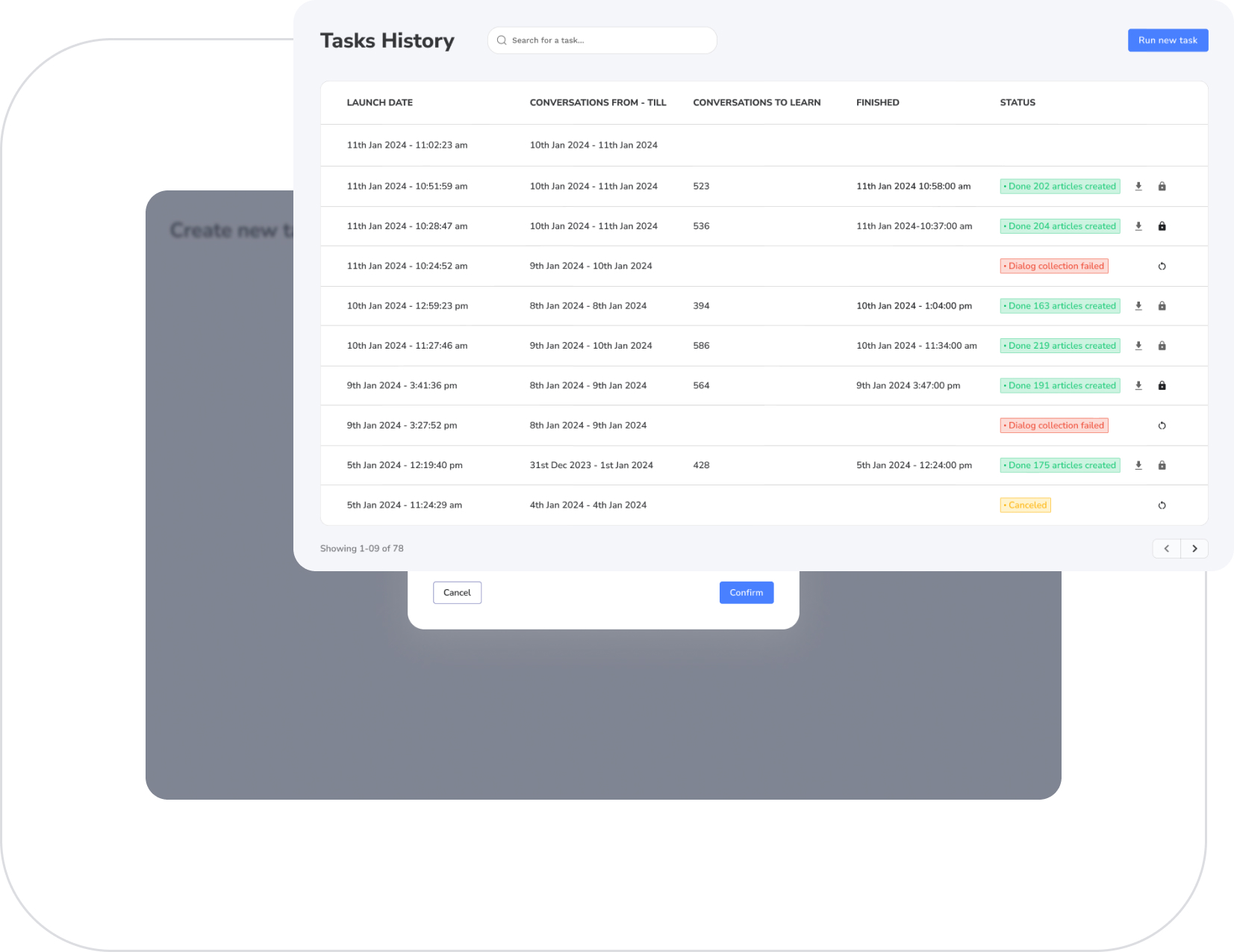

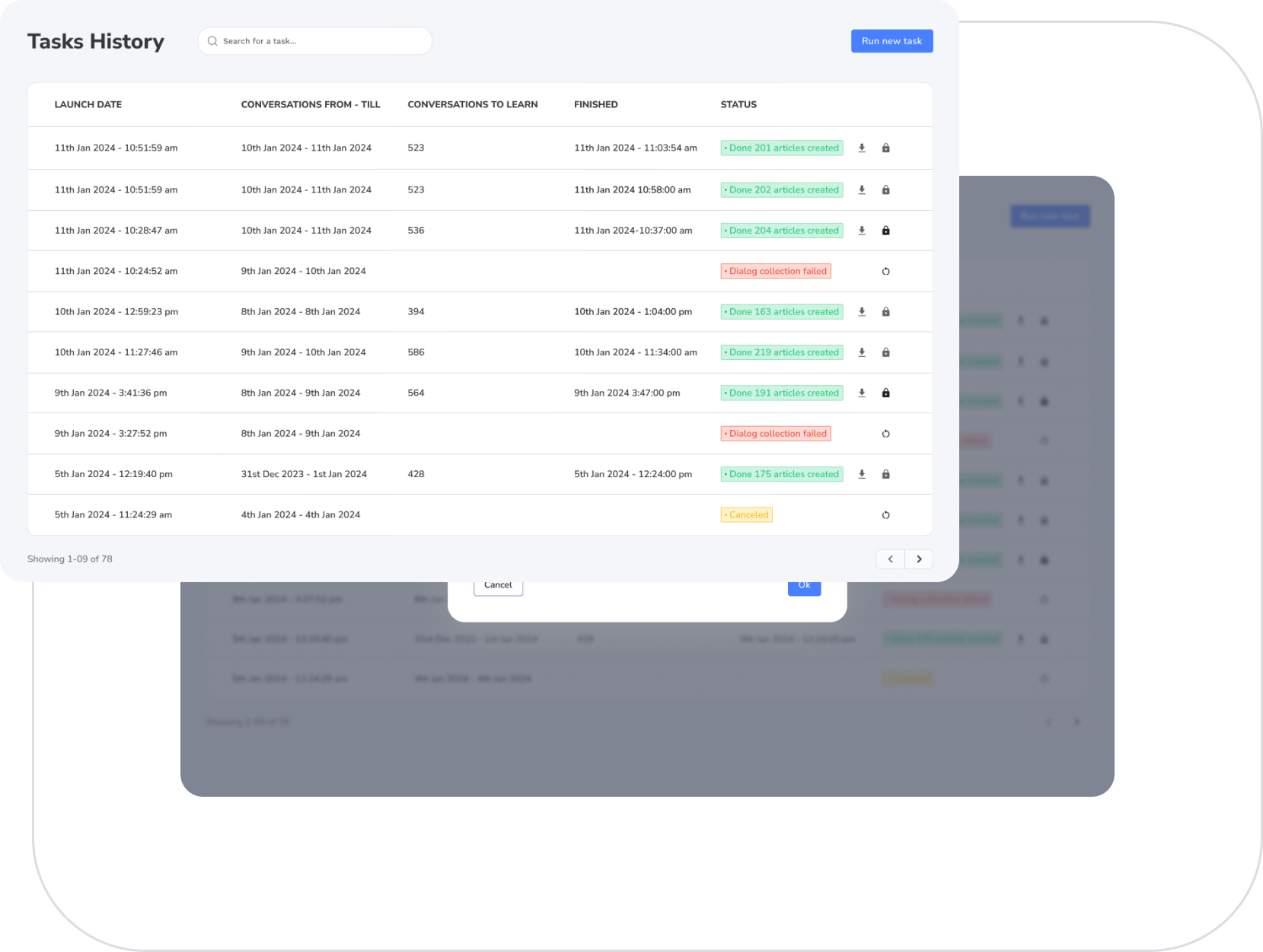

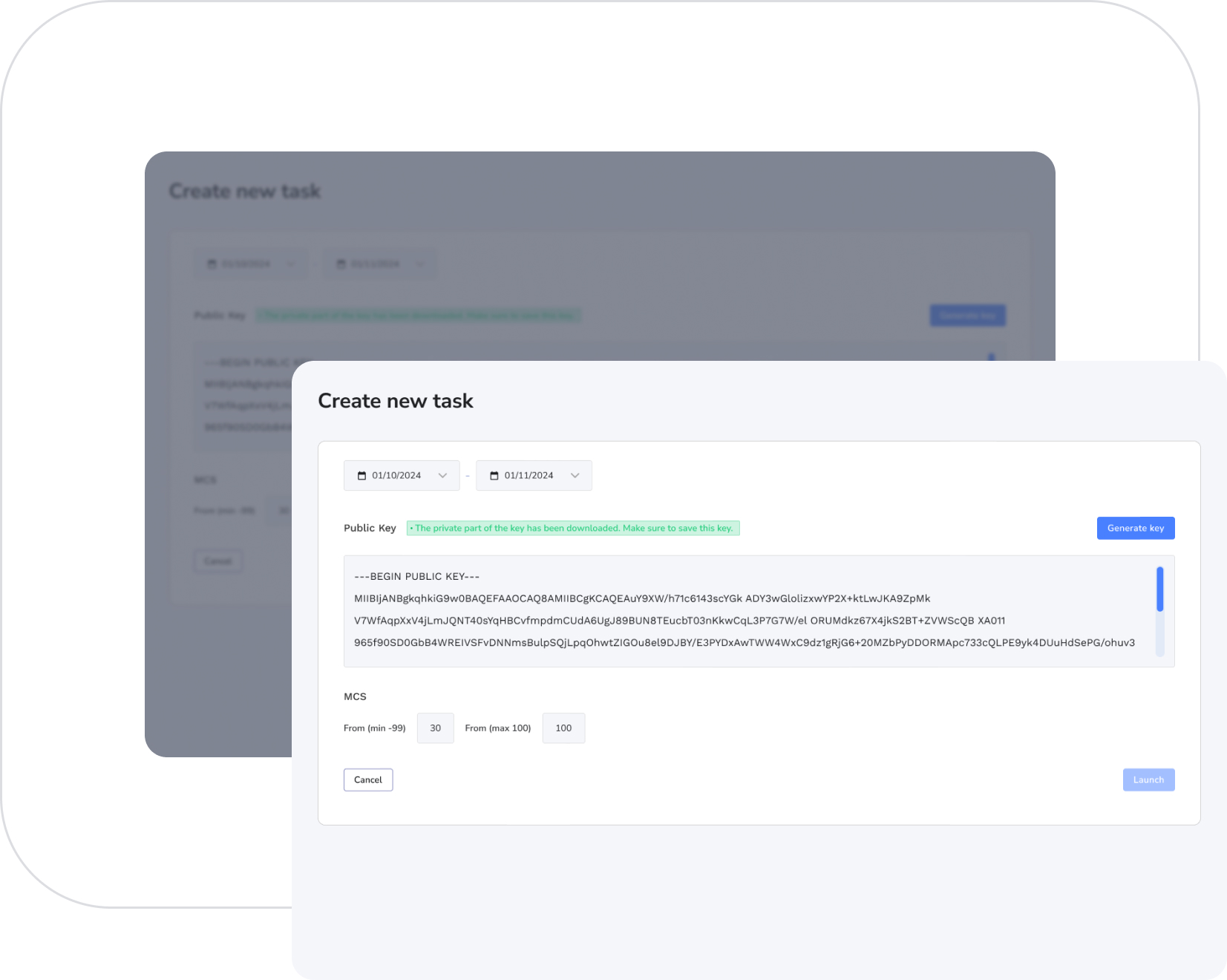

We designed an intuitive interface that lets users create a workflow to scan their conversation history. The workflow outputs “articles”: question-and-answer entries in a knowledge base format. The LLM component of the solution powers the generation of these entries. This process reduces the time needed to write them from several days to a few hours, with thousands of articles completed.
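The workflow can be pictured with a small data model. The sketch below is a minimal illustration under stated assumptions: the `Dialogue` and `Article` types and the `select_dialogues` helper are hypothetical names, not the platform’s actual API. It shows one question-and-answer article record and how a workflow might pick the past dialogues that fall inside a requested time window before passing them to the LLM.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class Dialogue:
    # Hypothetical record for one past customer conversation.
    dialogue_id: str
    started_at: datetime
    transcript: str


@dataclass
class Article:
    # Hypothetical knowledge base entry: one question with its best answer,
    # plus the dialogues it was derived from.
    question: str
    answer: str
    source_dialogue_ids: list[str] = field(default_factory=list)


def select_dialogues(dialogues: list[Dialogue], start: datetime, end: datetime) -> list[Dialogue]:
    """Return dialogues whose start time falls in the half-open window [start, end)."""
    return [d for d in dialogues if start <= d.started_at < end]


history = [
    Dialogue("d1", datetime(2024, 1, 5, tzinfo=timezone.utc), "How do I reset my password? ..."),
    Dialogue("d2", datetime(2024, 2, 10, tzinfo=timezone.utc), "Do you ship abroad? ..."),
]
january = select_dialogues(
    history,
    datetime(2024, 1, 1, tzinfo=timezone.utc),
    datetime(2024, 2, 1, tzinfo=timezone.utc),
)
print([d.dialogue_id for d in january])  # ['d1']
```

A half-open window avoids counting a dialogue twice when consecutive timeframes are requested back to back.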

To produce these results, our team had to overcome two critical challenges. Firstly, the tool had to work asynchronously to handle requests from multiple accounts separately. In addition, the system was encrypted to protect data and conversations.
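Handling several accounts asynchronously and separately can be sketched with Python’s `asyncio`. This is a minimal sketch, assuming the per-account work is I/O-bound (waiting on LLM calls); `process_account` is a hypothetical placeholder that does not call any real API, and the concurrency cap of 4 is an arbitrary illustrative value. Encryption is out of scope here.

```python
import asyncio


async def process_account(account_id: str, dialogues: list[str]) -> list[str]:
    # Hypothetical placeholder for one account's pipeline. A real system would
    # call the LLM API here; each account's data stays inside its own coroutine.
    await asyncio.sleep(0)  # yield control, standing in for I/O-bound LLM calls
    return [f"{account_id}:article-for:{d}" for d in dialogues]


async def process_all(requests: dict[str, list[str]], max_concurrency: int = 4) -> dict[str, list[str]]:
    # Cap how many accounts are processed at once so one burst of requests
    # cannot exhaust the LLM quota.
    semaphore = asyncio.Semaphore(max_concurrency)

    async def guarded(account_id: str, dialogues: list[str]) -> tuple[str, list[str]]:
        async with semaphore:
            return account_id, await process_account(account_id, dialogues)

    # gather runs the accounts concurrently and returns results in input order.
    results = await asyncio.gather(*(guarded(a, d) for a, d in requests.items()))
    return dict(results)


out = asyncio.run(process_all({"acct-a": ["d1", "d2"], "acct-b": ["d3"]}))
print(out)
```

Keeping each account in its own task means a slow or failing account does not block results for the others, which matches the requirement to handle requests separately.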

Secondly, we had to address duplicate outputs. Users of the tool can request answers based on dialogues from a specific timeframe. An AI endpoint analyzes each dialogue, and the generated articles are then embedded for similarity checks and clustered. Each cluster undergoes additional LLM processing to assemble a single consolidated answer for its question group, so customers receive a repository of truly distinct articles.
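The deduplication step (embed, cluster by similarity, consolidate) can be illustrated with a small sketch. The source does not name the clustering algorithm, so this uses a swapped-in technique: greedy threshold clustering on cosine similarity. The two-dimensional vectors are toy stand-ins for real embeddings, the 0.9 threshold is an assumption, and `consolidate` is a hypothetical placeholder for the additional LLM pass that simply keeps the longest answer.

```python
import math


def cosine(u: list[float], v: list[float]) -> float:
    # Cosine similarity; returns 0.0 if either vector has zero length.
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def cluster_by_similarity(vectors: list[list[float]], threshold: float = 0.9) -> list[list[int]]:
    """Greedy single-pass clustering: each vector joins the first cluster whose
    seed (first member) has cosine similarity >= threshold, else starts a new one."""
    clusters: list[list[int]] = []
    for i, vec in enumerate(vectors):
        for cluster in clusters:
            if cosine(vectors[cluster[0]], vec) >= threshold:
                cluster.append(i)
                break
        else:
            clusters.append([i])
    return clusters


def consolidate(cluster: list[int], answers: list[str]) -> str:
    # Hypothetical stand-in for the extra LLM pass: keep the longest answer.
    return max((answers[i] for i in cluster), key=len)


embeddings = [[1.0, 0.0], [0.98, 0.05], [0.0, 1.0]]
answers = ["Reset via Settings.", "Go to Settings > Reset password.", "We ship worldwide."]
groups = cluster_by_similarity(embeddings, threshold=0.9)
print(groups)  # [[0, 1], [2]]
print([consolidate(g, answers) for g in groups])
# ['Go to Settings > Reset password.', 'We ship worldwide.']
```

Comparing only against each cluster’s seed keeps the pass linear in the number of clusters per item; a production system would more likely use an approximate nearest-neighbor index and a proper clustering method over real embeddings.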

- Clients make greater use of the platform's knowledge base capabilities.

- Support teams save significant time and resources by automating article creation.

- Chatbots gain the ability to instantly handle common end-customer inquiries.

- Businesses facilitate faster resolutions, leading to greater client satisfaction and reduced workload.

Technologies We Leveraged

Backend Tech Stack

- Amazon CloudFront

- SAP

- Kubernetes

- NestJS

- Python

- Platform’s LLM API Gateway

Results

- 5 accounts successfully onboarded

- 6,000 insightful articles generated

- 30,000 dialogues processed and analyzed