Artificial Intelligence (AI) adoption has surged in recent years. In fact, Gartner highlights that Generative AI is currently at the Peak of Inflated Expectations in its AI hype cycle, with tools like ChatGPT leading the hype. Indeed, OpenAI’s model showcases the potential of advanced chatbots in real-world use. While businesses can harness this power, trust and reliability remain concerns. Good conversation design and GenAI guardrails are essential to making AI likable and trustworthy. They can help address ethical issues and improve user experience.

As we move forward, it’s also crucial to consider how these advancements can be integrated into broader technological strategies. One significant shift is from the mobile-first approach to embracing Conversational AI multimodality.

From Mobile-First to Conversational AI Multimodality

Since Google introduced the “mobile-first experience” in 2010, IT strategies have focused on optimizing for mobile. Now, it’s time to expand to multimodality. Multimodal technology combines different types of inputs and outputs to create a seamless user journey. Voice, chat, images, and video work together to make interactions more intuitive and accessible.

To determine if multimodal experiences suit your users, ask:

- Do they have multimodal devices?

- How valuable would a multimodal experience be for them?

- What natural conversations are they having?

- What are they looking for?

- How can a bot help?

Multimodal design integrates diverse inputs and outputs, complementing each other’s strengths. For instance, voice assistants may have difficulty recognizing certain accents, but incorporating visual elements can enhance comprehension. This approach improves accessibility and ensures a more inclusive user experience.

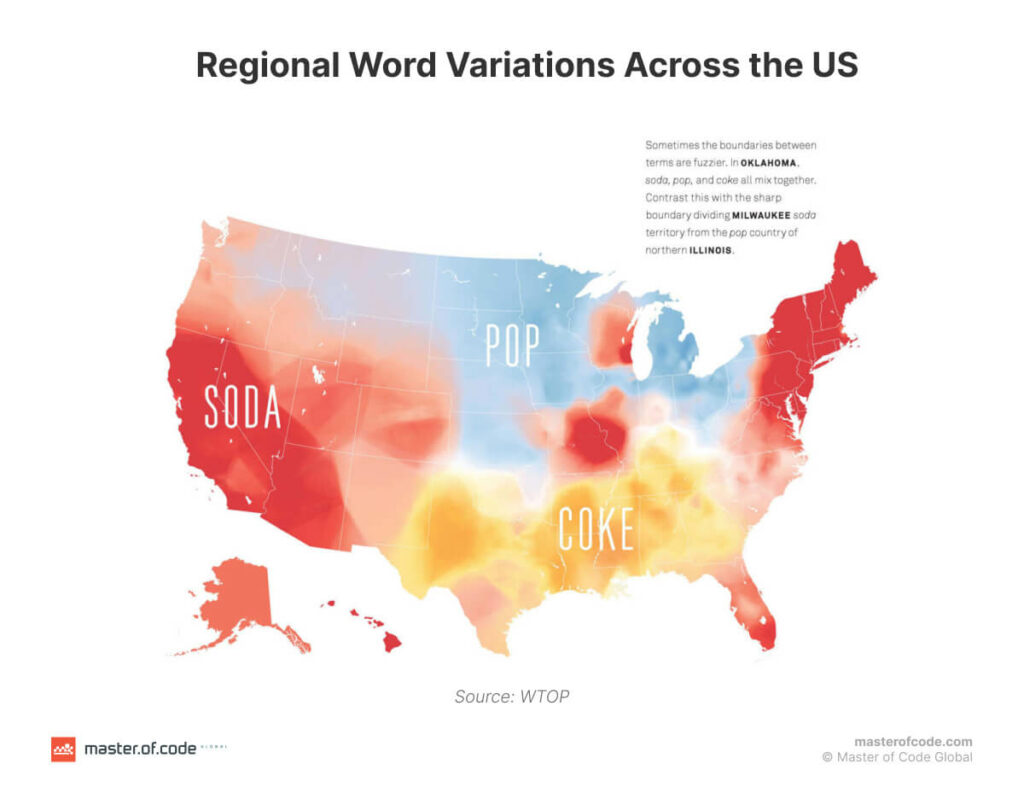

Multimodal technology is still new in enterprises but has great potential. For example, in the automotive industry, language customization is crucial due to cultural differences. Conversational AI needs stable, well-trained language models that consider dialects and languages within a country.

During a consultation for the automotive industry, we looked at English support, and it became clear that cultural context matters a great deal across US, UK, and Australian English, among other variants. The name for a car part in US English can differ from the name in UK English, so the language model needs to be customized for each variant.
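To make the point about regional vocabulary concrete, here is a minimal Python sketch of locale-aware term resolution. The table, the canonical part IDs, and the `resolve_car_part` function are hypothetical names invented for illustration; a production assistant would more likely rely on a trained language model per locale than on a lookup table.

```python
from typing import Optional

# Hypothetical synonym tables: each locale maps its everyday word for a car
# part to a shared canonical ID. The entries are illustrative only.
CAR_PART_SYNONYMS = {
    "en-US": {"hood": "engine_cover", "trunk": "rear_storage", "windshield": "front_glass"},
    "en-GB": {"bonnet": "engine_cover", "boot": "rear_storage", "windscreen": "front_glass"},
    "en-AU": {"bonnet": "engine_cover", "boot": "rear_storage", "windscreen": "front_glass"},
}


def resolve_car_part(utterance: str, locale: str) -> Optional[str]:
    """Return the canonical part ID for the first known term, or None."""
    synonyms = CAR_PART_SYNONYMS.get(locale, {})
    for word in utterance.lower().split():
        token = word.strip(".,!?")  # drop trailing punctuation before lookup
        if token in synonyms:
            return synonyms[token]
    return None


# The same sentence resolves differently depending on the user's locale.
print(resolve_car_part("My boot will not close", "en-GB"))
print(resolve_car_part("My boot will not close", "en-US"))
```

With the UK table, "boot" resolves to the rear storage compartment; with the US table it is not treated as a car part at all, which is the kind of mismatch the consultation surfaced.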

Conversation design teams need to understand how end-users talk about products, services, and specific topics the virtual assistant will need to know. Always gather sample dialogues from a diverse and representative group of end users to ensure the system accurately understands various jargon, phrases, and communication styles.

Best Use Cases for Multimodal Conversational AI Assistants

A great multimodal experience feels seamless. It allows the user to switch between different modes of interaction without disruption. Here are some of the best use cases for multimodal conversational AI assistants:

1. Customer Service

Imagine starting a chat with a customer care bot to troubleshoot an issue. If the problem requires more detail, the customer can send the bot an image or video of it. The bot can then analyze the visual input and either continue the conversation with an appropriate response or direct the user to a human agent for further assistance. This smooth transition maintains continuity and improves the overall experience.
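The flow described here can be sketched as a small routing function in Python. Everything in it is an assumption made for illustration: the `Message` shape, the `route_message` name, the 0.7 confidence threshold, and the analyzer callback that stands in for whatever vision service a real deployment would use.

```python
from dataclasses import dataclass
from typing import Callable, Optional, Tuple


@dataclass
class Message:
    text: str
    attachment_type: Optional[str] = None  # "image", "video", or None


# Hypothetical analyzer signature: returns (diagnosis, confidence in [0, 1]).
Analyzer = Callable[[Message], Tuple[str, float]]


def route_message(message: Message, analyze: Analyzer, threshold: float = 0.7) -> str:
    """Decide whether the bot keeps the conversation or hands off to an agent."""
    if message.attachment_type is None:
        return "bot: text reply"  # plain chat turn, no visual input to analyze
    if message.attachment_type not in ("image", "video"):
        return "agent: unsupported attachment"
    diagnosis, confidence = analyze(message)
    if confidence >= threshold:
        return f"bot: {diagnosis}"  # confident enough to continue in the same chat
    return "agent: low-confidence analysis"  # escalate with the context attached


# Usage with a stub analyzer standing in for a real vision model.
def stub_analyzer(message: Message) -> Tuple[str, float]:
    return ("router cable unplugged", 0.9)


print(route_message(Message("It still does not work", "image"), stub_analyzer))
```

Passing the analyzer in as a parameter keeps the routing rule separate from the model behind it, so the handoff threshold can be tuned or tested without touching the vision component.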

2. Healthcare Support

Multimodal AI can assist patients by combining voice, text, and visual aids. For example, a person might receive text reminders for medication, use voice commands to schedule appointments, and send images of a prescription to confirm details. This integrated process ensures that patients get consistent support across various modes of communication.

3. Retail and eCommerce

In retail, a multimodal AI assistant can enhance the shopping journey. Customers might use voice commands to search for products, read reviews and descriptions, and send pictures of items they are looking for. The AI can then find similar ones and display photos or videos of them. This holistic approach helps shoppers make informed purchasing decisions and improves their buying experience.

The Future of Multimodal Conversational AI Experiences

The future of AI-driven multimodal interactions promises a more natural, personalized, and accessible user experience. By integrating multiple interaction modes, AI systems can better meet user needs and expectations and boost usability and satisfaction. Brands that invest in these technologies can improve customer engagement and may also see a return on that investment.
